Table of Contents

- 1. Introduction

- 2. Additional resources

- 3. Features

- 4. Sampling

- 5. Instrumentation

- 6. Span lifecycle

- 7. Naming spans

- 8. Managing spans with annotations

- 9. Customizations

- 10. Sending spans to Zipkin

- 11. Span Data as Messages

- 12. Metrics

- 13. Integrations

- 14. Running examples

Spring Cloud Sleuth implements a distributed tracing solution for Spring Cloud.

Spring Cloud Sleuth borrows Dapper’s terminology.

Span: The basic unit of work. For example, sending an RPC is a new span, as is sending a response to an RPC. Spans are identified by a unique 64-bit ID for the span and another 64-bit ID for the trace the span is a part of. Spans also have other data, such as descriptions, timestamped events, key-value annotations (tags), the ID of the span that caused them, and process IDs (normally IP address).

Spans are started and stopped, and they keep track of their timing information. Once you create a span, you must stop it at some point in the future.

![[Tip]](images/tip.png) | Tip |

|---|---|

The initial span that starts a trace is called a |

Trace: A set of spans forming a tree-like structure. For example, if you are running a distributed big-data store, a trace might be formed by a put request.

Annotation: Used to record the existence of an event in time. Some of the core annotations used to define the start and stop of a request are:

- cs - Client Sent - The client has made a request. This annotation depicts the start of the span.

- sr - Server Received - The server side got the request and will start processing it. Subtracting the cs timestamp from this timestamp gives the network latency.

- ss - Server Sent - Annotated upon completion of request processing (when the response got sent back to the client). Subtracting the sr timestamp from this timestamp gives the time needed by the server side to process the request.

- cr - Client Received - Signifies the end of the span. The client has successfully received the response from the server side. Subtracting the cs timestamp from this timestamp gives the whole time needed by the client to receive the response from the server.
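The timestamp arithmetic behind these four annotations can be sketched in plain Java. The class `RpcTimings` and its method names are hypothetical and are not part of Sleuth or Zipkin; the timestamps are made-up millisecond values.

```java
// Hypothetical illustration of the timestamp arithmetic; not a Sleuth or Zipkin API.
final class RpcTimings {
    final long cs; // Client Sent
    final long sr; // Server Received
    final long ss; // Server Sent
    final long cr; // Client Received

    RpcTimings(long cs, long sr, long ss, long cr) {
        this.cs = cs;
        this.sr = sr;
        this.ss = ss;
        this.cr = cr;
    }

    /** sr - cs: time the request spent on the network. */
    long networkLatency() {
        return sr - cs;
    }

    /** ss - sr: time the server side needed to process the request. */
    long serverProcessingTime() {
        return ss - sr;
    }

    /** cr - cs: whole time the client waited for the response. */
    long totalClientTime() {
        return cr - cs;
    }

    public static void main(String[] args) {
        // Made-up timestamps in milliseconds.
        RpcTimings t = new RpcTimings(1_000, 1_015, 1_095, 1_110);
        System.out.println("network latency (ms): " + t.networkLatency());
        System.out.println("server processing (ms): " + t.serverProcessingTime());
        System.out.println("total client time (ms): " + t.totalClientTime());
    }
}
```

Note that cs and cr are recorded on the client while sr and ss are recorded on the server, so a difference such as sr - cs is only as accurate as the clock synchronization between the two hosts.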

The following visualization shows how Span and Trace look in a system, together with the Zipkin annotations:

Each color of a note signifies a span (7 spans, from A to G). Suppose a note contains the following information:

Trace Id = X Span Id = D Client Sent

This means that the current span has its Trace-Id set to X and its Span-Id set to D. It has also emitted the Client Sent event.

The parent / child relationship of spans can be visualized as follows:

The following sections refer to the example from the image above.

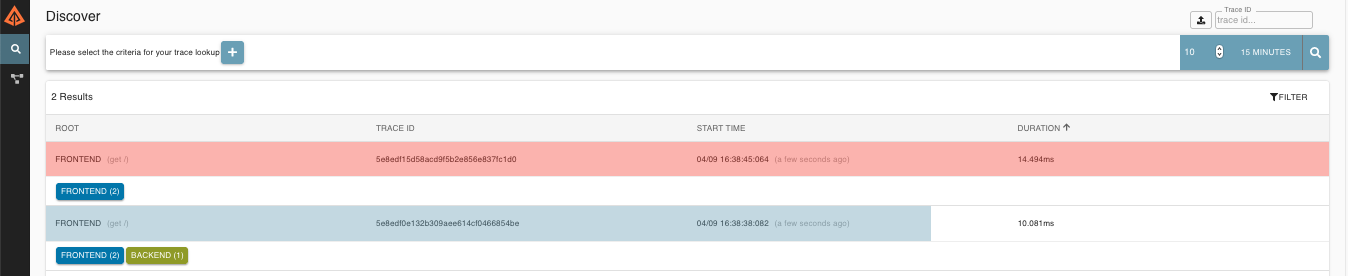

Altogether there are 7 spans. If you go to traces in Zipkin you will see this number in the second trace:

However, if you pick a particular trace then you will see 4 spans:

![[Note]](images/note.png) | Note |

|---|---|

When picking a particular trace you will see merged spans. That means that if there were 2 spans sent to Zipkin with Server Received and Server Sent / Client Received and Client Sent annotations then they will be presented as a single span. |

Why is there a difference between the 7 and 4 spans in this case?

- 1 span comes from the `http:/start` span. It has the Server Received (SR) and Server Sent (SS) annotations.

- 2 spans come from the RPC call from `service1` to `service2` to the `http:/foo` endpoint. It has the Client Sent (CS) and Client Received (CR) annotations on the `service1` side. It also has the Server Received (SR) and Server Sent (SS) annotations on the `service2` side. Physically there are 2 spans but they form 1 logical span related to an RPC call.

- 2 spans come from the RPC call from `service2` to `service3` to the `http:/bar` endpoint. It has the Client Sent (CS) and Client Received (CR) annotations on the `service2` side. It also has the Server Received (SR) and Server Sent (SS) annotations on the `service3` side. Physically there are 2 spans but they form 1 logical span related to an RPC call.

- 2 spans come from the RPC call from `service2` to `service4` to the `http:/baz` endpoint. It has the Client Sent (CS) and Client Received (CR) annotations on the `service2` side. It also has the Server Received (SR) and Server Sent (SS) annotations on the `service4` side. Physically there are 2 spans but they form 1 logical span related to an RPC call.

So if we count the physical spans we have 1 from `http:/start`, 2 from `service1` calling `service2`, 2 from `service2` calling `service3` and 2 from `service2` calling `service4`. Altogether 7 spans.

Logically we see the information of Total Spans: 4 because we have 1 span related to the incoming request to `service1` and 3 spans related to RPC calls.
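The difference between the 7 physical and the 4 logical spans can be reproduced with a small counting sketch. It assumes that the client-side and server-side halves of an RPC share one span id, which is what lets them be merged. The class `SpanCounting` and the span ids `start`, `foo`, `bar` and `baz` are hypothetical labels chosen for this illustration only.

```java
import java.util.Arrays;
import java.util.HashSet;
import java.util.List;
import java.util.Set;

// Hypothetical illustration; this is not how Zipkin is implemented.
final class SpanCounting {

    /** One reported ("physical") span: a span id plus the side that reported it. */
    static final class Reported {
        final String spanId;
        final String reportedBy;

        Reported(String spanId, String reportedBy) {
            this.spanId = spanId;
            this.reportedBy = reportedBy;
        }
    }

    /** The seven physical spans of the example request. */
    static List<Reported> example() {
        return Arrays.asList(
                new Reported("start", "service1 (SR, SS)"),
                new Reported("foo", "service1 (CS, CR)"),
                new Reported("foo", "service2 (SR, SS)"),
                new Reported("bar", "service2 (CS, CR)"),
                new Reported("bar", "service3 (SR, SS)"),
                new Reported("baz", "service2 (CS, CR)"),
                new Reported("baz", "service4 (SR, SS)"));
    }

    /** Physical spans that share a span id count as one logical span. */
    static int logicalCount(List<Reported> reported) {
        Set<String> ids = new HashSet<>();
        for (Reported r : reported) {
            ids.add(r.spanId);
        }
        return ids.size();
    }

    public static void main(String[] args) {
        List<Reported> spans = example();
        System.out.println("physical spans: " + spans.size());
        System.out.println("logical spans: " + logicalCount(spans));
    }
}
```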

Zipkin allows you to visualize errors in your trace. When an exception is thrown and not caught, Sleuth sets the proper tags on the span so that Zipkin can colorize it. In the list of traces you can see one trace in red. That’s because an exception was thrown.

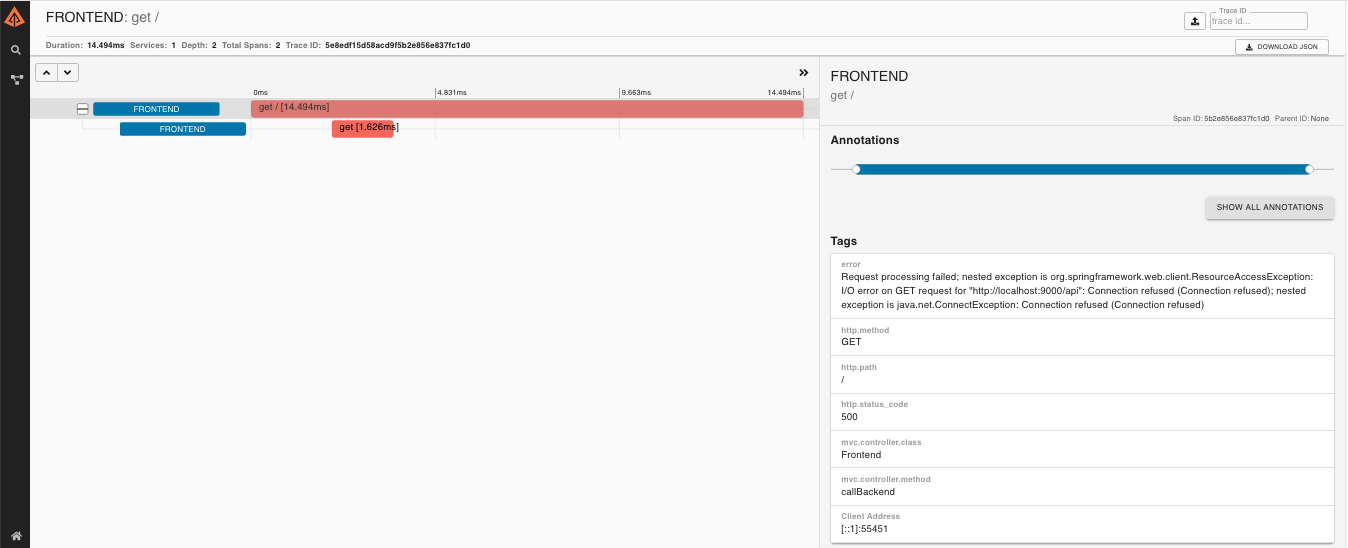

If you click that trace then you’ll see a similar picture:

Then if you click on one of the spans you’ll see the following:

This makes it easy to see the reason for an error and the whole stack trace related to it.

The dependency graph in Zipkin would look like this:

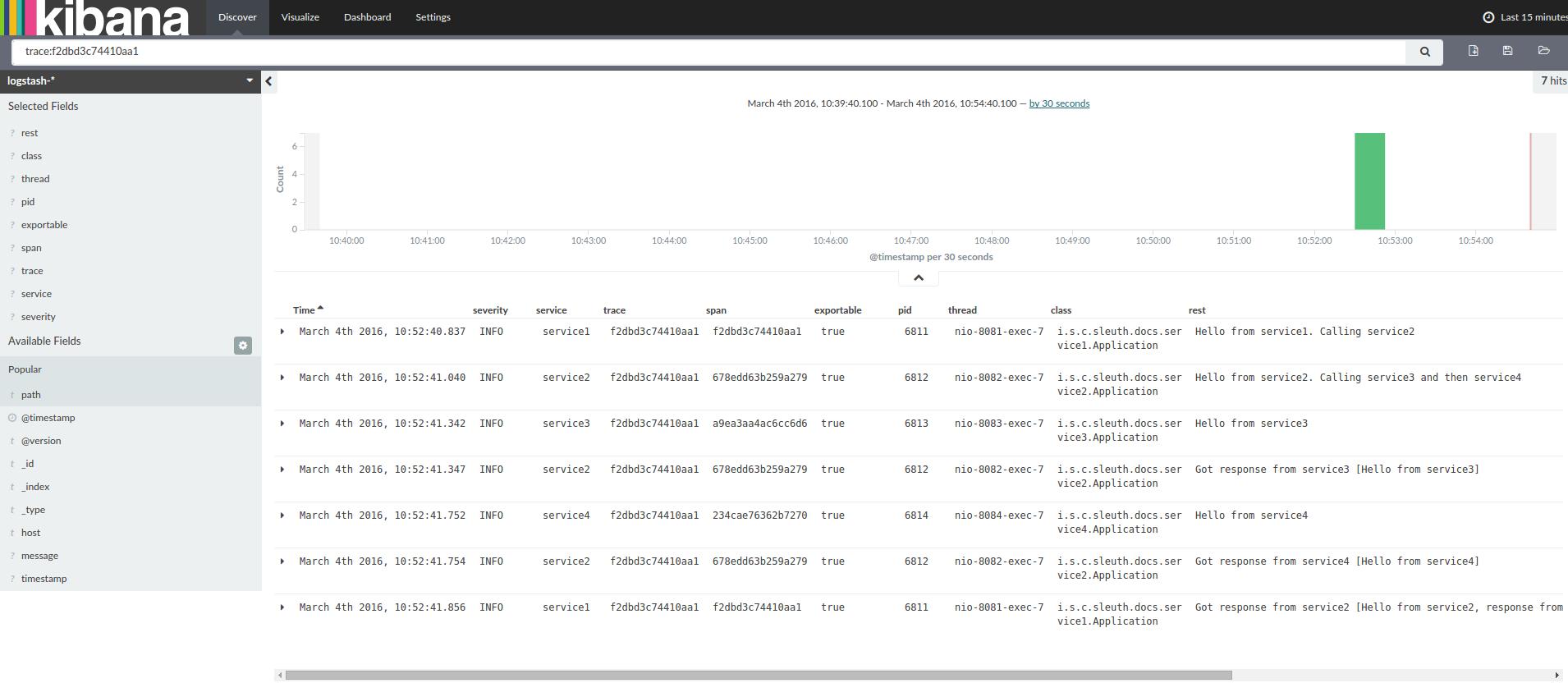

Grepping the logs of those four applications for a trace id such as 2485ec27856c56f4 gives the following:

    service1.log:2016-02-26 11:15:47.561 INFO [service1,2485ec27856c56f4,2485ec27856c56f4,true] 68058 --- [nio-8081-exec-1] i.s.c.sleuth.docs.service1.Application : Hello from service1. Calling service2
    service2.log:2016-02-26 11:15:47.710 INFO [service2,2485ec27856c56f4,9aa10ee6fbde75fa,true] 68059 --- [nio-8082-exec-1] i.s.c.sleuth.docs.service2.Application : Hello from service2. Calling service3 and then service4
    service3.log:2016-02-26 11:15:47.895 INFO [service3,2485ec27856c56f4,1210be13194bfe5,true] 68060 --- [nio-8083-exec-1] i.s.c.sleuth.docs.service3.Application : Hello from service3
    service2.log:2016-02-26 11:15:47.924 INFO [service2,2485ec27856c56f4,9aa10ee6fbde75fa,true] 68059 --- [nio-8082-exec-1] i.s.c.sleuth.docs.service2.Application : Got response from service3 [Hello from service3]
    service4.log:2016-02-26 11:15:48.134 INFO [service4,2485ec27856c56f4,1b1845262ffba49d,true] 68061 --- [nio-8084-exec-1] i.s.c.sleuth.docs.service4.Application : Hello from service4
    service2.log:2016-02-26 11:15:48.156 INFO [service2,2485ec27856c56f4,9aa10ee6fbde75fa,true] 68059 --- [nio-8082-exec-1] i.s.c.sleuth.docs.service2.Application : Got response from service4 [Hello from service4]
    service1.log:2016-02-26 11:15:48.182 INFO [service1,2485ec27856c56f4,2485ec27856c56f4,true] 68058 --- [nio-8081-exec-1] i.s.c.sleuth.docs.service1.Application : Got response from service2 [Hello from service2, response from service3 [Hello from service3] and from service4 [Hello from service4]]
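If you process such lines in your own code rather than in a log aggregator, the bracketed `[service,traceId,spanId,exportable]` section can be pulled out with a regular expression. The following is a minimal sketch; the class `SleuthLogEntry` and the exact pattern are assumptions made for illustration and are not provided by Sleuth.

```java
import java.util.regex.Matcher;
import java.util.regex.Pattern;

// Hypothetical helper; not part of Sleuth.
final class SleuthLogEntry {
    // Matches the first "[service,traceId,spanId,true|false]" group in a line.
    private static final Pattern MDC =
            Pattern.compile("\\[([^,\\]]+),([0-9a-f]+),([0-9a-f]+),(true|false)\\]");

    final String service;
    final String traceId;
    final String spanId;
    final boolean exportable;

    private SleuthLogEntry(String service, String traceId, String spanId, boolean exportable) {
        this.service = service;
        this.traceId = traceId;
        this.spanId = spanId;
        this.exportable = exportable;
    }

    /** Returns the parsed entry, or null if the line carries no tracing section. */
    static SleuthLogEntry parse(String line) {
        Matcher m = MDC.matcher(line);
        if (!m.find()) {
            return null;
        }
        return new SleuthLogEntry(m.group(1), m.group(2), m.group(3),
                Boolean.parseBoolean(m.group(4)));
    }

    public static void main(String[] args) {
        SleuthLogEntry e = parse("2016-02-26 11:15:47.710 INFO "
                + "[service2,2485ec27856c56f4,9aa10ee6fbde75fa,true] 68059 --- "
                + "[nio-8082-exec-1] i.s.c.sleuth.docs.service2.Application : Hello from service2");
        System.out.println(e.service + " trace=" + e.traceId + " span=" + e.spanId
                + " exportable=" + e.exportable);
    }
}
```

The thread name in brackets (for example `[nio-8082-exec-1]`) contains no commas, so the pattern skips it.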

If you’re using a log aggregating tool like Kibana, Splunk etc. you can order the events that took place. An example of Kibana would look like this:

If you want to use Logstash here is the Grok pattern for Logstash:

    filter {
      # pattern matching logback pattern
      grok {
        match => { "message" => "%{TIMESTAMP_ISO8601:timestamp}\s+%{LOGLEVEL:severity}\s+\[%{DATA:service},%{DATA:trace},%{DATA:span},%{DATA:exportable}\]\s+%{DATA:pid}\s+---\s+\[%{DATA:thread}\]\s+%{DATA:class}\s+:\s+%{GREEDYDATA:rest}" }
      }
    }

![[Note]](images/note.png) | Note |

|---|---|

If you want to use Grok together with the logs from Cloud Foundry you have to use this pattern: |

    filter {
      # pattern matching logback pattern
      grok {
        match => { "message" => "(?m)OUT\s+%{TIMESTAMP_ISO8601:timestamp}\s+%{LOGLEVEL:severity}\s+\[%{DATA:service},%{DATA:trace},%{DATA:span},%{DATA:exportable}\]\s+%{DATA:pid}\s+---\s+\[%{DATA:thread}\]\s+%{DATA:class}\s+:\s+%{GREEDYDATA:rest}" }
      }
    }

Often you do not want to store your logs in a text file but in a JSON file that Logstash can immediately pick up. To do that you have to do the following (for readability we’re passing the dependencies in the groupId:artifactId:version notation).

Dependencies setup

- Ensure that Logback is on the classpath (`ch.qos.logback:logback-core`)

- Add the Logstash Logback encoder - example for version 4.6: `net.logstash.logback:logstash-logback-encoder:4.6`

Logback setup

Below you can find an example of a Logback configuration (file named logback-spring.xml) that:

- logs information from the application in a JSON format to a `build/${spring.application.name}.json` file

- has commented out two additional appenders - console and standard log file

- has the same logging pattern as the one presented in the previous section

    <?xml version="1.0" encoding="UTF-8"?>
    <configuration>
        <include resource="org/springframework/boot/logging/logback/defaults.xml"/>

        <springProperty scope="context" name="springAppName" source="spring.application.name"/>
        <!-- Example for logging into the build folder of your project -->
        <property name="LOG_FILE" value="${BUILD_FOLDER:-build}/${springAppName}"/>

        <!-- You can override this to have a custom pattern -->
        <property name="CONSOLE_LOG_PATTERN"
                  value="%clr(%d{yyyy-MM-dd HH:mm:ss.SSS}){faint} %clr(${LOG_LEVEL_PATTERN:-%5p}) %clr(${PID:- }){magenta} %clr(---){faint} %clr([%15.15t]){faint} %clr(%-40.40logger{39}){cyan} %clr(:){faint} %m%n${LOG_EXCEPTION_CONVERSION_WORD:-%wEx}"/>

        <!-- Appender to log to console -->
        <appender name="console" class="ch.qos.logback.core.ConsoleAppender">
            <filter class="ch.qos.logback.classic.filter.ThresholdFilter">
                <!-- Minimum logging level to be presented in the console logs-->
                <level>DEBUG</level>
            </filter>
            <encoder>
                <pattern>${CONSOLE_LOG_PATTERN}</pattern>
                <charset>utf8</charset>
            </encoder>
        </appender>

        <!-- Appender to log to file -->
        <appender name="flatfile" class="ch.qos.logback.core.rolling.RollingFileAppender">
            <file>${LOG_FILE}</file>
            <rollingPolicy class="ch.qos.logback.core.rolling.TimeBasedRollingPolicy">
                <fileNamePattern>${LOG_FILE}.%d{yyyy-MM-dd}.gz</fileNamePattern>
                <maxHistory>7</maxHistory>
            </rollingPolicy>
            <encoder>
                <pattern>${CONSOLE_LOG_PATTERN}</pattern>
                <charset>utf8</charset>
            </encoder>
        </appender>

        <!-- Appender to log to file in a JSON format -->
        <appender name="logstash" class="ch.qos.logback.core.rolling.RollingFileAppender">
            <file>${LOG_FILE}.json</file>
            <rollingPolicy class="ch.qos.logback.core.rolling.TimeBasedRollingPolicy">
                <fileNamePattern>${LOG_FILE}.json.%d{yyyy-MM-dd}.gz</fileNamePattern>
                <maxHistory>7</maxHistory>
            </rollingPolicy>
            <encoder class="net.logstash.logback.encoder.LoggingEventCompositeJsonEncoder">
                <providers>
                    <timestamp>
                        <timeZone>UTC</timeZone>
                    </timestamp>
                    <pattern>
                        <pattern>
                            {
                            "severity": "%level",
                            "service": "${springAppName:-}",
                            "trace": "%X{X-B3-TraceId:-}",
                            "span": "%X{X-B3-SpanId:-}",
                            "parent": "%X{X-B3-ParentSpanId:-}",
                            "exportable": "%X{X-Span-Export:-}",
                            "pid": "${PID:-}",
                            "thread": "%thread",
                            "class": "%logger{40}",
                            "rest": "%message"
                            }
                        </pattern>
                    </pattern>
                </providers>
            </encoder>
        </appender>

        <root level="INFO">
            <appender-ref ref="console"/>
            <!-- uncomment this to have also JSON logs -->
            <!--<appender-ref ref="logstash"/>-->
            <!--<appender-ref ref="flatfile"/>-->
        </root>
    </configuration>

![[Note]](images/note.png) | Note |

|---|---|

If you’re using a custom |

The span context is the state that must get propagated to any child Spans across process boundaries. Part of the Span Context is the Baggage. The trace and span IDs are a required part of the span context. Baggage is an optional part.

Baggage is a set of key:value pairs stored in the span context. Baggage travels together with the trace and is attached to every span. Spring Cloud Sleuth will understand that a header is baggage related if the HTTP header is prefixed with `baggage-` and for messaging it starts with `baggage_`.
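The prefix convention can be illustrated with a small, self-contained sketch that picks the baggage entries out of a map of HTTP headers. The class `BaggageHeaders` is hypothetical and is not how Sleuth implements extraction. Matching the prefix case-insensitively is an assumption of this sketch, based on HTTP header names being case-insensitive.

```java
import java.util.LinkedHashMap;
import java.util.Map;

// Hypothetical illustration of the "baggage-" prefix convention; not a Sleuth API.
final class BaggageHeaders {
    static final String HTTP_PREFIX = "baggage-";

    /** Returns the baggage entries found in the headers, keyed without the prefix. */
    static Map<String, String> extract(Map<String, String> headers) {
        Map<String, String> baggage = new LinkedHashMap<>();
        for (Map.Entry<String, String> header : headers.entrySet()) {
            String name = header.getKey();
            // Assumption: compare the prefix case-insensitively.
            if (name.regionMatches(true, 0, HTTP_PREFIX, 0, HTTP_PREFIX.length())) {
                baggage.put(name.substring(HTTP_PREFIX.length()), header.getValue());
            }
        }
        return baggage;
    }

    public static void main(String[] args) {
        Map<String, String> headers = new LinkedHashMap<>();
        headers.put("X-B3-TraceId", "2485ec27856c56f4");
        headers.put("baggage-foo", "bar");
        System.out.println(extract(headers));
    }
}
```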

![[Important]](images/important.png) | Important |

|---|---|

There’s currently no limitation of the count or size of baggage items. However, keep in mind that too many can decrease system throughput or increase RPC latency. In extreme cases, it could crash the app due to exceeding transport-level message or header capacity. |

Example of setting baggage on a span:

    Span initialSpan = this.tracer.createSpan("span");
    initialSpan.setBaggageItem("foo", "bar");
    initialSpan.setBaggageItem("UPPER_CASE", "someValue");

Baggage travels with the trace (i.e. every child span contains the baggage of its parent). Zipkin has no knowledge of baggage and will not even receive that information.

Tags are attached to a specific span - they are presented for that particular span only. However, you can search by tag to find the trace that contains a span with the searched tag value.

If you want to be able to look up a span based on baggage, you should add a corresponding entry as a tag in the root span.

    @Autowired Tracer tracer;

    Span span = tracer.getCurrentSpan();
    String baggageKey = "key";
    String baggageValue = "foo";
    span.setBaggageItem(baggageKey, baggageValue);
    tracer.addTag(baggageKey, baggageValue);

![[Important]](images/important.png) | Important |

|---|---|

To ensure that your application name is properly displayed in Zipkin

set the |

If you only want to use Spring Cloud Sleuth, without the Zipkin integration, just add the `spring-cloud-starter-sleuth` module to your project.

Maven.

    <dependencyManagement>
        <dependencies>
            <dependency>
                <groupId>org.springframework.cloud</groupId>
                <artifactId>spring-cloud-dependencies</artifactId>
                <version>${release.train.version}</version>
                <type>pom</type>
                <scope>import</scope>
            </dependency>
        </dependencies>
    </dependencyManagement>

    <dependency>
        <groupId>org.springframework.cloud</groupId>
        <artifactId>spring-cloud-starter-sleuth</artifactId>
    </dependency>

In order not to pick versions by yourself it’s much better if you add the dependency management via the Spring BOM | |

Add the dependency to |

Gradle.

    dependencyManagement {
        imports {
            mavenBom "org.springframework.cloud:spring-cloud-dependencies:${releaseTrainVersion}"
        }
    }

    dependencies {
        compile "org.springframework.cloud:spring-cloud-starter-sleuth"
    }

If you want both Sleuth and Zipkin just add the spring-cloud-starter-zipkin dependency.

Maven.

    <dependencyManagement>
        <dependencies>
            <dependency>
                <groupId>org.springframework.cloud</groupId>
                <artifactId>spring-cloud-dependencies</artifactId>
                <version>${release.train.version}</version>
                <type>pom</type>
                <scope>import</scope>
            </dependency>
        </dependencies>
    </dependencyManagement>

    <dependency>
        <groupId>org.springframework.cloud</groupId>
        <artifactId>spring-cloud-starter-zipkin</artifactId>
    </dependency>

In order not to pick versions by yourself it’s much better if you add the dependency management via the Spring BOM | |

Add the dependency to |

Gradle.

    dependencyManagement {
        imports {
            mavenBom "org.springframework.cloud:spring-cloud-dependencies:${releaseTrainVersion}"
        }
    }

    dependencies {
        compile "org.springframework.cloud:spring-cloud-starter-zipkin"
    }

If you want to use RabbitMQ or Kafka instead of http, add the `spring-rabbit` or `spring-kafka` dependencies. The default destination name is `zipkin`.

Note: `spring-cloud-sleuth-stream` is deprecated and incompatible with these destinations.

If you want Sleuth over RabbitMQ add the `spring-cloud-starter-zipkin` and `spring-rabbit` dependencies.

Maven.

    <dependencyManagement>
        <dependencies>
            <dependency>
                <groupId>org.springframework.cloud</groupId>
                <artifactId>spring-cloud-dependencies</artifactId>
                <version>${release.train.version}</version>
                <type>pom</type>
                <scope>import</scope>
            </dependency>
        </dependencies>
    </dependencyManagement>

    <dependency>
        <groupId>org.springframework.cloud</groupId>
        <artifactId>spring-cloud-starter-zipkin</artifactId>
    </dependency>
    <dependency>
        <groupId>org.springframework.amqp</groupId>
        <artifactId>spring-rabbit</artifactId>
    </dependency>

In order not to pick versions by yourself it’s much better if you add the dependency management via the Spring BOM | |

Add the dependency to | |

To automatically configure rabbit, simply add the spring-rabbit dependency |

Gradle.

    dependencyManagement {
        imports {
            mavenBom "org.springframework.cloud:spring-cloud-dependencies:${releaseTrainVersion}"
        }
    }

    dependencies {
        compile "org.springframework.cloud:spring-cloud-starter-zipkin"
        compile "org.springframework.amqp:spring-rabbit"
    }

Marcin Grzejszczak talking about Spring Cloud Sleuth and Zipkin

- Adds trace and span ids to the Slf4J MDC, so you can extract all the logs from a given trace or span in a log aggregator. Example logs:

        2016-02-02 15:30:57.902 INFO [bar,6bfd228dc00d216b,6bfd228dc00d216b,false] 23030 --- [nio-8081-exec-3] ...
        2016-02-02 15:30:58.372 ERROR [bar,6bfd228dc00d216b,6bfd228dc00d216b,false] 23030 --- [nio-8081-exec-3] ...
        2016-02-02 15:31:01.936 INFO [bar,46ab0d418373cbc9,46ab0d418373cbc9,false] 23030 --- [nio-8081-exec-4] ...

  Notice the `[appname,traceId,spanId,exportable]` entries from the MDC:

  - spanId - the id of a specific operation that took place

  - appname - the name of the application that logged the span

  - traceId - the id of the latency graph that contains the span

  - exportable - whether the log should be exported to Zipkin or not. When would you like the span not to be exportable? In the case in which you want to wrap some operation in a Span and have it written to the logs only.

- Provides an abstraction over common distributed tracing data models: traces, spans (forming a DAG), annotations, key-value annotations. Loosely based on HTrace, but Zipkin (Dapper) compatible.

- Sleuth records timing information to aid in latency analysis. Using Sleuth, you can pinpoint causes of latency in your applications. Sleuth is written to not log too much, and to not cause your production application to crash.

  - propagates structural data about your call-graph in-band, and the rest out-of-band.

  - includes opinionated instrumentation of layers such as HTTP

  - includes sampling policy to manage volume

  - can report to a Zipkin system for query and visualization

- Instruments common ingress and egress points from Spring applications (servlet filter, async endpoints, rest template, scheduled actions, message channels, zuul filters, feign client).

- Sleuth includes default logic to join a trace across http or messaging boundaries. For example, http propagation works via Zipkin-compatible request headers. This propagation logic is defined and customized via `SpanInjector` and `SpanExtractor` implementations.

- Sleuth gives you the possibility to propagate context (also known as baggage) between processes. That means that if you set a baggage element on a Span then it will be sent downstream either via HTTP or messaging to other processes.

- Provides a way to create / continue spans and add tags and logs via annotations.

- Provides simple metrics of accepted / dropped spans.

- If `spring-cloud-sleuth-zipkin` is on the classpath then the app will generate and collect Zipkin-compatible traces. By default it sends them via HTTP to a Zipkin server on localhost (port 9411). Configure the location of the service using `spring.zipkin.baseUrl`.

  - If you depend on `spring-rabbit` or `spring-kafka` your app will send traces to a broker instead of http.

  - Note: `spring-cloud-sleuth-stream` is deprecated and should no longer be used.

![[Important]](images/important.png) | Important |

|---|---|

If using Zipkin, configure the percentage of spans exported using |

![[Note]](images/note.png) | Note |

|---|---|

The SLF4J MDC is always set and logback users will immediately see the trace and span ids in logs per the example above. Other logging systems have to configure their own formatter to get the same result. The default is |

In distributed tracing the data volumes can be very high so sampling can be important (you usually don’t need to export all spans to get a good picture of what is happening). Spring Cloud Sleuth has a `Sampler` strategy that you can implement to take control of the sampling algorithm. Samplers do not stop span (correlation) ids from being generated, but they do prevent the tags and events being attached and exported. By default you get a strategy that continues to trace if a span is already active, but new ones are always marked as non-exportable. If all your apps run with this sampler you will see traces in logs, but not in any remote store. For testing the default is often enough, and it probably is all you need if you are only using the logs (e.g. with an ELK aggregator). If you are exporting span data to Zipkin or Spring Cloud Stream, there is also an `AlwaysSampler` that exports everything and a `PercentageBasedSampler` that samples a fixed fraction of spans.
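To make the idea of sampling a fixed fraction concrete, here is a minimal, self-contained sketch of one possible decision rule. The class `FractionSampler` is hypothetical: it does not implement Sleuth's `Sampler` interface and makes no claim about how `PercentageBasedSampler` decides internally. It simply marks the first `percent` of every 100 decisions as exportable.

```java
import java.util.concurrent.atomic.AtomicLong;

// Hypothetical illustration of fixed-fraction sampling; not Sleuth's implementation.
final class FractionSampler {
    private final int percent;
    private final AtomicLong counter = new AtomicLong();

    FractionSampler(int percent) {
        if (percent < 0 || percent > 100) {
            throw new IllegalArgumentException("percent must be between 0 and 100");
        }
        this.percent = percent;
    }

    /** Returns true when the span that starts a new trace should be exported. */
    boolean isSampled() {
        long n = counter.getAndIncrement();
        // Within each window of 100 decisions, the first `percent` are sampled.
        return (n % 100) < percent;
    }

    public static void main(String[] args) {
        FractionSampler sampler = new FractionSampler(10);
        int exported = 0;
        for (int i = 0; i < 1000; i++) {
            if (sampler.isSampled()) {
                exported++;
            }
        }
        System.out.println("exported " + exported + " of 1000 traces");
    }
}
```

A counter keeps the sketch deterministic and easy to test. A randomized decision would avoid always picking the same positions in each window, at the cost of only approximating the target fraction.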

![[Note]](images/note.png) | Note |

|---|---|

the |

A sampler can be installed just by creating a bean definition, e.g.:

    @Bean
    public Sampler defaultSampler() {
        return new AlwaysSampler();
    }

![[Tip]](images/tip.png) | Tip |

|---|---|

You can set the HTTP header |

Spring Cloud Sleuth instruments all your Spring application automatically, so you shouldn’t have to do anything to activate it. The instrumentation is added using a variety of technologies according to the stack that is available, e.g. for a servlet web application we use a `Filter`, and for Spring Integration we use `ChannelInterceptors`.

You can customize the keys used in span tags. To limit the volume of span data, by default an HTTP request will be tagged only with a handful of metadata like the status code, host and URL. You can add request headers by configuring `spring.sleuth.keys.http.headers` (a list of header names).

![[Note]](images/note.png) | Note |

|---|---|

Remember that tags are only collected and exported if there is a |

![[Note]](images/note.png) | Note |

|---|---|

Currently the instrumentation in Spring Cloud Sleuth is eager - it means that we’re actively trying to pass the tracing context between threads. Also timing events are captured even when sleuth isn’t exporting data to a tracing system. This approach may change in the future towards being lazy on this matter. |

You can do the following operations on the Span by means of the `org.springframework.cloud.sleuth.Tracer` interface:

- start - when you start a span its name is assigned and the start timestamp is recorded.

- close - the span gets finished (the end time of the span is recorded) and if the span is exportable then it will be eligible for collection to Zipkin. The span is also removed from the current thread.

- continue - a new instance of span is created, as a copy of the one that it continues.

- detach - the span doesn’t get stopped or closed. It only gets removed from the current thread.

- create with explicit parent - you can create a new span and set an explicit parent to it

![[Tip]](images/tip.png) | Tip |

|---|---|

Spring creates the instance of |

You can manually create spans by using the Tracer interface.

    // Start a span. If there was a span present in this thread it will become
    // the `newSpan`'s parent.
    Span newSpan = this.tracer.createSpan("calculateTax");
    try {
        // ...
        // You can tag a span
        this.tracer.addTag("taxValue", taxValue);
        // ...
        // You can log an event on a span
        newSpan.logEvent("taxCalculated");
    } finally {
        // Once done remember to close the span. This will allow collecting
        // the span to send it to Zipkin
        this.tracer.close(newSpan);
    }

This example shows how to create a new instance of a span. If a span was already present in this thread, it becomes the parent of the new span.

![[Important]](images/important.png) | Important |

|---|---|

Always clean after you create a span! Don’t forget to close a span if you want to send it to Zipkin. |

![[Important]](images/important.png) | Important |

|---|---|

If your span has a name longer than 50 characters, that name will be truncated to 50 characters. Your names have to be explicit and concrete. Long names lead to latency issues and sometimes even thrown exceptions. |

Sometimes you don’t want to create a new span but you want to continue one. Examples of such a situation might be (of course it all depends on the use-case):

- AOP - If there was already a span created before an aspect was reached then you might not want to create a new span.

- Hystrix - executing a Hystrix command is most likely a logical part of the current processing. It’s in fact only a technical implementation detail that you wouldn’t necessarily want to reflect in tracing as a separate entity.

The continued instance of span is equal to the one that it continues:

    Span continuedSpan = this.tracer.continueSpan(spanToContinue);
    assertThat(continuedSpan).isEqualTo(spanToContinue);

To continue a span you can use the Tracer interface.

    // let's assume that we're in a thread Y and we've received
    // the `initialSpan` from thread X
    Span continuedSpan = this.tracer.continueSpan(initialSpan);
    try {
        // ...
        // You can tag a span
        this.tracer.addTag("taxValue", taxValue);
        // ...
        // You can log an event on a span
        continuedSpan.logEvent("taxCalculated");
    } finally {
        // Once done remember to detach the span. That way you'll
        // safely remove it from the current thread without closing it
        this.tracer.detach(continuedSpan);
    }

![[Important]](images/important.png) | Important |

|---|---|

Always clean after you create a span! Don’t forget to detach a span if some work was started in one thread (e.g. thread X) and it’s waiting for other threads (e.g. Y, Z) to finish. Then the spans in the threads Y, Z should be detached at the end of their work. When the results are collected the span in thread X should be closed. |

You may want to start a new span and provide an explicit parent of that span. Let’s assume that the parent of a span is in one thread and you want to start a new span in another thread. The `createSpan` method of the Tracer interface, called with the parent span as its second argument, is the method you are looking for.

    // let's assume that we're in a thread Y and we've received
    // the `initialSpan` from thread X. `initialSpan` will be the parent
    // of the `newSpan`
    Span newSpan = this.tracer.createSpan("calculateCommission", initialSpan);
    try {
        // ...
        // You can tag a span
        this.tracer.addTag("commissionValue", commissionValue);
        // ...
        // You can log an event on a span
        newSpan.logEvent("commissionCalculated");
    } finally {
        // Once done remember to close the span. This will allow collecting
        // the span to send it to Zipkin. The tags and events set on the
        // newSpan will not be present on the parent
        this.tracer.close(newSpan);
    }

![[Important]](images/important.png) | Important |

|---|---|

After having created such a span remember to close it. Otherwise you will see a lot of warnings in your logs related to the fact that you have a span present in the current thread other than the one you’re trying to close. What’s worse your spans won’t get closed properly thus will not get collected to Zipkin. |

Picking a span name is not a trivial task. A span name should depict an operation name. The name should be low cardinality (e.g. not include identifiers).

Since there is a lot of instrumentation going on, some of the span names will be artificial, like:

- `controller-method-name` when received by a Controller with a method name `controllerMethodName`

- `async` for asynchronous operations done via wrapped `Callable` and `Runnable`.

- `@Scheduled` annotated methods will return the simple name of the class.

Fortunately, for the asynchronous processing you can provide explicit naming.

You can name the span explicitly via the @SpanName annotation.

    @SpanName("calculateTax")
    class TaxCountingRunnable implements Runnable {

        @Override public void run() {
            // perform logic
        }
    }

In this case, when processed in the following manner:

    Runnable runnable = new TraceRunnable(tracer, spanNamer, new TaxCountingRunnable());
    Future<?> future = executorService.submit(runnable);
    // ... some additional logic ...
    future.get();

The span will be named `calculateTax`.

It’s pretty rare to create separate classes for `Runnable` or `Callable`. Typically one creates an anonymous instance of those classes. You can’t annotate such classes, so if there is no `@SpanName` annotation present, we check whether the class has a custom implementation of the `toString()` method.

So executing such code:

    Runnable runnable = new TraceRunnable(tracer, spanNamer, new Runnable() {
        @Override public void run() {
            // perform logic
        }

        @Override public String toString() {
            return "calculateTax";
        }
    });
    Future<?> future = executorService.submit(runnable);
    // ... some additional logic ...
    future.get();

leads to creating a span named `calculateTax`.
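The naming order described above (annotation first, then a custom `toString()`, then a default) can be sketched without any Sleuth dependency. Everything in this block is hypothetical: `@Named` is a stand-in for `@SpanName`, and detecting a custom `toString()` through reflection is an assumption of this sketch, not necessarily how Sleuth checks it.

```java
import java.lang.annotation.ElementType;
import java.lang.annotation.Retention;
import java.lang.annotation.RetentionPolicy;
import java.lang.annotation.Target;

// Hypothetical illustration of the naming precedence; not Sleuth's implementation.
final class NameResolution {

    /** Stand-in for @SpanName, declared here so the sketch is self-contained. */
    @Retention(RetentionPolicy.RUNTIME)
    @Target(ElementType.TYPE)
    @interface Named {
        String value();
    }

    static String resolve(Object task, String fallback) {
        // 1. An explicit annotation on the class wins.
        Named named = task.getClass().getAnnotation(Named.class);
        if (named != null) {
            return named.value();
        }
        // 2. Otherwise use toString(), but only if the class overrides it.
        try {
            Class<?> declaring = task.getClass().getMethod("toString").getDeclaringClass();
            if (declaring != Object.class) {
                return task.toString();
            }
        } catch (NoSuchMethodException e) {
            // Not expected: every object has a public toString().
        }
        // 3. Fall back to a default name.
        return fallback;
    }

    @Named("calculateTax")
    static final class TaxCountingRunnable implements Runnable {
        @Override public void run() {
            // perform logic
        }
    }

    public static void main(String[] args) {
        Runnable anonymous = new Runnable() {
            @Override public void run() {
            }

            @Override public String toString() {
                return "calculateTax";
            }
        };
        System.out.println(resolve(new TaxCountingRunnable(), "async"));
        System.out.println(resolve(anonymous, "async"));
    }
}
```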

The main arguments for this feature are:

- api-agnostic means to collaborate with a span

  - use of annotations allows users to add to a span with no library dependency on a span api. This allows Sleuth to change its core api with less impact on user code.

- reduced surface area for basic span operations

  - without this feature one has to use the span api, which has lifecycle commands that could be used incorrectly. By only exposing scope, tag and log functionality, users can collaborate without accidentally breaking span lifecycle.

- collaboration with runtime generated code

  - with libraries such as Spring Data / Feign the implementations of interfaces are generated at runtime, thus span wrapping of objects was tedious. Now you can provide annotations over interfaces and arguments of those interfaces.

If you really don’t want to take care of creating local spans manually you can use the `@NewSpan` annotation. The `@SpanTag` annotation is also available to add tags in an automated fashion.

Let’s look at some examples of usage.

    @NewSpan
    void testMethod();

Annotating the method without any parameter leads to the creation of a new span whose name is equal to the annotated method name.

    @NewSpan("customNameOnTestMethod4")
    void testMethod4();

If you provide the value in the annotation (either directly or via the `name` parameter) then the created span will have the provided value as its name.

    // method declaration
    @NewSpan(name = "customNameOnTestMethod5")
    void testMethod5(@SpanTag("testTag") String param);

    // and method execution
    this.testBean.testMethod5("test");

You can combine both the name and a tag. Let’s focus on the latter. In this case the runtime value of the annotated method’s parameter becomes the value of the tag. In our sample the tag key will be `testTag` and the tag value will be `test`.

    @NewSpan(name = "customNameOnTestMethod3")
    @Override
    public void testMethod3() {
    }

You can place the `@NewSpan` annotation on both the class and an interface. If you override the interface’s method and provide a different value of the `@NewSpan` annotation then the most concrete one wins (in this case `customNameOnTestMethod3` will be set).

If you want to just add tags and annotations to an existing span it’s enough to use the `@ContinueSpan` annotation as presented below. Note that in contrast with the `@NewSpan` annotation you can also add logs via the `log` parameter:

    // method declaration
    @ContinueSpan(log = "testMethod11")
    void testMethod11(@SpanTag("testTag11") String param);

    // method execution
    this.testBean.testMethod11("test");

That way the span will get continued and:

- logs with name testMethod11.before and testMethod11.after will be created

- if an exception is thrown, a log testMethod11.afterFailure will also be created

- tag with key testTag11 and value test will be created

There are 3 different ways to add tags to a span. All of them are controlled by the SpanTag annotation.

Precedence is:

- try with the bean of TagValueResolver type and provided name

- if one hasn’t provided the bean name, try to evaluate an expression. We’re searching for a TagValueExpressionResolver bean. The default implementation uses SPEL expression resolution. If we do not find any expression to evaluate, return the toString() value of the parameter. IMPORTANT: You can only reference properties from the SPEL expression. Method execution is not allowed due to security constraints.

- if one hasn’t provided any expression to evaluate, just return a toString() value of the parameter
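The precedence described above can be sketched in plain Java. This is only an illustration: TagValueSketch, its resolve method, and the two functional parameters are hypothetical stand-ins for the resolver bean and the expression resolver, not Sleuth types.

```java
import java.util.function.BiFunction;
import java.util.function.Function;

/** Illustrative sketch of the tag value precedence; not Sleuth's implementation. */
final class TagValueSketch {

    /**
     * @param parameter           runtime value of the annotated method parameter
     * @param resolver            stand-in for a custom resolver bean, or null if none is configured
     * @param expression          expression text, or null/empty if none is given
     * @param expressionEvaluator stand-in for expression resolution (SPEL by default in Sleuth)
     */
    static String resolve(Object parameter,
                          Function<Object, String> resolver,
                          String expression,
                          BiFunction<String, Object, String> expressionEvaluator) {
        // 1. A custom resolver wins when one is provided.
        if (resolver != null) {
            return resolver.apply(parameter);
        }
        // 2. Otherwise evaluate the expression, if there is one.
        if (expression != null && !expression.isEmpty()) {
            return expressionEvaluator.apply(expression, parameter);
        }
        // 3. Fall back to the string form of the parameter (assumption: null becomes "null").
        return String.valueOf(parameter);
    }

    public static void main(String[] args) {
        // No resolver and no expression: the parameter's string form is used.
        System.out.println(resolve("test", null, "", (expr, value) -> expr));
    }
}
```

With no resolver and an empty expression, the sketch falls through to the third branch, which mirrors the last item of the list.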

The value of the tag for the following method will be computed by an implementation of the TagValueResolver interface. Its class name has to be passed as the value of the resolver attribute.

Having such an annotated method:

@NewSpan public void getAnnotationForTagValueResolver(@SpanTag(key = "test", resolver = TagValueResolver.class) String test) { }

and such a TagValueResolver bean implementation

@Bean(name = "myCustomTagValueResolver") public TagValueResolver tagValueResolver() { return parameter -> "Value from myCustomTagValueResolver"; }

Will lead to setting of a tag value equal to Value from myCustomTagValueResolver.

Having such an annotated method:

@NewSpan public void getAnnotationForTagValueExpression(@SpanTag(key = "test", expression = "'hello' + ' characters'") String test) { }

and no custom implementation of a TagValueExpressionResolver will lead to evaluation of the SPEL expression, and a tag with value hello characters will be set on the span.

If you want to use some other expression resolution mechanism, you can create your own implementation of the bean.

Thanks to the SpanInjector and SpanExtractor you can customize the way spans are created and propagated.

There are currently two built-in ways to pass tracing information between processes:

- via Spring Integration

- via HTTP

Span ids are extracted from Zipkin-compatible (B3) headers (either Message or HTTP headers), to start or join an existing trace. Trace information is injected into any outbound requests so the next hop can extract it.

By default, the tracing context is encoded in the b3 header, which uses the traceId-spanId-sampled notation (e.g. 0000000000000005-0000000000000004-1). For backward compatibility, if the b3 header is not present, we also check whether X-B3 entries are present and retrieve the tracing context from them, e.g. (X-B3-TraceId: 0000000000000005, X-B3-SpanId: 0000000000000004, X-B3-Sampled: 1).
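To make the two header layouts concrete, here is a minimal sketch in plain Java that reads the single b3 value first and falls back to the X-B3 entries. B3Sketch and its Context holder are hypothetical names used for illustration; the sketch assumes exact header names, 64-bit ids, and only the three-part b3 form shown above, so it is not how Sleuth itself performs extraction.

```java
import java.util.HashMap;
import java.util.Map;

/** Illustrative sketch of B3 context extraction; not Sleuth's implementation. */
final class B3Sketch {

    /** Hypothetical holder for the values carried by the headers. */
    static final class Context {
        final long traceId;
        final long spanId;
        final boolean sampled;

        Context(long traceId, long spanId, boolean sampled) {
            this.traceId = traceId;
            this.spanId = spanId;
            this.sampled = sampled;
        }
    }

    /** Returns the extracted context, or null when no tracing headers are present. */
    static Context extract(Map<String, String> headers) {
        String b3 = headers.get("b3");
        if (b3 != null) {
            // Single-header form: traceId-spanId-sampled
            String[] parts = b3.split("-");
            if (parts.length < 3) {
                throw new IllegalArgumentException("Unsupported b3 value: " + b3);
            }
            return new Context(hexToId(parts[0]), hexToId(parts[1]), "1".equals(parts[2]));
        }
        // Fallback: the X-B3 entries
        String traceId = headers.get("X-B3-TraceId");
        String spanId = headers.get("X-B3-SpanId");
        if (traceId == null || spanId == null) {
            return null;
        }
        return new Context(hexToId(traceId), hexToId(spanId), "1".equals(headers.get("X-B3-Sampled")));
    }

    /** Parses a 64-bit id written as up to 16 hex characters. */
    static long hexToId(String hex) {
        return Long.parseUnsignedLong(hex, 16);
    }

    public static void main(String[] args) {
        Map<String, String> headers = new HashMap<>();
        headers.put("b3", "0000000000000005-0000000000000004-1");
        Context context = extract(headers);
        // prints "5 4 true"
        System.out.println(context.traceId + " " + context.spanId + " " + context.sampled);
    }
}
```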

The key change in comparison to the previous versions of Sleuth is that Sleuth implements OpenTracing’s TextMap notion. In Sleuth it’s called SpanTextMap. The idea is that any means of communication (e.g. message, HTTP request, etc.) can be abstracted via a SpanTextMap. This abstraction defines how one can insert data into the carrier and how to retrieve it from there. Thanks to this, if you want to instrument a new HTTP library that uses a FooRequest as a means of sending HTTP requests, you have to create an implementation of a SpanTextMap that delegates calls to FooRequest in terms of retrieval and insertion of HTTP headers.
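The delegation idea can be sketched without any Sleuth dependency. In the block below, TextMapSketch is a local stand-in for the carrier abstraction (an iterable of entries plus a put method), and FooRequest with its setHeader and getHeaders methods is a hypothetical request type; the adapter shape, not the names, is the point.

```java
import java.util.Iterator;
import java.util.LinkedHashMap;
import java.util.Map;

/** Stand-in for the carrier abstraction: iterate over entries, put new ones. */
interface TextMapSketch extends Iterable<Map.Entry<String, String>> {
    void put(String key, String value);
}

/** Hypothetical request type of the HTTP library being instrumented. */
final class FooRequest {
    private final Map<String, String> headers = new LinkedHashMap<>();

    void setHeader(String name, String value) {
        this.headers.put(name, value);
    }

    Map<String, String> getHeaders() {
        return this.headers;
    }
}

/** Adapter that delegates header reads and writes to a FooRequest. */
final class FooRequestTextMap implements TextMapSketch {
    private final FooRequest delegate;

    FooRequestTextMap(FooRequest delegate) {
        this.delegate = delegate;
    }

    @Override
    public Iterator<Map.Entry<String, String>> iterator() {
        // Retrieval: expose the request headers as carrier entries
        return this.delegate.getHeaders().entrySet().iterator();
    }

    @Override
    public void put(String key, String value) {
        // Insertion: write the tracing entry as a request header
        this.delegate.setHeader(key, value);
    }
}

final class FooRequestTextMapDemo {
    public static void main(String[] args) {
        FooRequest request = new FooRequest();
        TextMapSketch carrier = new FooRequestTextMap(request);
        carrier.put("X-B3-TraceId", "0000000000000005");
        for (Map.Entry<String, String> entry : carrier) {
            System.out.println(entry.getKey() + "=" + entry.getValue());
        }
    }
}
```

Because the injector and extractor only see the carrier, the same tracing logic can be reused for any transport that has such an adapter.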

For Spring Integration there are 2 interfaces responsible for extracting a Span from a Message and injecting it into one. These are:

- MessagingSpanTextMapExtractor

- MessagingSpanTextMapInjector

You can override them by providing your own implementation.

For HTTP there are 2 interfaces responsible for extracting a Span from an HTTP request and injecting it into one. These are:

- HttpSpanExtractor

- HttpSpanInjector

You can override them by providing your own implementation.

Let’s assume that instead of the standard Zipkin-compatible tracing HTTP header names you have:

- for trace id - correlationId

- for span id - mySpanId

This is an example of a custom HttpSpanExtractor and HttpSpanInjector:

    static class CustomHttpSpanExtractor implements HttpSpanExtractor {

        @Override
        public Span joinTrace(SpanTextMap carrier) {
            Map<String, String> map = TextMapUtil.asMap(carrier);
            long traceId = Span.hexToId(map.get("correlationid"));
            long spanId = Span.hexToId(map.get("myspanid"));
            // extract all necessary headers
            Span.SpanBuilder builder = Span.builder().traceId(traceId).spanId(spanId);
            // build rest of the Span
            return builder.build();
        }
    }

    static class CustomHttpSpanInjector implements HttpSpanInjector {

        @Override
        public void inject(Span span, SpanTextMap carrier) {
            carrier.put("correlationId", span.traceIdString());
            carrier.put("mySpanId", Span.idToHex(span.getSpanId()));
        }
    }

And you could register them like this:

@Bean HttpSpanInjector customHttpSpanInjector() { return new CustomHttpSpanInjector(); } @Bean HttpSpanExtractor customHttpSpanExtractor() { return new CustomHttpSpanExtractor(); }

Spring Cloud Sleuth does not add trace/span related headers to the HTTP response for security reasons. If you need the headers, you can add a custom SpanInjector that injects the headers into the HTTP response, together with a Servlet filter that makes use of it, in the following way:

static class CustomHttpServletResponseSpanInjector extends ZipkinHttpSpanInjector { @Override public void inject(Span span, SpanTextMap carrier) { super.inject(span, carrier); carrier.put(Span.TRACE_ID_NAME, span.traceIdString()); carrier.put(Span.SPAN_ID_NAME, Span.idToHex(span.getSpanId())); } } static class HttpResponseInjectingTraceFilter extends GenericFilterBean { private final Tracer tracer; private final HttpSpanInjector spanInjector; public HttpResponseInjectingTraceFilter(Tracer tracer, HttpSpanInjector spanInjector) { this.tracer = tracer; this.spanInjector = spanInjector; } @Override public void doFilter(ServletRequest request, ServletResponse servletResponse, FilterChain filterChain) throws IOException, ServletException { HttpServletResponse response = (HttpServletResponse) servletResponse; Span currentSpan = this.tracer.getCurrentSpan(); this.spanInjector.inject(currentSpan, new HttpServletResponseTextMap(response)); filterChain.doFilter(request, response); } class HttpServletResponseTextMap implements SpanTextMap { private final HttpServletResponse delegate; HttpServletResponseTextMap(HttpServletResponse delegate) { this.delegate = delegate; } @Override public Iterator<Map.Entry<String, String>> iterator() { Map<String, String> map = new HashMap<>(); for (String header : this.delegate.getHeaderNames()) { map.put(header, this.delegate.getHeader(header)); } return map.entrySet().iterator(); } @Override public void put(String key, String value) { this.delegate.addHeader(key, value); } } }

And you could register them like this:

@Bean HttpSpanInjector customHttpServletResponseSpanInjector() { return new CustomHttpServletResponseSpanInjector(); } @Bean HttpResponseInjectingTraceFilter responseInjectingTraceFilter(Tracer tracer) { return new HttpResponseInjectingTraceFilter(tracer, customHttpServletResponseSpanInjector()); }

You can also modify the behaviour of the TraceFilter, the component that is responsible for processing the incoming HTTP request and adding tags based on the HTTP response. You can customize the tags, or modify the response headers, by registering your own instance of the TraceFilter bean.

In the following example we register the TraceFilter bean and add the ZIPKIN-TRACE-ID response header containing the current Span’s trace id. We also add to the Span a tag with key custom and value tag.

    @Bean
    TraceFilter myTraceFilter(BeanFactory beanFactory, final Tracer tracer) {
        return new TraceFilter(beanFactory) {
            @Override
            protected void addResponseTags(HttpServletResponse response, Throwable e) {
                // execute the default behaviour
                super.addResponseTags(response, e);
                // for readability we're returning trace id in a hex form
                response.addHeader("ZIPKIN-TRACE-ID", Span.idToHex(tracer.getCurrentSpan().getTraceId()));
                // we can also add some custom tags
                tracer.addTag("custom", "tag");
            }
        };
    }

To change the order of TraceFilter registration, please set the spring.sleuth.web.filter-order property.

Sometimes you want to create a manual Span that will wrap a call to an external service which is not instrumented.

What you can do is to create a span with the peer.service tag that will contain a value of the service that you want to call.

Below you can see an example of a call to Redis that is wrapped in such a span.

    org.springframework.cloud.sleuth.Span newSpan = tracer.createSpan("redis");
    try {
        newSpan.tag("redis.op", "get");
        newSpan.tag("lc", "redis");
        newSpan.logEvent(org.springframework.cloud.sleuth.Span.CLIENT_SEND);
        // call redis service e.g
        // return (SomeObj) redisTemplate.opsForHash().get("MYHASH", someObjKey);
    } finally {
        newSpan.tag("peer.service", "redisService");
        newSpan.tag("peer.ipv4", "1.2.3.4");
        newSpan.tag("peer.port", "1234");
        newSpan.logEvent(org.springframework.cloud.sleuth.Span.CLIENT_RECV);
        tracer.close(newSpan);
    }

![[Important]](images/important.png) | Important |

|---|---|

Remember not to add both |

By default Sleuth assumes that when you send a span to Zipkin, you want the span’s service name to be equal to the spring.application.name value. That’s not always the case though. There are situations in which you want to explicitly provide a different service name for all spans coming from your application. To achieve that, pass the following property to your application to override that value (example for foo service name):

spring.zipkin.service.name: foo

Before reporting spans to, e.g., Zipkin, you may want to modify them in some way. You can achieve that by using the SpanAdjuster interface.

Example of usage:

In Sleuth we’re generating spans with a fixed name. Some users want to modify the name depending on values of tags. An implementation of the SpanAdjuster interface can be used to alter that name. Example:

@Bean SpanAdjuster customSpanAdjuster() { return span -> span.toBuilder().name(scrub(span.getName())).build(); }

This changes the name of the reported span just before it gets sent to Zipkin.

![[Important]](images/important.png) | Important |

|---|---|

Your |

In order to define the host that corresponds to a particular span, we need to resolve the host name and port. The default approach is to take them from server properties. If those are not set for some reason, we try to retrieve the host name from the network interfaces.

If you have the discovery client enabled and prefer to retrieve the host address from the registered instance in a service registry, then you have to set the following property (it’s applicable for both HTTP and Stream based span reporting).

spring.zipkin.locator.discovery.enabled: true

![[Important]](images/important.png) | Important |

|---|---|

|

By default, if you add spring-cloud-starter-zipkin as a dependency to your project, when the span is closed, it will be sent to Zipkin over HTTP. The communication is asynchronous. You can configure the URL by setting the spring.zipkin.baseUrl property as follows:

spring.zipkin.baseUrl: https://192.168.99.100:9411/

If you want to find Zipkin via service discovery, it’s enough to pass Zipkin’s service id inside the URL. If you want to disable this feature, set spring.zipkin.discoveryClientEnabled to false.

Example for the zipkinserver service id:

spring.zipkin.baseUrl: http://zipkinserver/

When this Discovery Client feature is enabled, Sleuth uses LoadBalancerClient to find the URL of the Zipkin Server. This means that you can set up the load balancing configuration, e.g. via Ribbon.

    zipkinserver:
      ribbon:
        ListOfServers: host1,host2

If you have web, rabbit or kafka together on the classpath, you might need to pick the means by which you would like to send spans to Zipkin. To do that, set the spring.zipkin.sender.type property to web, rabbit or kafka.

Example for web:

spring.zipkin.sender.type: web

To customize the RestTemplate that sends spans to Zipkin via HTTP, you can register the ZipkinRestTemplateCustomizer bean.

    @Configuration
    class MyConfig {
        @Bean
        ZipkinRestTemplateCustomizer myCustomizer() {
            return new ZipkinRestTemplateCustomizer() {
                @Override
                public void customize(RestTemplate restTemplate) {
                    // customize the RestTemplate
                }
            };
        }
    }

If, however, you would like to control the full process of creating the RestTemplate object, you will have to create a bean of zipkin2.reporter.Sender type.

@Bean Sender myRestTemplateSender(ZipkinProperties zipkin, ZipkinRestTemplateCustomizer zipkinRestTemplateCustomizer) { RestTemplate restTemplate = mySuperCustomRestTemplate(); zipkinRestTemplateCustomizer.customize(restTemplate); return myCustomSender(zipkin, restTemplate); }

You can accumulate and send span data over Spring Cloud Stream by including the spring-cloud-sleuth-stream jar as a dependency, and adding a Channel Binder implementation (e.g. spring-cloud-starter-stream-rabbit for RabbitMQ or spring-cloud-starter-stream-kafka for Kafka). This will automatically turn your app into a producer of messages with payload type Spans. The channel to which the spans will be sent is called sleuth.

![[Important]](images/important.png) | Important |

|---|---|

|

There is a special convenience annotation for setting up a message consumer for the Span data and pushing it into a Zipkin SpanStore. This application

@SpringBootApplication @EnableZipkinStreamServer public class Consumer { public static void main(String[] args) { SpringApplication.run(Consumer.class, args); } }

will listen for the Span data on whatever transport you provide via a Spring Cloud Stream Binder (e.g. include spring-cloud-starter-stream-rabbit for RabbitMQ, and similar starters exist for Redis and Kafka). If you add the following UI dependency

<groupId>io.zipkin.java</groupId> <artifactId>zipkin-autoconfigure-ui</artifactId>

then your app becomes a Zipkin server, which hosts the UI and API on port 9411.

The default SpanStore is in-memory (good for demos and getting started quickly). For a more robust solution you can add MySQL and spring-boot-starter-jdbc to your classpath and enable the JDBC SpanStore via configuration, e.g.:

    spring:
      rabbitmq:
        host: ${RABBIT_HOST:localhost}
      datasource:
        schema: classpath:/mysql.sql
        url: jdbc:mysql://${MYSQL_HOST:localhost}/test
        username: root
        password: root
    # Switch this on to create the schema on startup:
        initialize: true
        continueOnError: true
      sleuth:
        enabled: false
    zipkin:
      storage:
        type: mysql

![[Note]](images/note.png) | Note |

|---|---|

The |

A custom consumer can also easily be implemented using spring-cloud-sleuth-stream and binding to the SleuthSink. Example:

    @EnableBinding(SleuthSink.class)
    @SpringBootApplication(exclude = SleuthStreamAutoConfiguration.class)
    @MessageEndpoint
    public class Consumer {

        @ServiceActivator(inputChannel = SleuthSink.INPUT)
        public void sink(Spans input) throws Exception {
            // ... process spans
        }
    }

![[Note]](images/note.png) | Note |

|---|---|

the sample consumer application above explicitly excludes

|

In order to customize the polling mechanism you can create a bean of PollerMetadata type with name equal to StreamSpanReporter.POLLER. Here you can find an example of such a configuration.

@Configuration public static class CustomPollerConfiguration { @Bean(name = StreamSpanReporter.POLLER) PollerMetadata customPoller() { PollerMetadata poller = new PollerMetadata(); poller.setMaxMessagesPerPoll(500); poller.setTrigger(new PeriodicTrigger(5000L)); return poller; } }

Currently Spring Cloud Sleuth registers very simple metrics related to spans. It uses Spring Boot’s metrics support to count accepted and dropped spans. Each time a span is sent to Zipkin, the number of accepted spans increases. If an error occurs, the number of dropped spans increases.

If you’re wrapping your logic in Runnable or Callable it’s enough to wrap those classes in their Sleuth representative.

Example for Runnable:

    Runnable runnable = new Runnable() {
        @Override
        public void run() {
            // do some work
        }

        @Override
        public String toString() {
            return "spanNameFromToStringMethod";
        }
    };
    // Manual `TraceRunnable` creation with explicit "calculateTax" Span name
    Runnable traceRunnable = new TraceRunnable(tracer, spanNamer, runnable, "calculateTax");
    // Wrapping `Runnable` with `Tracer`. The Span name will be taken either from the
    // `@SpanName` annotation or from `toString` method
    Runnable traceRunnableFromTracer = tracer.wrap(runnable);

Example for Callable:

    Callable<String> callable = new Callable<String>() {
        @Override
        public String call() throws Exception {
            return someLogic();
        }

        @Override
        public String toString() {
            return "spanNameFromToStringMethod";
        }
    };
    // Manual `TraceCallable` creation with explicit "calculateTax" Span name
    Callable<String> traceCallable = new TraceCallable<>(tracer, spanNamer, callable, "calculateTax");
    // Wrapping `Callable` with `Tracer`. The Span name will be taken either from the
    // `@SpanName` annotation or from `toString` method
    Callable<String> traceCallableFromTracer = tracer.wrap(callable);

That way you will ensure that a new Span is created and closed for each execution.

We’re registering a custom HystrixConcurrencyStrategy that wraps all Callable instances into their Sleuth representative, the TraceCallable. The strategy either starts or continues a span depending on whether tracing was already in progress before the Hystrix command was called. To disable the custom Hystrix Concurrency Strategy, set spring.sleuth.hystrix.strategy.enabled to false.

Assuming that you have the following HystrixCommand:

HystrixCommand<String> hystrixCommand = new HystrixCommand<String>(setter) { @Override protected String run() throws Exception { return someLogic(); } };

In order to pass the tracing information you have to wrap the same logic in the Sleuth version of the HystrixCommand, which is the TraceCommand:

TraceCommand<String> traceCommand = new TraceCommand<String>(tracer, traceKeys, setter) { @Override public String doRun() throws Exception { return someLogic(); } };

We’re registering a custom RxJavaSchedulersHook that wraps all Action0 instances into their Sleuth representative, the TraceAction. The hook either starts or continues a span depending on whether tracing was already in progress before the Action was scheduled. To disable the custom RxJavaSchedulersHook, set spring.sleuth.rxjava.schedulers.hook.enabled to false.

You can define a list of regular expressions for thread names for which you don’t want a Span to be created. Just provide a comma-separated list of regular expressions in the spring.sleuth.rxjava.schedulers.ignoredthreads property.

Features from this section can be disabled by providing the spring.sleuth.web.enabled property with value equal to false.

Via the TraceFilter all sampled incoming requests result in creation of a Span. That Span’s name is http: + the path to which the request was sent. E.g. if the request was sent to /foo/bar then the name will be http:/foo/bar. You can configure which URIs you would like to skip via the spring.sleuth.web.skipPattern property. If you have ManagementServerProperties on classpath then its value of contextPath gets appended to the provided skip pattern.
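The naming and skipping rules above can be illustrated with a small plain-Java sketch. SkipPatternSketch and the pattern /health|/info.* are hypothetical, and the sketch assumes the whole path must match the pattern; it shows the idea only and is not the TraceFilter code.

```java
import java.util.regex.Pattern;

/** Illustrative sketch of span naming with a skip pattern; not Sleuth's TraceFilter. */
final class SkipPatternSketch {
    private final Pattern skipPattern;

    SkipPatternSketch(String skipPatternRegex) {
        this.skipPattern = Pattern.compile(skipPatternRegex);
    }

    /** Returns the span name for the path, or null when the path is skipped. */
    String spanNameFor(String path) {
        // Assumption: the whole path has to match the skip pattern
        if (this.skipPattern.matcher(path).matches()) {
            return null;
        }
        return "http:" + path;
    }

    public static void main(String[] args) {
        SkipPatternSketch sketch = new SkipPatternSketch("/health|/info.*");
        System.out.println(sketch.spanNameFor("/foo/bar")); // prints "http:/foo/bar"
        System.out.println(sketch.spanNameFor("/health"));  // prints "null" (skipped)
    }
}
```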

Since we want the span names to be precise, we’re using a TraceHandlerInterceptor that either wraps an existing HandlerInterceptor or is added directly to the list of existing HandlerInterceptors. The TraceHandlerInterceptor adds a special request attribute to the given HttpServletRequest. If the TraceFilter doesn’t see this attribute set, it will create a "fallback" span, which is an additional span created on the server side so that the trace is presented properly in the UI. Seeing that most likely signifies that there is a missing instrumentation. In that case please file an issue in Spring Cloud Sleuth.

We’re injecting a RestTemplate interceptor that ensures that all the tracing information is passed to the requests. Each time a call is made, a new Span is created. It gets closed upon receiving the response. In order to block the synchronous RestTemplate features, set spring.sleuth.web.client.enabled to false.

![[Important]](images/important.png) | Important |

|---|---|

You have to register |

![[Important]](images/important.png) | Important |

|---|---|

A traced version of an |

Custom instrumentation is set to create and close Spans upon sending and receiving requests. You can customize the ClientHttpRequestFactory and the AsyncClientHttpRequestFactory by registering your beans. Remember to use tracing compatible implementations (e.g. don’t forget to wrap ThreadPoolTaskScheduler in a TraceAsyncListenableTaskExecutor). Example of custom request factories:

@EnableAutoConfiguration @Configuration public static class TestConfiguration { @Bean ClientHttpRequestFactory mySyncClientFactory() { return new MySyncClientHttpRequestFactory(); } @Bean AsyncClientHttpRequestFactory myAsyncClientFactory() { return new MyAsyncClientHttpRequestFactory(); } }

To block the AsyncRestTemplate features, set spring.sleuth.web.async.client.enabled to false. To disable creation of the default TraceAsyncClientHttpRequestFactoryWrapper, set spring.sleuth.web.async.client.factory.enabled to false. If you don’t want to create the AsyncRestTemplate at all, set spring.sleuth.web.async.client.template.enabled to false.

Sometimes you need to use multiple implementations of the asynchronous RestTemplate. In the following snippet you can see an example of how to set up such a custom AsyncRestTemplate.

    @Configuration
    @EnableAutoConfiguration
    static class Config {

        @Autowired Tracer tracer;
        @Autowired HttpTraceKeysInjector httpTraceKeysInjector;
        @Autowired HttpSpanInjector spanInjector;

        @Bean(name = "customAsyncRestTemplate")
        public AsyncRestTemplate traceAsyncRestTemplate(
                @Qualifier("customHttpRequestFactoryWrapper") TraceAsyncClientHttpRequestFactoryWrapper wrapper,
                ErrorParser errorParser) {
            return new TraceAsyncRestTemplate(wrapper, this.tracer, errorParser);
        }

        @Bean(name = "customHttpRequestFactoryWrapper")
        public TraceAsyncClientHttpRequestFactoryWrapper traceAsyncClientHttpRequestFactory() {
            return new TraceAsyncClientHttpRequestFactoryWrapper(this.tracer, this.spanInjector,
                    asyncClientFactory(), clientHttpRequestFactory(), this.httpTraceKeysInjector);
        }

        private ClientHttpRequestFactory clientHttpRequestFactory() {
            ClientHttpRequestFactory clientHttpRequestFactory = new CustomClientHttpRequestFactory();
            // CUSTOMIZE HERE
            return clientHttpRequestFactory;
        }

        private AsyncClientHttpRequestFactory asyncClientFactory() {
            AsyncClientHttpRequestFactory factory = new CustomAsyncClientHttpRequestFactory();
            // CUSTOMIZE HERE
            return factory;
        }
    }

If you’re using the Traverson library, it’s enough for you to inject a RestTemplate as a bean into your Traverson object. Since RestTemplate is already intercepted, you will get full support of tracing in your client. Below you can find pseudo code showing how to do that:

@Autowired RestTemplate restTemplate; Traverson traverson = new Traverson(URI.create("http://some/address"), MediaType.APPLICATION_JSON, MediaType.APPLICATION_JSON_UTF8).setRestOperations(restTemplate); // use Traverson

By default Spring Cloud Sleuth provides integration with Feign via the TraceFeignClientAutoConfiguration. You can disable it entirely by setting spring.sleuth.feign.enabled to false. If you do so, then no Feign related instrumentation will take place.

Part of Feign instrumentation is done via a FeignBeanPostProcessor. You can disable it by setting spring.sleuth.feign.processor.enabled to false. If you do so, Spring Cloud Sleuth will not instrument any of your custom Feign components. All the default instrumentation, however, will still be there.

In Spring Cloud Sleuth we’re instrumenting async related components so that the tracing information is passed between threads. You can disable this behaviour by setting the value of spring.sleuth.async.enabled to false.
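What passing tracing information between threads means can be shown with a minimal, dependency-free sketch. ContextPropagationSketch, its CURRENT_TRACE holder, and the wrap method are hypothetical names; the sketch captures a value on the submitting thread and restores it around the task, which is the general wrapping technique and not Sleuth's own code.

```java
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;

/** Illustrative sketch of carrying a trace id across threads; not Sleuth's implementation. */
final class ContextPropagationSketch {

    /** Hypothetical per-thread holder for the current trace id. */
    static final ThreadLocal<String> CURRENT_TRACE = new ThreadLocal<>();

    /** Wraps a task so that it runs with the trace id of the thread that called wrap. */
    static Runnable wrap(Runnable delegate) {
        final String captured = CURRENT_TRACE.get(); // read on the submitting thread
        return () -> {
            String previous = CURRENT_TRACE.get();
            CURRENT_TRACE.set(captured);
            try {
                delegate.run();
            } finally {
                // Restore whatever the executing thread had before
                if (previous == null) {
                    CURRENT_TRACE.remove();
                } else {
                    CURRENT_TRACE.set(previous);
                }
            }
        };
    }

    public static void main(String[] args) throws Exception {
        ExecutorService executor = Executors.newSingleThreadExecutor();
        try {
            CURRENT_TRACE.set("0000000000000005");
            Future<?> done = executor.submit(wrap(() ->
                    System.out.println("trace on worker: " + CURRENT_TRACE.get())));
            done.get();
        } finally {
            executor.shutdown();
        }
    }
}
```

Without the wrapper the worker thread would see no trace id, because a ThreadLocal value is not visible to other threads.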

If you annotate your method with @Async then we’ll automatically create a new Span with the following characteristics:

- if the method is annotated with @SpanName then the value of the annotation will be the Span’s name

- if the method is not annotated with @SpanName the Span name will be the annotated method name

- the Span will be tagged with that method’s class name and the method name too

In Spring Cloud Sleuth we’re instrumenting scheduled method execution so that the tracing information is passed between threads. You can disable this behaviour by setting the value of spring.sleuth.scheduled.enabled to false.

If you annotate your method with @Scheduled then we’ll automatically create a new Span with the following characteristics:

- the Span name will be the annotated method name

- the Span will be tagged with that method’s class name and the method name too

If you want to skip Span creation for some @Scheduled annotated classes, you can set spring.sleuth.scheduled.skipPattern to a regular expression that matches the fully qualified name of the @Scheduled annotated class.

![[Tip]](images/tip.png) | Tip |

|---|---|

If you are using |

We’re providing LazyTraceExecutor, TraceableExecutorService and TraceableScheduledExecutorService. Those implementations create Spans each time a new task is submitted, invoked or scheduled.

Here you can see an example of how to pass tracing information with TraceableExecutorService when working with CompletableFuture:

    CompletableFuture<Long> completableFuture = CompletableFuture.supplyAsync(() -> {
        // perform some logic
        return 1_000_000L;
    }, new TraceableExecutorService(executorService,
            // 'calculateTax' explicitly names the span - this param is optional
            tracer, traceKeys, spanNamer, "calculateTax"));

![[Important]](images/important.png) | Important |

|---|---|

Sleuth doesn’t work with |

Sometimes you need to set up a custom instance of the AsyncExecutor. In the following snippet you can see an example of how to set up such a custom Executor.

    @Configuration
    @EnableAutoConfiguration
    @EnableAsync
    static class CustomExecutorConfig extends AsyncConfigurerSupport {

        @Autowired BeanFactory beanFactory;

        @Override
        public Executor getAsyncExecutor() {
            ThreadPoolTaskExecutor executor = new ThreadPoolTaskExecutor();
            // CUSTOMIZE HERE
            executor.setCorePoolSize(7);
            executor.setMaxPoolSize(42);
            executor.setQueueCapacity(11);
            executor.setThreadNamePrefix("MyExecutor-");
            // DON'T FORGET TO INITIALIZE
            executor.initialize();
            return new LazyTraceExecutor(this.beanFactory, executor);
        }
    }

Spring Cloud Sleuth integrates with Spring Integration. It creates spans for publish and subscribe events. To disable Spring Integration instrumentation, set spring.sleuth.integration.enabled to false.

You can set the spring.sleuth.integration.patterns property to explicitly provide the names of channels that you want to include for tracing. By default all channels are included.

![[Important]](images/important.png) | Important |

|---|---|

When using the |

We’re registering Zuul filters to propagate the tracing information (the request header is enriched with tracing data).

To disable Zuul support set the spring.sleuth.zuul.enabled property to false.

Sleuth works out of the box with Spring Cloud Function. Since functions might be short-lived, it’s best to make the Zipkin span reporting synchronous. Just define a Reporter<Span> bean as presented below:

    @Configuration
    class ReporterConfiguration {

        @Bean
        public Reporter<Span> reporter(
                SpanMetricReporter spanMetricReporter,
                ZipkinProperties zipkin,
                Sender sender
        ) {
            final AsyncReporter<Span> reporter = AsyncReporter.builder(sender)
                    .queuedMaxSpans(1000)
                    .messageTimeout(zipkin.getMessageTimeout(), TimeUnit.SECONDS)
                    .metrics(new ReporterMetricsAdapter(spanMetricReporter))
                    .build(zipkin.getEncoder());
            return new Reporter<Span>() {
                @Override
                public void report(Span span) {
                    reporter.report(span);
                    // make the reporter synchronous
                    reporter.flush();
                }
            };
        }
    }

You can find the running examples deployed in the Pivotal Web Services. Check them out in the following links: