Table of Contents

- 1. Introduction

- 2. Opinionated implementation

- 3. Project opinions

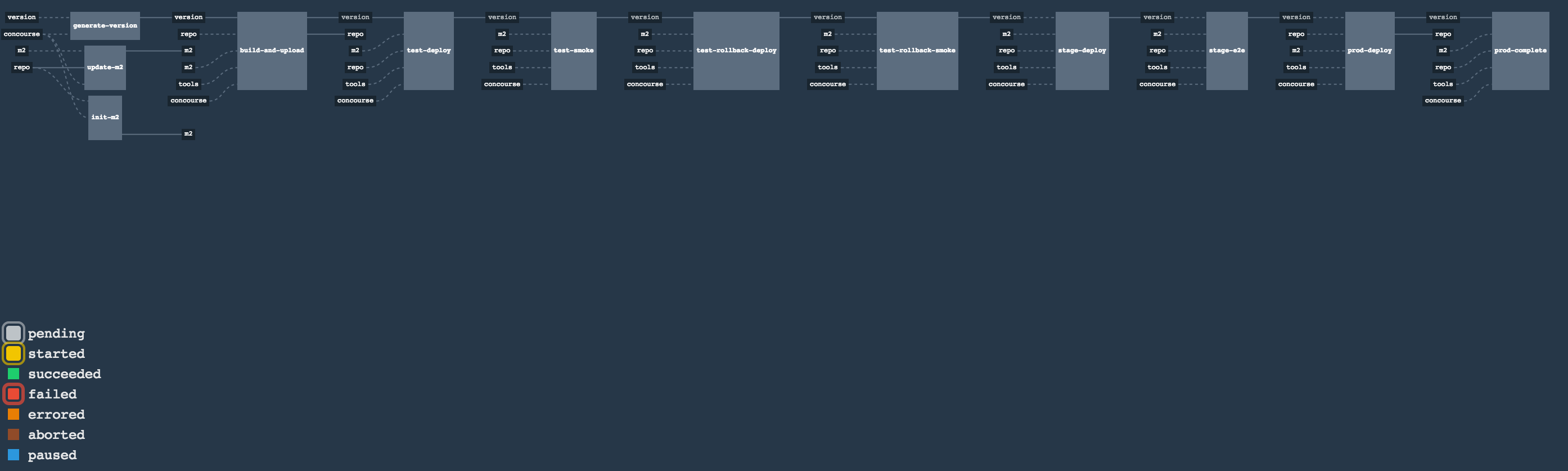

- 4. Concourse Pipeline (Cloud Foundry)

- 5. Concourse Pipeline (Kubernetes)

- 6. Concourse FAQ

- 6.1. Can I use the pipeline for some other repos?

- 6.2. Will this work for ANY project out of the box?

- 6.3. Can I modify this to reuse in my project?

- 6.4. I ran out of resources!! (PCF Dev)

- 6.5. The rollback step fails due to missing JAR ?!

- 6.6. Can I see the output of a job from the terminal?

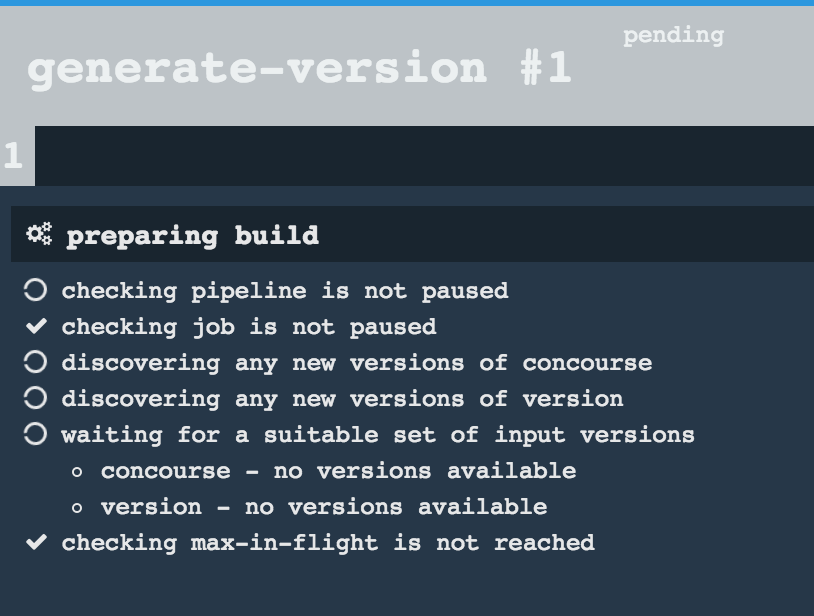

- 6.7. I clicked the job and it’s constantly pending…

- 6.8. The route is already in use (CF)

- 6.9. I’m unauthorized to deploy infrastructure jars

- 6.10. `version` resource is broken

- 7. Jenkins Pipeline (Common)

- 8. Jenkins Pipeline (Cloud Foundry)

- 9. Jenkins Pipeline (Kubernetes)

- 10. Jenkins FAQ

- 10.1. Pipeline version contains ${PIPELINE_VERSION}

- 10.2. Pipeline version is not passed to the build

- 10.3. The build times out with `pipeline.sh` info

- 10.4. Can I use the pipeline for some other repos?

- 10.5. Will this work for ANY project out of the box?

- 10.6. Can I modify this to reuse in my project?

- 10.7. The rollback step fails due to missing JAR ?!

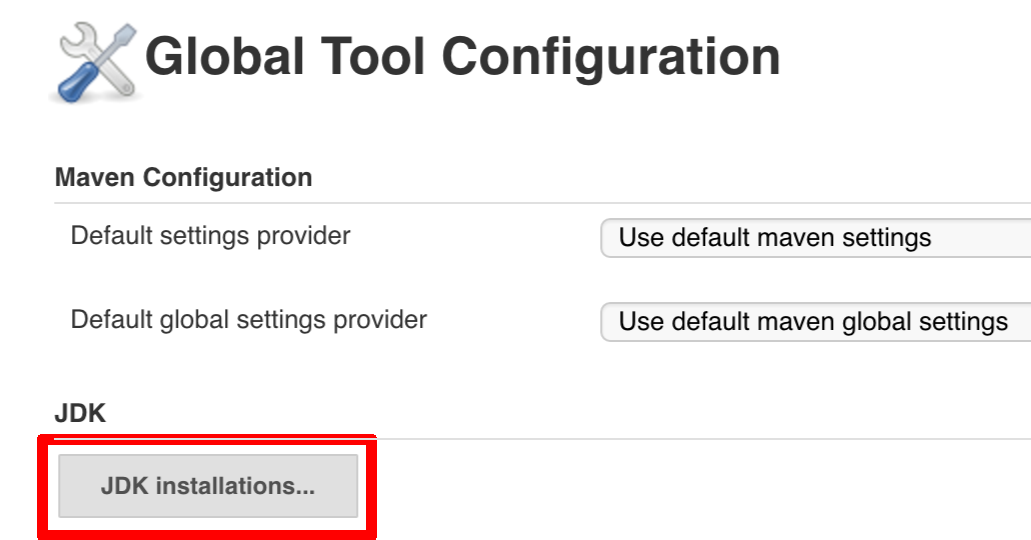

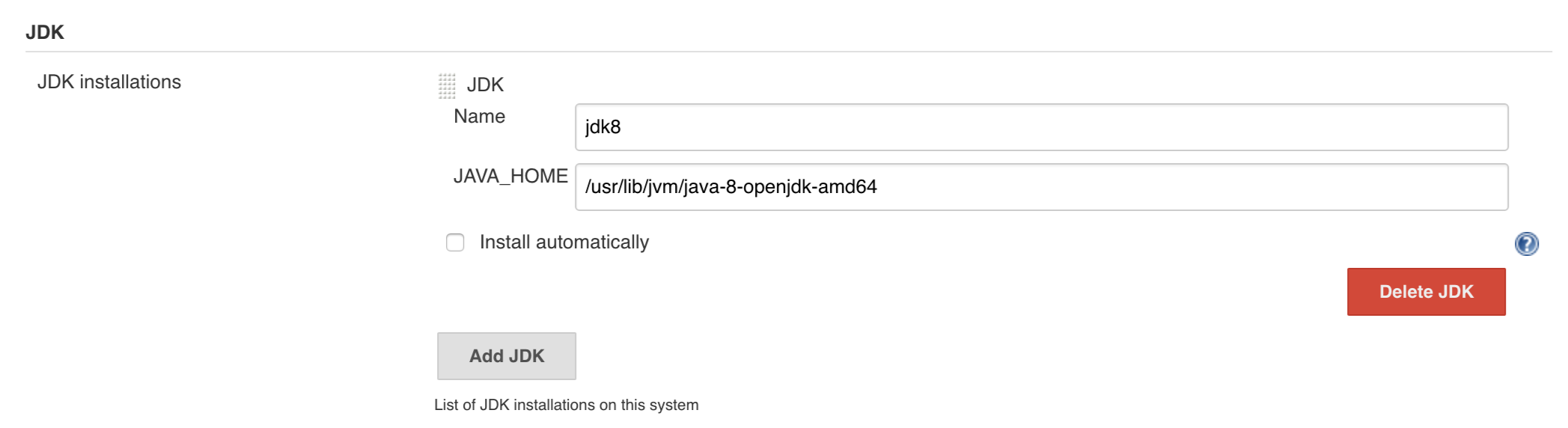

- 10.8. I want to provide a different JDK version

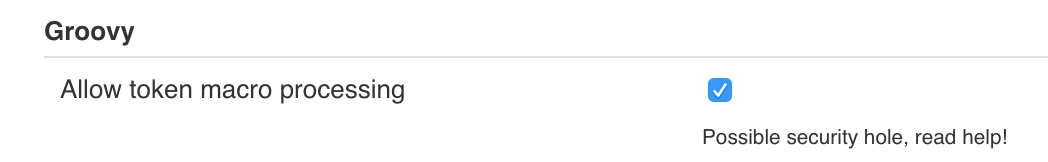

- 10.9. Enable Groovy Token Macro Processing

- 10.10. I want deployment to stage and prod be automatic

- 10.11. I don’t want to test API compatibility

- 10.12. I can’t tag the repo!

- 10.13. I’m unauthorized to deploy infrastructure jars

- 10.14. Signing Artifacts

- 10.15. Using SSH keys for git

- 10.16. Deploy to stage fails and doesn’t redeploy a service (Kubernetes)

- 10.17. I ran out of resources!! (Cloud Foundry)

- 10.18. Deploying to test / stage / prod fails - error finding space (Cloud Foundry)

- 10.19. The route is already in use (Cloud Foundry)

- 10.20. How to execute helper scripts against a real CF instance I’m logged into (Cloud Foundry)

- 11. Kubernetes setup

- 12. The demo setup (Cloud Foundry)

- 13. The demo setup (Kubernetes)

- 14. Step-by-step Cloud Foundry migration

- 14.1. Preview

- 14.2. Introduction

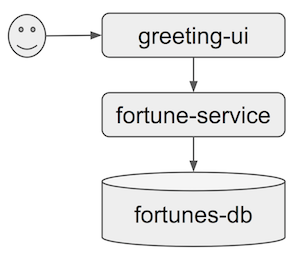

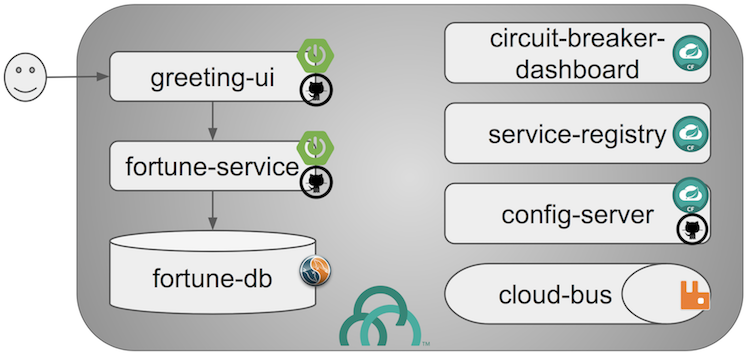

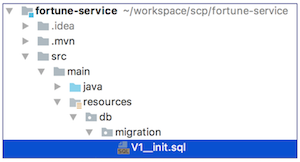

- 14.3. Sample application - initial state

- 14.4. Sample application - end state

- 14.5. Tutorial - toolset

- 14.6. Tutorial - overview

- 14.7. Tutorial - step-by-step

- 14.7.1. Prep: Before you begin

- 14.7.2. Stage 1: Scaffolding

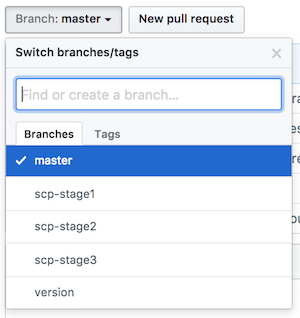

- 1.1 Create GitHub branches

- 1.2 Add Maven wrapper

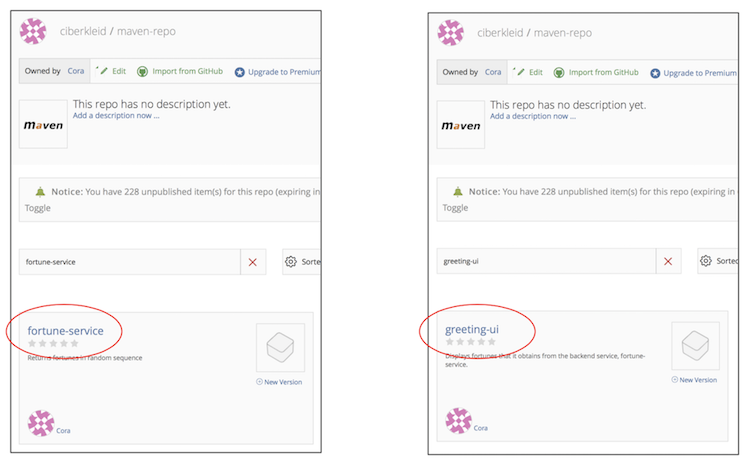

- 1.3 Create Bintray maven repo package

- 1.4 Configure distribution management using Bintray maven repo

- 1.5 Push changes to GitHub

- 1.6 Add Spring Cloud Pipelines credentials file

- 1.7 Set Concourse pipeline

- 1.8 Add Cloud Foundry manifest

- 1.9 Add Spring Cloud Pipelines manifest

- 1.10 Push changes to GitHub

- 1.11 Create Cloud Foundry Orgs/Spaces

- 1.12 Create Cloud Foundry stage and prod service instances

- 1.13 Update Spring Cloud Pipelines credentials file

- 1.14 Update Concourse pipeline with updated credentials files

- Stage 1 Recap & next steps

- 14.7.3. Stage 2: Tests

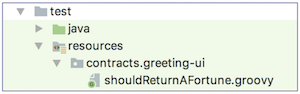

- 14.7.4. Stage 3: Contracts

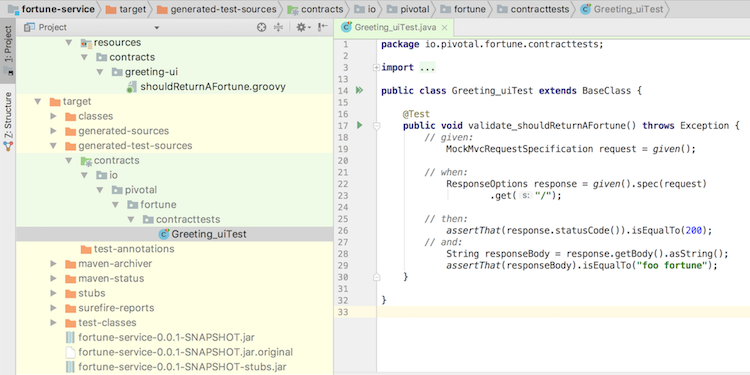

- 3.1 Create a contract

- 3.2 Create a base class for contract tests

- 3.3 Enable automated contract-based testing

- 3.4 Enable backward compatibility API check

- 3.5 Push changes to GitHub

- 3.6 Re-run the fortune-service pipeline

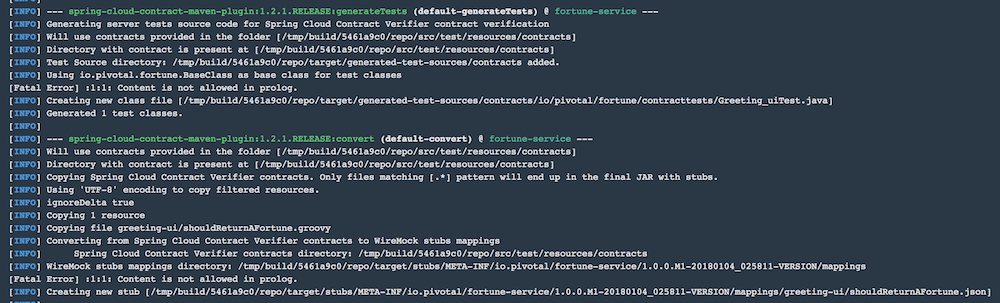

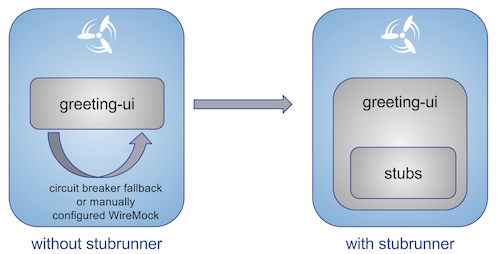

- 3.7 Enable stubs for integration tests

- 3.8 Enable stubs for smoke tests

- 3.9 Push changes to GitHub

- Stage 3 Recap

- 14.8. Conclusion

- 15. Building the project

- 16. Customizing the project

- 17. Releasing the project

Documentation Authors: Marcin Grzejszczak, Cora Iberkleid

Spring, Spring Boot and Spring Cloud are tools that allow developers to speed up the creation of new business features. It’s common knowledge, however, that a feature is only valuable once it’s in production. That’s why companies spend a lot of time and resources on building their own deployment pipelines.

This project tries to solve the following problems:

- Creation of a common deployment pipeline

- Propagation of good testing & deployment practices

- Speed up the time required to deploy a feature to production

A common way of running, configuring and deploying applications lowers support costs and time needed by new developers to blend in when they change projects.

In the following section we will describe in more depth the rationale behind the presented opinionated pipeline. We will go through each deployment step and describe it in detail.

![[Important]](images/important.png) | Important |

|---|---|

You don’t need to use all pieces of Spring Cloud Pipelines. You can (and should) gradually migrate your applications to use those pieces of Spring Cloud Pipelines that you think best suit your needs. |

    .
    ├── common
    ├── concourse
    ├── dist
    ├── docs
    ├── docs-sources
    └── jenkins

In the `common` folder you can find all the Bash scripts containing the pipeline logic. These scripts are reused by both Concourse and Jenkins pipelines.

In the `concourse` folder you can find all the necessary scripts and setup to run the Concourse demo.

In the `dist` folder you can find the packaged sources of the project. Since the package contains no tests or documentation it’s extremely small and can be used in the pipelines.

In the `docs` folder you have the whole generated documentation of the project.

In the `docs-sources` folder you have the sources required to generate the documentation.

In the `jenkins` folder you can find all the necessary scripts and setup to run the Jenkins demo.

This repository can be treated as a template for your pipeline. We provide an opinionated implementation that you can alter to suit your needs. The best way to use it for your production projects is to download the Spring Cloud Pipelines repository as a ZIP, then init a Git project there and modify it as you wish.

    $ # pass the branch (e.g. master) or a particular tag (e.g. v1.0.0.RELEASE)
    $ SC_PIPELINES_RELEASE=...
    $ curl -LOk https://github.com/spring-cloud/spring-cloud-pipelines/archive/${SC_PIPELINES_RELEASE}.zip
    $ unzip ${SC_PIPELINES_RELEASE}.zip
    $ cd spring-cloud-pipelines-${SC_PIPELINES_RELEASE}
    $ git init
    $ # modify the pipelines to suit your needs
    $ git add .
    $ git commit -m "Initial commit"
    $ git remote add origin ${YOUR_REPOSITORY_URL}
    $ git push origin master

You can also clone the repository if you would like to stay aligned with the changes in the upstream repository. To avoid frequent merge conflicts, we encourage you to use the `custom` folder hooks to override functions.
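The override idea can be sketched in a few lines of shell. This is only an illustration of the mechanism, not the project's actual loader: the function name `load_custom_hook`, the `build` function and the script name `build_and_upload.sh` are assumptions made for the example. A default function is defined first, and a script with the same name found in the custom folder is sourced afterwards, so any function it declares replaces the default.

```shell
# Default implementation shipped with the pipeline (illustrative only).
build() {
  echo "default build"
}

# Hypothetical hook loader: if <custom_dir>/<script_name> exists, source it so
# that the functions it defines replace the defaults declared above.
load_custom_hook() {
  custom_dir="$1"
  script_name="$2"
  if [ -f "${custom_dir}/${script_name}" ]; then
    . "${custom_dir}/${script_name}"
  fi
}

# Usage (assumed layout):
#   load_custom_hook custom build_and_upload.sh
#   build   # runs the overridden function if the custom script defined one
```

Because the upstream scripts stay untouched and your changes live in separate files, pulling upstream changes is less likely to produce merge conflicts.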

You can use Spring Cloud Pipelines to generate pipelines for all projects in your system. You can scan all your repositories (e.g. call the Stash / Github API and retrieve the list of repos) and then…

- For Jenkins, call the seed job and pass the `REPOS` parameter that would contain the list of repositories

- For Concourse, you’d have to call `fly` and set pipeline for every single repo
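For the Concourse case, the per-repository loop could look roughly like the sketch below. It only prints the `fly set-pipeline` commands instead of running them. The target name, the `pipeline.yml` and `credentials.yml` file names and the `app-url` variable are assumptions made for the example, so adjust them to your own setup.

```shell
# Print one `fly set-pipeline` command per repository URL (dry run).
# $1 is the fly target; the remaining arguments are repository URLs.
# pipeline.yml, credentials.yml and the app-url variable are assumed names.
fly_commands_for_repos() {
  target="$1"
  shift
  for repo_url in "$@"; do
    # Derive the pipeline name from the last path segment of the URL.
    name="$(basename "${repo_url}" .git)"
    echo "fly -t ${target} set-pipeline -n -p ${name} -c pipeline.yml -l credentials.yml -v app-url=${repo_url}"
  done
}

# Usage:
#   fly_commands_for_repos docker https://github.com/example/github-webhook.git
```

Printing the commands first makes it easy to review them before piping the output to a shell.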

You can use Spring Cloud Pipelines in such a way that each project contains its own pipeline definition in its code. Spring Cloud Pipelines clones the code with the pipeline definitions (the bash scripts), so the only piece of logic that needs to live in your application’s repository is what the pipeline should look like.

- For Jenkins, you’d have to either set up the `Jenkinsfile` or the jobs using Jenkins Job DSL plugin in your repo. Then in Jenkins, whenever you set up a new pipeline for a repo, you reference the pipeline definition in that repo.

- For Concourse, each project contains its own pipeline steps and it’s up to the project to set up the pipeline.

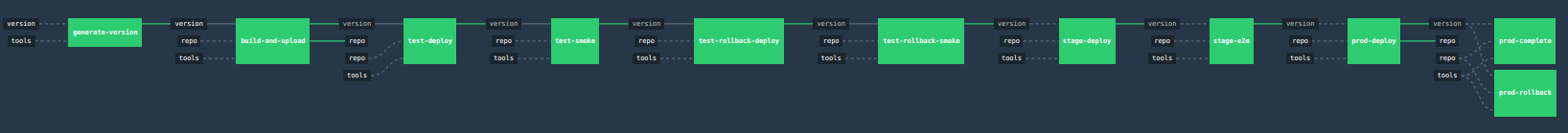

Let’s take a look at the flow of the opinionated pipeline

We’ll first describe the overall concept behind the flow and then we’ll split it into pieces and describe every piece independently.

So that we’re on the same page, let’s define some common vocabulary. We distinguish 4 typical environments in terms of running the pipeline.

- build environment is a machine where the building of the application takes place. It’s a CI / CD tool worker.

- test is an environment where you can deploy an application to test it. It doesn’t resemble production, and we can’t be sure of its state (which application is deployed there and in which version). It can be used by multiple teams at the same time.

- stage is an environment that does resemble production. Most likely applications are deployed there in versions that correspond to those deployed to production. Typically databases there are filled up with (obfuscated) production data. Most often this environment is a single, shared one between many teams. In other words in order to run some performance, user acceptance tests you have to block and wait until the environment is free.

- prod is a production environment where we want our tested applications to be deployed for our customers.

Unit tests - tests that are executed on the application during the build phase. No integrations with databases / HTTP server stubs etc. take place. Generally speaking your application should have plenty of these to have fast feedback if your features are working fine.

Integration tests - tests that are executed on the built application during the build phase. Integrations with in memory databases / HTTP server stubs take place. According to the test pyramid, in most cases you should not have too many tests of this kind.

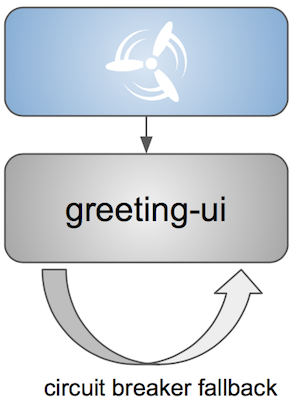

Smoke tests - tests that are executed on a deployed application. The concept of these tests is to check the crucial parts of your application are working properly. If you have 100 features in your application but you gain most money from e.g. 5 features then you could write smoke tests for those 5 features. As you can see we’re talking about smoke tests of an application, not of the whole system. In our understanding inside the opinionated pipeline, these tests are executed against an application that is surrounded with stubs.

End to end tests - tests that are executed on a system composed of multiple applications. The idea of these tests is to check if the tested feature works when the whole system is set up. Because it takes a lot of time, effort and resources to maintain such an environment, and because those tests are often unreliable (due to many different moving pieces like network, database etc.), you should have only a handful of those tests, covering only the critical parts of your business. Since only production is the key verifier of whether your feature works, some companies don’t even want to do those and move directly to deployment to production. When your system contains KPI monitoring and alerting you can quickly react when your deployed application is not behaving properly.

Performance testing - tests executed on an application or set of applications to check if your system can handle a big load of input. In case of our opinionated pipeline these tests could be executed either on test (against stubbed environment) or stage (against the whole system).

Before we go into details of the flow let’s take a look at the following example.

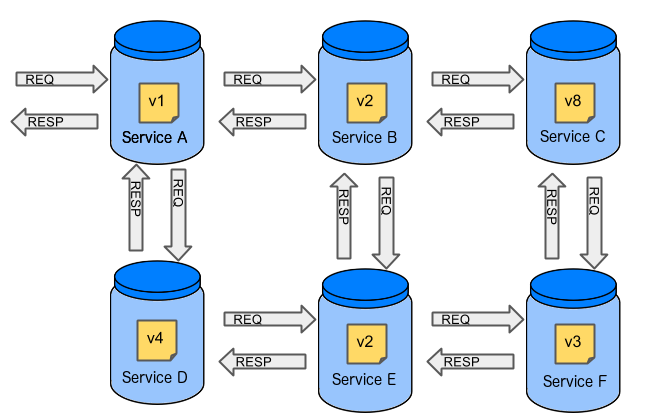

When having only a handful of applications, performing end to end testing is beneficial. From the operations perspective it’s maintainable for a finite number of deployed instances. From the developer’s perspective it’s nice to verify the whole flow in the system for a feature.

In case of microservices the scale starts to be a problem:

The questions arise:

- Should I queue deployments of microservices on one testing environment or should I have an environment per microservice?

  - If I queue deployments, people will have to wait for hours to have their tests run - that can be a problem

- To remove that issue I can have an environment per microservice

  - Who will pay the bills (imagine 100 microservices - each having its own environment)?

  - Who will support each of those environments?

  - Should we spawn a new environment each time we execute a new pipeline and then wrap it up or should we have them up and running for the whole day?

- In which versions should I deploy the dependent microservices - development or production versions?

  - If I have development versions then I can test my application against a feature that is not yet on production. That can lead to exceptions on production

  - If I test against production versions then I’ll never be able to test against a feature under development anytime before deployment to production.

One of the possibilities of tackling these problems is to… not do end to end tests.

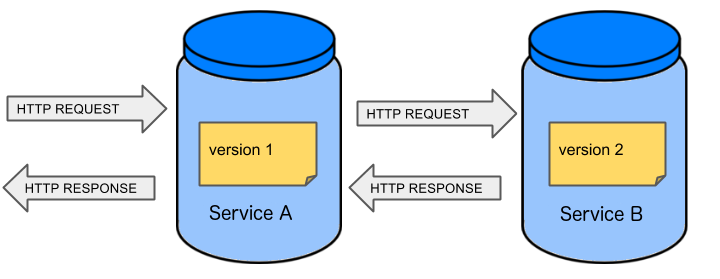

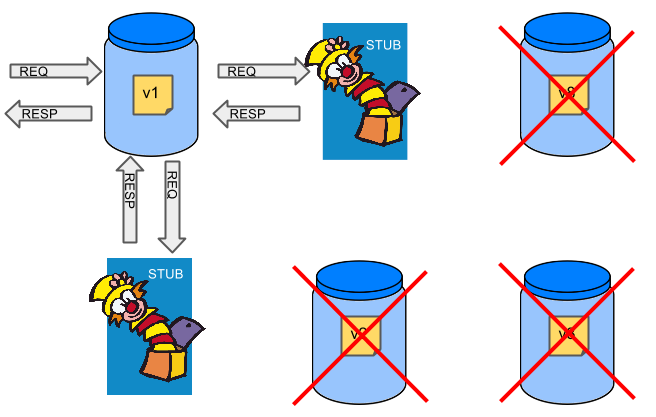

If we stub out all the dependencies of our application then most of the problems presented above disappear. There is no need to start and set up the infrastructure required by the dependent microservices. That way the testing setup looks like this:

Such an approach to testing and deployment gives the following benefits (thanks to the usage of Spring Cloud Contract):

- No need to deploy dependent services

- The stubs used for the tests run on a deployed microservice are the same as those used during integration tests

- Those stubs have been tested against the application that produces them (check Spring Cloud Contract for more information)

- We don’t have many slow tests running on a deployed application - thus the pipeline gets executed much faster

- We don’t have to queue deployments - we’re testing in isolation thus pipelines don’t interfere with each other

- We don’t have to spawn virtual machines each time for deployment purposes

It brings however the following challenges:

- No end to end tests before production - you don’t have the full certainty that a feature is working

- The first time the applications talk to each other for real will be on production

Like every solution it has its benefits and drawbacks. The opinionated pipeline allows you to configure whether you want to follow this flow or not.

The general view behind this deployment pipeline is to:

- test the application in isolation

- test the backwards compatibility of the application in order to roll it back if necessary

- allow testing of the packaged app in a deployed environment

- allow user acceptance tests / performance tests in a deployed environment

- allow deployment to production

Obviously the pipeline could have been split into more steps, but it seems that all of the aforementioned actions fit nicely into our opinionated proposal.

Spring Cloud Pipelines uses Bash scripts extensively. Below you can find the list of software that needs to be installed on a CI server worker for the build to pass.

![[Tip]](images/tip.png) | Tip |

|---|---|

In the demo setup all of these libraries are already installed. |

    apt-get -y install \
        bash \
        git \
        tar \
        zip \
        curl \
        ruby \
        wget \
        unzip \
        python \
        jq

![[Important]](images/important.png) | Important |

|---|---|

In the Jenkins case you will also need |
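Before running a build it can be handy to verify that a worker actually has these tools on its `PATH`. The snippet below is a minimal sketch of such a check. The function name `missing_tools` is made up for this example and is not part of Spring Cloud Pipelines.

```shell
# Print the name of every tool from the argument list that is not on the PATH.
# Prints nothing when all tools are available.
missing_tools() {
  for tool in "$@"; do
    command -v "${tool}" >/dev/null 2>&1 || echo "${tool}"
  done
}

# Usage, with the tool list taken from the apt-get command above:
#   missing_tools bash git tar zip curl ruby wget unzip python jq
```

An empty output means the worker has everything from the list. Any printed name points at a package that still needs to be installed.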

Each application can contain a file called sc-pipelines.yml with the following structure:

    build:
      main_module: foo/bar
    lowercaseEnvironmentName1:
      services:
        - type: service1Type
          name: service1Name
          coordinates: value
        - type: service2Type
          name: service2Name
          key: value
    lowercaseEnvironmentName2:
      services:
        - type: service3Type
          name: service3Name
          coordinates: value
        - type: service4Type
          name: service4Name
          key: value

If you have a multi-module project, you should point to the folder where the module that produces the fat jar lives. In the aforementioned example that module would be present under the `foo/bar` folder. If you have a single module project, then you don’t have to create this section.

For a given environment we declare a list of infrastructure services that we want to have deployed. Services have

- `type` (example: `eureka`, `mysql`, `rabbitmq`, `stubrunner`) - this value then gets applied to the `deployService` Bash function

  - [KUBERNETES] for `mysql` you can pass the database name via the `database` property

- `name` - name of the service to get deployed

- `coordinates` - coordinate that allows you to fetch the binary of the service. Examples: it can be a maven coordinate `groupid:artifactid:version`, docker image `organization/nameOfImage`, etc.

- arbitrary key value pairs - you can customize the services as you wish

When deploying to Cloud Foundry you can provide services of the following types:

- `type: broker`

  - `broker` - name of the CF broker

  - `plan` - name of the plan

  - `params` - additional parameters that will be converted to JSON

  - `useExisting` - should use existing one or create a new one (defaults to `false`)

- `type: app`

  - `coordinates` - maven coordinates of the stub runner jar

  - `manifestPath` - path to the manifest for the stub runner jar

- `type: cups`

  - `params` - additional parameters that will be converted to JSON

- `type: cupsSyslog`

  - `url` - URL to the syslog drain

- `type: cupsRoute`

  - `url` - URL to the route service

- `type: stubrunner`

  - `coordinates` - maven coordinates of the stub runner jar

  - `manifestPath` - path to the manifest for the stub runner jar

    # This file describes which services are required by this application
    # in order for the smoke tests on the TEST environment and end to end tests
    # on the STAGE environment to pass
    # lowercase name of the environment
    test:
      # list of required services
      services:
        - name: config-server
          type: broker
          broker: p-config-server
          plan: standard
          params:
            git:
              uri: https://github.com/ciberkleid/app-config
          useExisting: true
        - name: cloud-bus
          type: broker
          broker: cloudamqp
          plan: lemur
          useExisting: true
        - name: service-registry
          type: broker
          broker: p-service-registry
          plan: standard
          useExisting: true
        - name: circuit-breaker-dashboard
          type: broker
          broker: p-circuit-breaker-dashboard
          plan: standard
          useExisting: true
        - name: stubrunner
          type: stubrunner
          coordinates: io.pivotal:cloudfoundry-stub-runner-boot:0.0.1.M1
          manifestPath: sc-pipelines/manifest-stubrunner.yml
    stage:
      services:
        - name: config-server
          type: broker
          broker: p-config-server
          plan: standard
          params:
            git:
              uri: https://github.com/ciberkleid/app-config
        - name: cloud-bus
          type: broker
          broker: cloudamqp
          plan: lemur
        - name: service-registry
          type: broker
          broker: p-service-registry
          plan: standard
        - name: circuit-breaker-dashboard
          type: broker
          broker: p-circuit-breaker-dashboard
          plan: standard
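To get a quick overview of which services a descriptor declares for a given environment, a small `awk` filter is often enough. The sketch below is not how the pipeline itself reads the file, and a real YAML parser is the safer choice. It assumes the layout of the example above: environment names are top-level keys written exactly as `name:`, and each service entry starts with `- name:`. The function name `service_names` is hypothetical.

```shell
# Print the service names declared for one environment in a descriptor file.
# $1 = lowercase environment name, $2 = path to the descriptor.
# Assumes each service entry starts with "- name:" as in the example above.
service_names() {
  env_name="$1"
  descriptor="$2"
  awk -v env="${env_name}" '
    # A line starting in column one (not a comment) opens a new top-level section.
    /^[^ #]/ { in_env = ($0 == (env ":")) }
    # Inside the requested section, print the value of every "- name:" entry.
    in_env && /^ *- name:/ { print $NF }
  ' "${descriptor}"
}

# Usage:
#   service_names test sc-pipelines.yml
```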

Spring Cloud Pipelines supports three main types of project setup:

- Single Project

- Multi Module

- Multi Project (aka mono repo)

A Single Project is a project that contains a single module that gets built and packaged into a single, executable artifact.

A Multi Module project is a project that contains multiple modules. After building all modules, one gets packaged into a single, executable artifact. You have to point to that module in your pipeline descriptor.

A Multi Project is a project that contains multiple projects. Each of those projects can in turn be a Single Project or a Multi Module project. If the `PROJECT_NAME` environment variable is set and a folder with the same name exists in the root of the repository, Spring Cloud Pipelines assumes that this is the project it should build. E.g. for `PROJECT_NAME=foo`, if there’s a folder `foo`, then Spring Cloud Pipelines will treat the `foo` directory as the root of the `foo` project.
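The lookup rule for a Multi Project can be expressed as a short shell function. This is a sketch of the rule as described here, not the project's actual code, and the function name `project_root` is an assumption.

```shell
# Resolve the directory that should be treated as the project root.
# $1 = root of the cloned repository, $2 = value of PROJECT_NAME (may be empty).
project_root() {
  repo_root="$1"
  project_name="${2:-}"
  if [ -n "${project_name}" ] && [ -d "${repo_root}/${project_name}" ]; then
    # A folder matching PROJECT_NAME exists: build that sub-project.
    echo "${repo_root}/${project_name}"
  else
    # Otherwise fall back to the repository root.
    echo "${repo_root}"
  fi
}

# Usage:
#   cd "$(project_root "$(pwd)" "${PROJECT_NAME:-}")"
```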

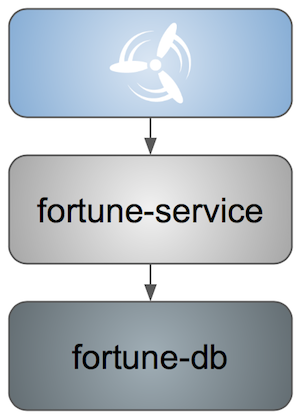

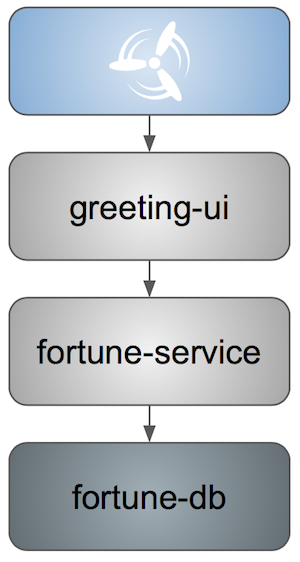

For demo purposes we’re providing a Docker Compose setup with Artifactory and Concourse / Jenkins tools. Regardless of the CD application you pick, for the pipeline to pass you need

- either

  - a Cloud Foundry instance (for example Pivotal Web Services or PCF Dev)

  - a Kubernetes cluster (for example Minikube)

- the infrastructure applications deployed to the JAR hosting application (for the demo we’re providing Artifactory).

  - `Eureka` for Service Discovery

  - `Stub Runner Boot` for running Spring Cloud Contract stubs.

![[Tip]](images/tip.png) | Tip |

|---|---|

In the demos we’re showing you how to first build the |

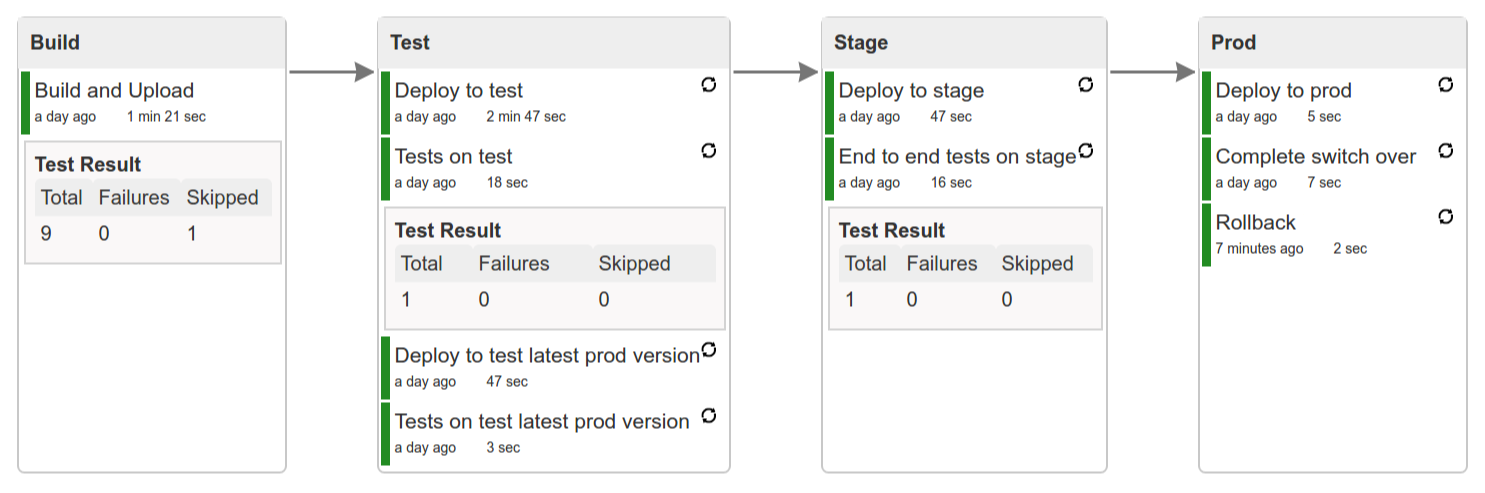

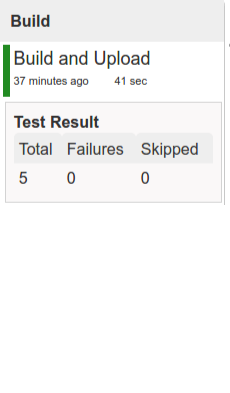

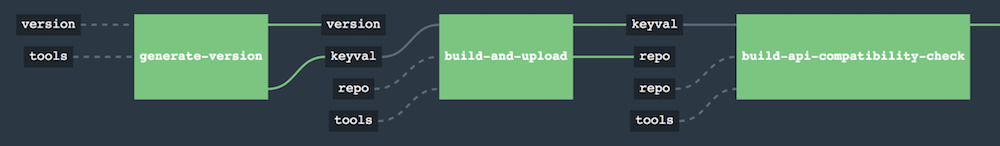

In this step we’re generating a version of the pipeline, next we’re running unit, integration and contract tests. Finally we’re:

- publishing a fat jar of the application

- publishing a Spring Cloud Contract jar containing stubs of the application

- for Kubernetes - uploading a Docker image of the application

During this phase we’re executing a Maven build using Maven Wrapper or a Gradle build using Gradle Wrapper, with unit and integration tests. We’re also tagging the repository with the `dev/${version}` format. That way in each subsequent step of the pipeline we’re able to retrieve the tagged version. Also, we know exactly which version of the pipeline corresponds to which Git hash.

Once the artifact is built, we’re running the API compatibility check.

- we’re searching for the latest production deployment

- we’re retrieving the contracts that were used by that deployment

- from the contracts we’re generating API tests to see if the current implementation is fulfilling the HTTP / messaging contracts that the current production deployment has defined (we’re checking backward compatibility of the API)

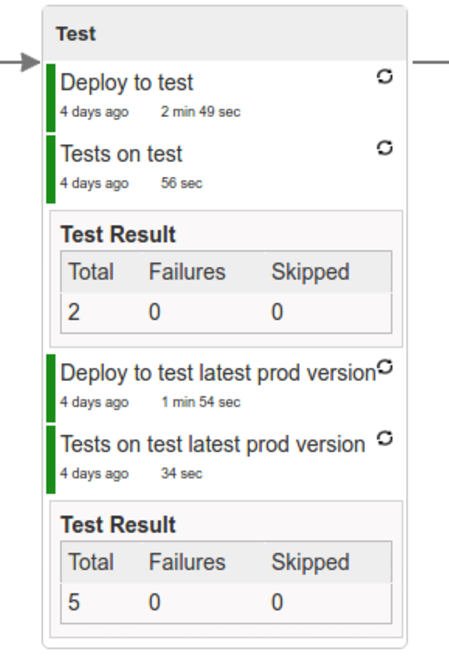

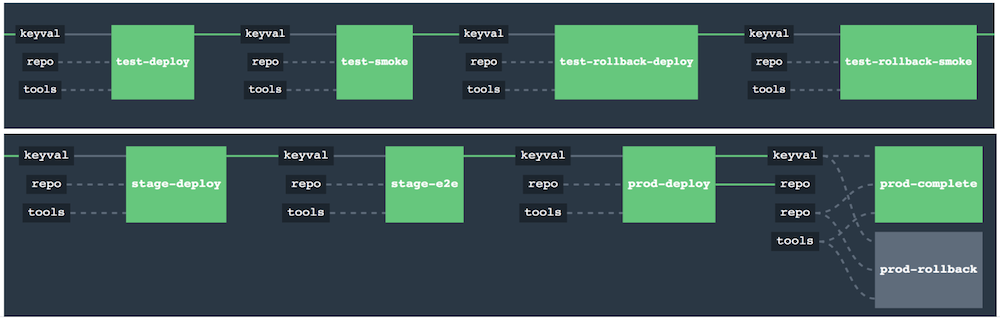

Here we’re

- starting a RabbitMQ service in PaaS

- deploying `Eureka` infrastructure application to PaaS

- downloading the fat jar from Nexus and we’re uploading it to PaaS. We want the application to run in isolation (be surrounded by stubs).

![[Tip]](images/tip.png) | Tip |

|---|---|

Currently due to port constraints in Cloud Foundry

we cannot run multiple stubbed HTTP services in the cloud so to fix this issue we’re running

the application with |

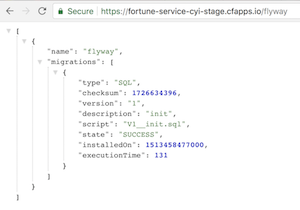

- if the application is using a database then it gets upgraded at this point via Flyway, Liquibase or any other tool once the application gets started

- from the project’s Maven or Gradle build we’re extracting

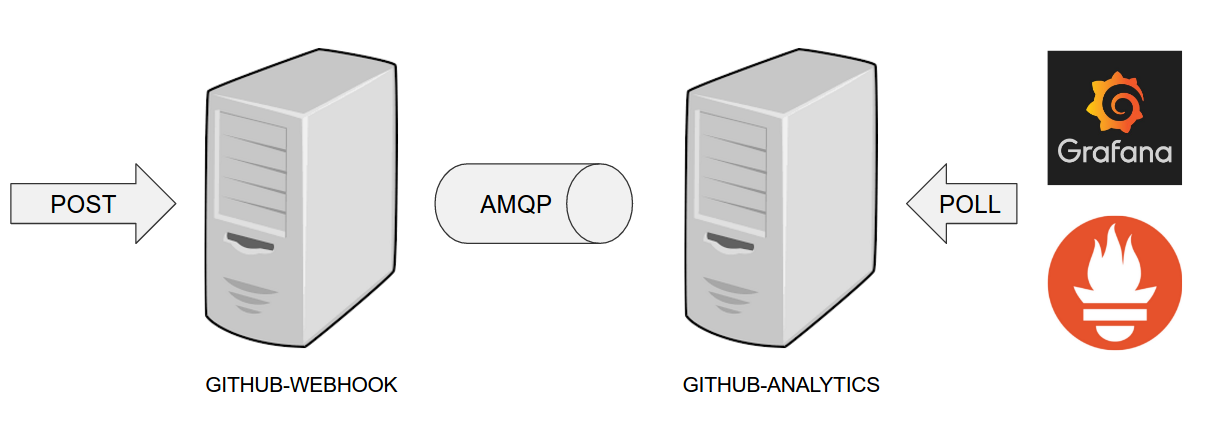

stubrunner.idsproperty that contains all thegroupId:artifactId:version:classifiernotation of dependant projects for which the stubs should be downloaded. - then we’re uploading

Stub Runner Bootand pass the extractedstubrunner.idsto it. That way we’ll have a running application in Cloud Foundry that will download all the necessary stubs of our application - from the checked out code we’re running the tests available under the

smokeprofile. In the case ofGitHub Analyticsapplication we’re triggering a message from theGitHub Webhookapplication’s stub, that is sent via RabbitMQ to GitHub Analytics. Then we’re checking if message count has increased. - once the tests pass we’re searching for the last production release. Once the application

is deployed to production we’re tagging it with

prod/${version}tag. If there is no such tag (there was no production release) there will be no rollback tests executed. If there was a production release the tests will get executed. - assuming that there was a production release we’re checking out the code corresponding to that release (we’re checking out the tag), we’re downloading the appropriate artifact (either JAR for Cloud Foundry or Docker image for Kubernetes) and we’re uploading it to PaaS. IMPORTANT the old artifact is running against the NEW version of the database.

- we’re running the old

smoketests against the freshly deployed application surrounded by stubs. If those tests pass then we have a high probability that the application is backwards compatible - the default behaviour is that after all of those steps the user can manually click to deploy the application to a stage environment
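The search for the last production release boils down to finding the newest `prod/${version}` tag. The sketch below picks it by version ordering, which is a substitute technique chosen for illustration. The real pipeline may order tags differently, for example by tag date. It relies on `sort -V` (version sort), which GNU coreutils provides, and the function name `latest_prod_version` is hypothetical.

```shell
# Read tag names from standard input and print the highest version that was
# tagged with the prod/ prefix. Prints nothing if there is no such tag,
# which corresponds to "no production release yet, skip the rollback tests".
latest_prod_version() {
  grep '^prod/' | sed 's|^prod/||' | sort -V | tail -n 1
}

# Usage inside a Git repository:
#   git tag --list | latest_prod_version
```

An empty result can then be used as the signal that no rollback tests should be executed.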

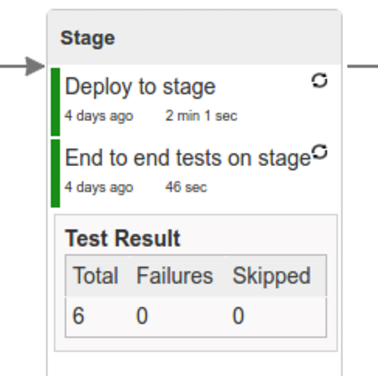

Here we’re

- starting a RabbitMQ service in PaaS

- deploying `Eureka` infrastructure application to PaaS

- downloading the artifact (either JAR for Cloud Foundry or Docker image for Kubernetes) and we’re uploading it to PaaS.

Next we have a manual step in which:

- from the checked out code we’re running the tests available under the `e2e` profile. In the case of `GitHub Analytics` application we’re sending an HTTP message to GitHub Analytics’ endpoint. Then we’re checking if the received message count has increased.

The step is manual by default because the stage environment is often shared between teams and some preparations on databases / infrastructure have to take place before running the tests. Ideally this step should be fully automatic.

The step to deploy to production is manual but ideally it should be automatic.

![[Important]](images/important.png) | Important |

|---|---|

This step does deployment to production. On production you would assume

that you have the infrastructure running. That’s why before you run this step you

must execute a script that will provision the services on the production environment.

For |

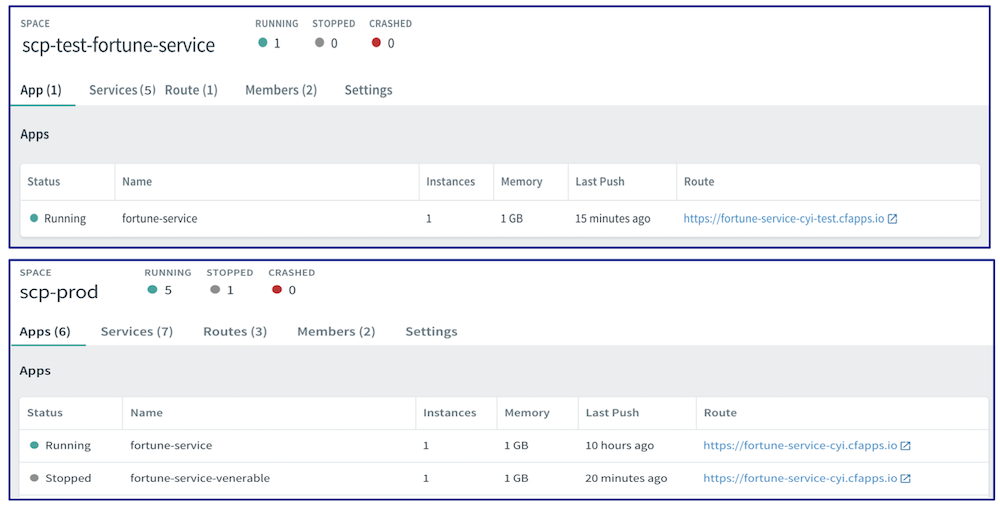

Here we’re

- tagging the Git repo with `prod/${version}` tag

- downloading the application artifact (either JAR for Cloud Foundry or Docker image for Kubernetes)

- we’re doing Blue Green deployment:

  - for Cloud Foundry

    - we’re renaming the current instance of the app e.g. `fooService` to `fooService-venerable`

    - we’re deploying the new instance of the app under the `fooService` name

    - now two instances of the same application are running on production

  - for Kubernetes

    - we’re deploying a service with the name of the app e.g. `fooService`

    - we’re doing a deployment with the name of the app with version suffix (with the name escaped to fulfill the DNS name requirements) e.g. `fooService-1-0-0-M1-123-456-VERSION`

    - all deployments of the same application have the same label `name` equal to app name e.g. `fooService`

    - the service is routing the traffic basing on the `name` label selector

    - now two instances of the same application are running on production
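The escaping of the Kubernetes deployment name can be sketched as follows. The input version format (`1.0.0.M1-123_456-VERSION`) and the function name `deployment_name` are assumptions made for this example. The sketch simply replaces every character that is not a letter, digit or dash with a dash, which reproduces the sample name shown above. Kubernetes object names generally have to be lowercase, so a real implementation may need further normalization.

```shell
# Build a deployment name from the application name and a version by
# replacing every character other than letters, digits and dashes with a dash.
deployment_name() {
  app_name="$1"
  version="$2"
  escaped="$(printf '%s\n' "${version}" | sed 's/[^A-Za-z0-9-]/-/g')"
  echo "${app_name}-${escaped}"
}

# Usage:
#   deployment_name fooService 1.0.0.M1-123_456-VERSION
```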

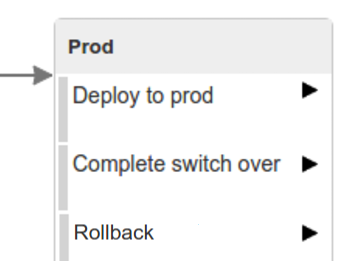

- in the `Complete switch over` which is a manual step

  - we’re stopping the old instance

  - remember to run this step only after you have confirmed that both instances are working fine!

- in the `Rollback` which is a manual step

  - we’re routing all the traffic to the old instance

    - in CF we do that by ensuring that blue is running and removing green

    - in K8S we do that by scaling the number of instances of green to 0

  - we’re removing the latest prod git tag
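For Cloud Foundry, the blue-green steps described in this section map onto a handful of `cf` CLI commands. The sketch below only prints the commands for each phase instead of executing them. The function name `blue_green_commands` and the phase names are assumptions, and a real deployment would pass additional options to `cf push` (artifact path, manifest and so on).

```shell
# Print the cf CLI commands for one phase of the blue-green flow (dry run).
# $1 = application name, $2 = phase: deploy | complete | rollback
blue_green_commands() {
  app="$1"
  phase="$2"
  case "${phase}" in
    deploy)
      # Keep the running instance under a new name, push the new one.
      echo "cf rename ${app} ${app}-venerable"
      echo "cf push ${app}"
      ;;
    complete)
      # Complete switch over: stop the old instance.
      echo "cf stop ${app}-venerable"
      ;;
    rollback)
      # Rollback: make sure the old instance runs and remove the new one.
      echo "cf start ${app}-venerable"
      echo "cf delete ${app} -f"
      ;;
    *)
      return 1
      ;;
  esac
}

# Usage:
#   blue_green_commands fooService deploy
```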

In this section we will go through the assumptions we’ve made in the project structure and project properties.

We’ve taken the following opinionated decisions for a Cloud Foundry based project:

- application built using Maven or Gradle wrappers

- application deployment to Cloud Foundry

- your application needs a `manifest.yml` Cloud Foundry descriptor

- For Maven (example project):

  - usage of Maven Wrapper

  - `settings.xml` is parametrized to pass the credentials to push code to Artifactory

    - `M2_SETTINGS_REPO_ID` - server id for Artifactory / Nexus deployment

    - `M2_SETTINGS_REPO_USERNAME` - username for Artifactory / Nexus deployment

    - `M2_SETTINGS_REPO_PASSWORD` - password for Artifactory / Nexus deployment

  - artifacts deployment by `./mvnw clean deploy`

  - `stubrunner.ids` property to retrieve list of collaborators for which stubs should be downloaded

  - `repo.with.binaries` property - (Injected by the pipeline) will contain the URL to the repo containing binaries (e.g. Artifactory)

  - `distribution.management.release.id` property - (Injected by the pipeline) ID of the distribution management. Corresponds to server id in `settings.xml`

  - `distribution.management.release.url` property - (Injected by the pipeline) will contain the URL to the repo containing binaries (e.g. Artifactory)

  - running API compatibility tests via the `apicompatibility` Maven profile

  - `latest.production.version` property - (Injected by the pipeline) will contain the latest production version for the repo (retrieved from Git tags)

  - running smoke tests on a deployed app via the `smoke` Maven profile

  - running end to end tests on a deployed app via the `e2e` Maven profile

- For Gradle (example project check the `gradle/pipeline.gradle` file):

  - usage of Gradle Wrapper

  - `deploy` task for artifacts deployment

  - `REPO_WITH_BINARIES_FOR_UPLOAD` env var - (Injected by the pipeline) will contain the URL to the repo containing binaries (e.g. Artifactory)

  - `M2_SETTINGS_REPO_USERNAME` env var - Username used to send the binary to the repo containing binaries (e.g. Artifactory)

  - `M2_SETTINGS_REPO_PASSWORD` env var - Password used to send the binary to the repo containing binaries (e.g. Artifactory)

  - running API compatibility tests via the `apiCompatibility` task

  - `latestProductionVersion` property - (Injected by the pipeline) will contain the latest production version for the repo (retrieved from Git tags)

  - running smoke tests on a deployed app via the `smoke` task

  - running end to end tests on a deployed app via the `e2e` task

  - `groupId` task to retrieve group id

  - `artifactId` task to retrieve artifact id

  - `currentVersion` task to retrieve the current version

  - `stubIds` task to retrieve list of collaborators for which stubs should be downloaded
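The conventions above mean that a pipeline step can pick the right command just by checking which wrapper a project ships. The following sketch illustrates that idea. The function name `pipeline_command` and the step names are hypothetical, and the Maven goals used for the profile-based steps (`clean verify`) are an assumption. Only `./mvnw clean deploy`, the profile names and the Gradle task names come from the lists above.

```shell
# Print the build command for a pipeline step, based on the wrapper found in
# the project directory. $1 = project directory, $2 = step name.
# Returns 1 for an unknown step or when no wrapper is present.
pipeline_command() {
  project_dir="$1"
  step="$2"
  if [ -f "${project_dir}/mvnw" ]; then
    case "${step}" in
      build)            echo "./mvnw clean deploy" ;;
      apicompatibility) echo "./mvnw clean verify -Papicompatibility" ;;
      smoke)            echo "./mvnw clean verify -Psmoke" ;;
      e2e)              echo "./mvnw clean verify -Pe2e" ;;
      *)                return 1 ;;
    esac
  elif [ -f "${project_dir}/gradlew" ]; then
    case "${step}" in
      build)            echo "./gradlew deploy" ;;
      apicompatibility) echo "./gradlew apiCompatibility" ;;
      smoke)            echo "./gradlew smoke" ;;
      e2e)              echo "./gradlew e2e" ;;
      *)                return 1 ;;
    esac
  else
    return 1
  fi
}

# Usage:
#   pipeline_command . smoke
```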

We’ve taken the following opinionated decisions for a Kubernetes based project:

- application built using Maven or Gradle wrappers

- application deployment to Kubernetes

- The produced Java Docker image needs to allow passing of system properties via `SYSTEM_PROPS` env variable

- For Maven (example project):

  - usage of Maven Wrapper

  - `settings.xml` is parametrized to pass the credentials to push code to Artifactory and Docker repository

    - `M2_SETTINGS_REPO_ID` - server id for Artifactory / Nexus deployment

    - `M2_SETTINGS_REPO_USERNAME` - username for Artifactory / Nexus deployment

    - `M2_SETTINGS_REPO_PASSWORD` - password for Artifactory / Nexus deployment

    - `DOCKER_SERVER_ID` - server id for Docker image pushing

    - `DOCKER_USERNAME` - username for Docker image pushing

    - `DOCKER_PASSWORD` - password for Docker image pushing

    - `DOCKER_EMAIL` - email for Docker image pushing

  - `DOCKER_REGISTRY_URL` env var - (Overridable - defaults to DockerHub) URL of the Docker registry

  - `DOCKER_REGISTRY_ORGANIZATION` - env var containing the organization where your Docker repo lives

  - artifacts and Docker image deployment by `./mvnw clean deploy`

  - `stubrunner.ids` property to retrieve list of collaborators for which stubs should be downloaded

  - `repo.with.binaries` property - (Injected by the pipeline) will contain the URL to the repo containing binaries (e.g. Artifactory)

  - `distribution.management.release.id` property - (Injected by the pipeline) ID of the distribution management. Corresponds to server id in `settings.xml`

  - `distribution.management.release.url` property - (Injected by the pipeline) will contain the URL to the repo containing binaries (e.g. Artifactory)

  - `deployment.yml` contains the Kubernetes deployment descriptor

  - `service.yml` contains the Kubernetes service descriptor

  - running API compatibility tests via the `apicompatibility` Maven profile

  - `latest.production.version` property - (Injected by the pipeline) will contain the latest production version for the repo (retrieved from Git tags)

  - running smoke tests on a deployed app via the `smoke` Maven profile

  - running end to end tests on a deployed app via the `e2e` Maven profile

- For Gradle (example project check the `gradle/pipeline.gradle` file):

  - usage of Gradle Wrapper

  - `deploy` task for artifacts deployment

  - `REPO_WITH_BINARIES_FOR_UPLOAD` env var - (Injected by the pipeline) will contain the URL to the repo containing binaries (e.g. Artifactory)

  - `M2_SETTINGS_REPO_USERNAME` env var - Username used to send the binary to the repo containing binaries (e.g. Artifactory)

  - `M2_SETTINGS_REPO_PASSWORD` env var - Password used to send the binary to the repo containing binaries (e.g. Artifactory)

  - `DOCKER_REGISTRY_URL` env var - (Overridable - defaults to DockerHub) URL of the Docker registry

  - `DOCKER_USERNAME` env var - Username used to send the Docker image

  - `DOCKER_PASSWORD` env var - Password used to send the Docker image

  - `DOCKER_EMAIL` env var - Email used to send the Docker image

  - `DOCKER_REGISTRY_ORGANIZATION` - env var containing the organization where your Docker repo lives

  - `deployment.yml` contains the Kubernetes deployment descriptor

  - `service.yml` contains the Kubernetes service descriptor

  - running API compatibility tests via the `apiCompatibility` task

  - `latestProductionVersion` property - (Injected by the pipeline) will contain the latest production version for the repo (retrieved from Git tags)

  - running smoke tests on a deployed app via the `smoke` task

  - running end to end tests on a deployed app via the `e2e` task

  - `groupId` task to retrieve group id

  - `artifactId` task to retrieve artifact id

  - `currentVersion` task to retrieve the current version

  - `stubIds` task to retrieve list of collaborators for which stubs should be downloaded

![[Important]](images/important.png) | Important |

|---|---|

In this chapter we assume that you perform deployment of your application to Cloud Foundry PaaS |

The Spring Cloud Pipelines repository contains an opinionated Concourse pipeline definition. Those jobs form an empty pipeline and a sample, opinionated one that you can use in your company.

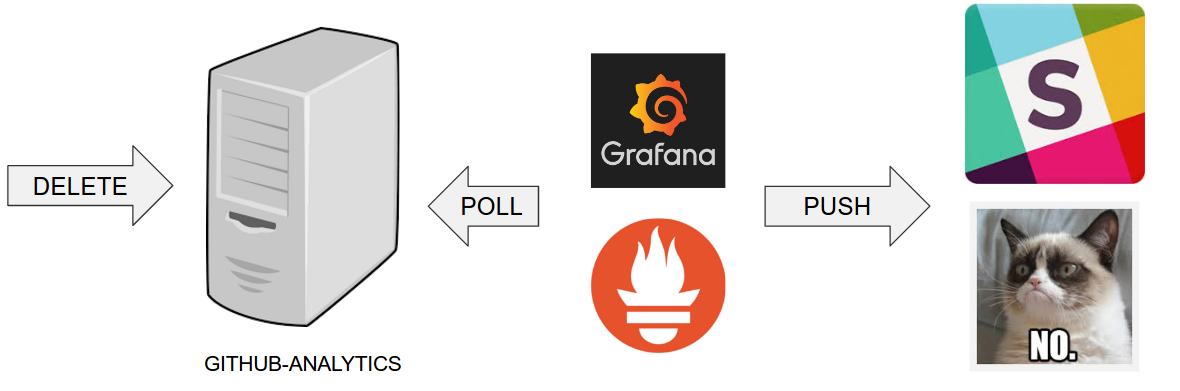

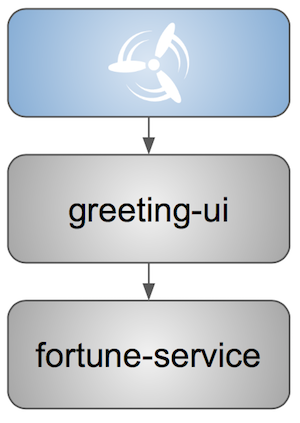

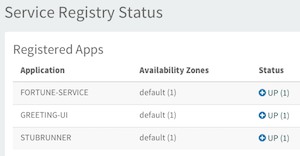

All in all there are the following projects taking part in the whole microservice setup for this demo.

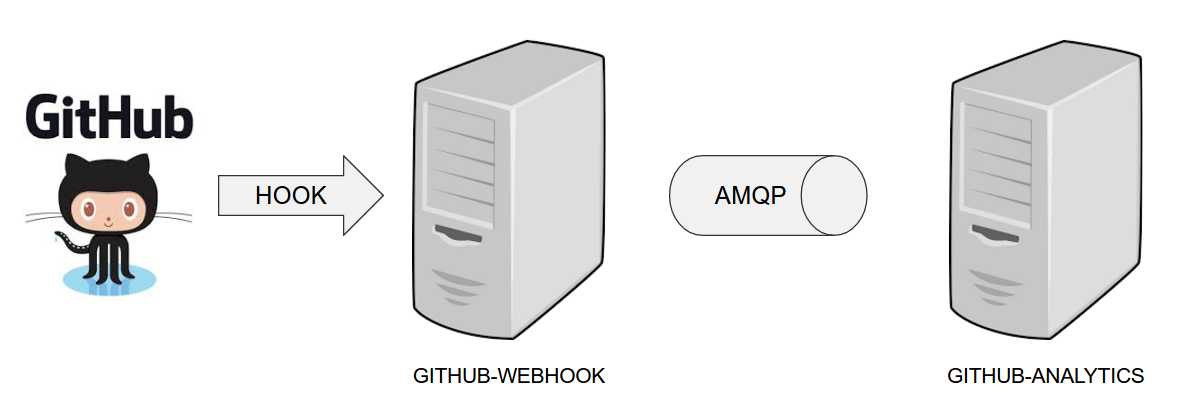

- Github Analytics - the app that has a REST endpoint and uses messaging. Our business application.

- Github Webhook - project that emits messages that are used by Github Analytics. Our business application.

- Eureka - simple Eureka Server. This is an infrastructure application.

- Github Analytics Stub Runner Boot - Stub Runner Boot server to be used for tests with Github Analytics. Uses Eureka and Messaging. This is an infrastructure application.

If you want to just run the demo as far as possible using PCF Dev and Docker Compose, follow the steps below.

Four apps make up the pipeline; they are listed above.

You need to fork only github-webhook and github-analytics. That’s because only then will your user be able to tag and push the tag to the repo.

Concourse + Artifactory can be run locally. To do that just execute the start.sh script from this repo.

git clone https://github.com/spring-cloud/spring-cloud-pipelines

cd spring-cloud-pipelines/concourse

./setup_docker_compose.sh

./start.sh 192.168.99.100

The setup_docker_compose.sh script should be executed once only to allow generation of keys.

The 192.168.99.100 param is an example of an external URL of Concourse (equal to Docker-Machine ip in this example).

Then Concourse will be running on port 8080 and Artifactory on port 8081.

When Artifactory is running, just execute the tools/deploy-infra.sh script from this repo.

git clone https://github.com/spring-cloud/spring-cloud-pipelines

cd spring-cloud-pipelines/

./tools/deploy-infra.sh

As a result both eureka and stub runner repos will be cloned, built and uploaded to Artifactory.

![[Tip]](images/tip.png) | Tip |

|---|---|

You can skip this step if you have CF installed and don’t want to use PCF Dev. The only thing you have to do is to set up spaces. |

![[Warning]](images/warning.png) | Warning |

|---|---|

It’s more than likely that you’ll run out of resources when you reach stage step. Don’t worry! Keep calm and clear some apps from PCF Dev and continue. |

You have to download and start PCF Dev. A link how to do it is available here.

The default credentials when using PCF Dev are:

username: user

password: pass

email: user

org: pcfdev-org

space: pcfdev-space

api: api.local.pcfdev.io

You can start the PCF Dev like this:

cf dev start

You’ll have to create 3 separate spaces (email admin, pass admin):

cf login -a https://api.local.pcfdev.io --skip-ssl-validation -u admin -p admin -o pcfdev-org

cf create-space pcfdev-test

cf set-space-role user pcfdev-org pcfdev-test SpaceDeveloper

cf create-space pcfdev-stage

cf set-space-role user pcfdev-org pcfdev-stage SpaceDeveloper

cf create-space pcfdev-prod

cf set-space-role user pcfdev-org pcfdev-prod SpaceDeveloper

You can also execute the ./tools/cf-helper.sh setup-spaces to do this.
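The repeated create-space / set-space-role pairs above can also be generated with a loop. The sketch below is a hypothetical helper, not the contents of ./tools/cf-helper.sh: the function name print_space_setup_commands and its two arguments are assumptions made for illustration. It only prints the cf commands, so you can review them before running them yourself.

```shell
#!/usr/bin/env bash
# Hypothetical helper (not part of the repo): prints the cf commands that
# create the three demo spaces and grant a user the SpaceDeveloper role.
print_space_setup_commands() {
  local user="${1:-user}"       # PCF Dev default user
  local org="${2:-pcfdev-org}"  # PCF Dev default org
  local env
  for env in test stage prod; do
    echo "cf create-space pcfdev-${env}"
    echo "cf set-space-role ${user} ${org} pcfdev-${env} SpaceDeveloper"
  done
}

# Print the commands for review; nothing is executed against CF here.
print_space_setup_commands
```

The commands assume that you are already logged in as admin and targeting the pcfdev-org org, as in the cf login line above.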

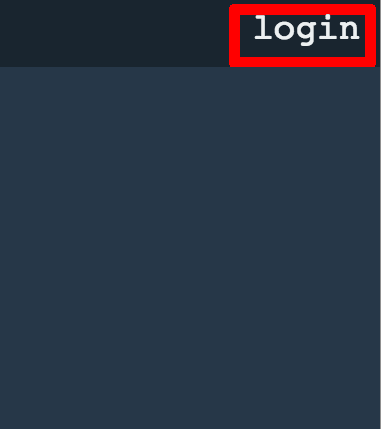

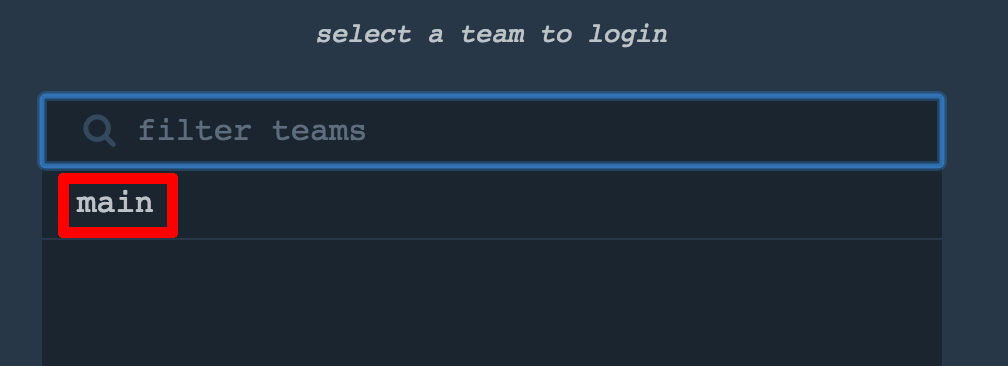

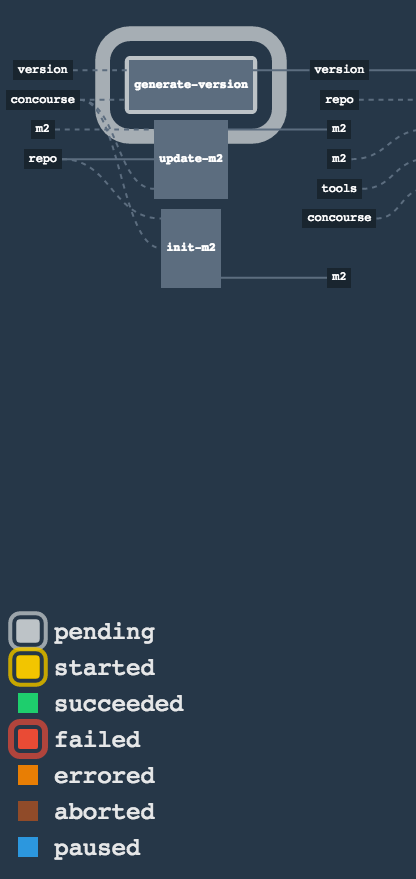

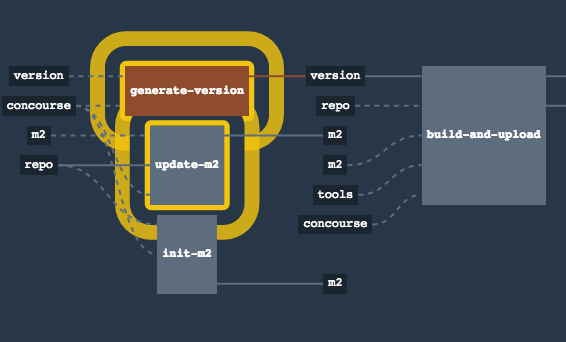

If you go to the Concourse website you should see something like this:

You can click one of the icons (depending on your OS) to download fly, which is the Concourse CLI. Once you’ve downloaded that (and maybe added to your PATH) you can run:

fly --version

If fly is properly installed then it should print out the version.

The repo comes with credentials-sample-cf.yml, which is set up with sample data (most credentials are set to be applicable for PCF Dev). Copy this file to a new file called credentials.yml (the file is added to .gitignore, so don’t worry that you’ll push it with your passwords) and edit it as you wish. For our demo just set up:

- app-url - url pointing to your forked github-webhook repo

- github-private-key - your private key to clone / tag GitHub repos

- repo-with-binaries - the IP is set to the defaults for Docker Machine. You should update it to point to your setup

If you don’t have a Docker Machine just execute the ./whats_my_ip.sh script to get an external IP that you can pass to your repo-with-binaries instead of the default Docker Machine IP.

Below you can see what environment variables are required by the scripts. To the right hand side you can see the default values for PCF Dev that we set in the credentials-sample-cf.yml.

| Property Name | Property Description | Default value |
|---|---|---|
| BUILD_OPTIONS | Additional options you would like to pass to the Maven / Gradle build | |
| PAAS_TEST_API_URL | The URL to the CF Api for TEST env | api.local.pcfdev.io |
| PAAS_STAGE_API_URL | The URL to the CF Api for STAGE env | api.local.pcfdev.io |
| PAAS_PROD_API_URL | The URL to the CF Api for PROD env | api.local.pcfdev.io |
| PAAS_TEST_ORG | Name of the org for the test env | pcfdev-org |
| PAAS_TEST_SPACE_PREFIX | Prefix of the name of the CF space for the test env to which the app name will be appended | sc-pipelines-test |
| PAAS_STAGE_ORG | Name of the org for the stage env | pcfdev-org |
| PAAS_STAGE_SPACE | Name of the space for the stage env | sc-pipelines-stage |
| PAAS_PROD_ORG | Name of the org for the prod env | pcfdev-org |
| PAAS_PROD_SPACE | Name of the space for the prod env | sc-pipelines-prod |
| REPO_WITH_BINARIES_FOR_UPLOAD | URL to repo with the deployed jars | |
| M2_SETTINGS_REPO_ID | The id of server from Maven settings.xml | artifactory-local |
| PAAS_HOSTNAME_UUID | Additional suffix for the route. In a shared environment the default routes can be already taken | |
| JAVA_BUILDPACK_URL | The URL to the Java buildpack to be used by CF | |

Log in by executing the login script (it assumes that fly is either on your PATH or in the same folder as the script). The example below is for Concourse running at 192.168.99.100; if you don’t provide any value, localhost is assumed.

./login.sh 192.168.99.100

Next run the command to create the pipeline.

./set_pipeline.sh

This creates a github-webhook pipeline under the docker alias, using the provided credentials.yml file.

You can override these values in exactly that order (e.g. ./set_pipeline.sh some-project another-target some-other-credentials.yml).
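The override order described above maps naturally onto positional parameters with defaults. The following is a minimal sketch of that idea, not the actual contents of the script in the repo: the function name build_set_pipeline_command and the pipeline.yml file name are assumptions, while -t, -p, -c and -l are regular fly set-pipeline options. The function only prints the command it would run.

```shell
#!/usr/bin/env bash
# Sketch only: resolves pipeline name, fly target alias and credentials file
# from positional arguments, falling back to the defaults named in the text.
build_set_pipeline_command() {
  local pipeline_name="${1:-github-webhook}"
  local fly_alias="${2:-docker}"
  local credentials="${3:-credentials.yml}"
  # pipeline.yml is an assumed name for the pipeline definition file.
  echo "fly -t ${fly_alias} set-pipeline -p ${pipeline_name} -c pipeline.yml -l ${credentials}"
}

# No arguments: all three defaults apply.
build_set_pipeline_command
# All three values overridden, in the documented order.
build_set_pipeline_command some-project another-target some-other-credentials.yml
```

Because the values are positional, overriding the credentials file also requires passing the project name and the alias before it.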

![[Important]](images/important.png) | Important |

|---|---|

In this chapter we assume that you perform deployment of your application to Kubernetes PaaS |

The Spring Cloud Pipelines repository contains an opinionated Concourse pipeline definition. Those jobs form an empty pipeline and a sample, opinionated one that you can use in your company.

All in all there are the following projects taking part in the whole microservice setup for this demo.

- Github Analytics - the app that has a REST endpoint and uses messaging. Our business application.

- Github Webhook - project that emits messages that are used by Github Analytics. Our business application.

- Eureka - simple Eureka Server. This is an infrastructure application.

- Github Analytics Stub Runner Boot - Stub Runner Boot server to be used for tests with Github Analytics. Uses Eureka and Messaging. This is an infrastructure application.

This is a guide for Concourse pipeline.

If you want to just run the demo as far as possible using PCF Dev and Docker Compose

The simplest way to deploy Concourse to K8S is to use Helm.

Once you have Helm installed and your kubectl is pointing to the cluster, just type this command to install the Concourse cluster in your K8S cluster.

$ helm install stable/concourse --name concourse

Once it’s done you’ll see the following output:

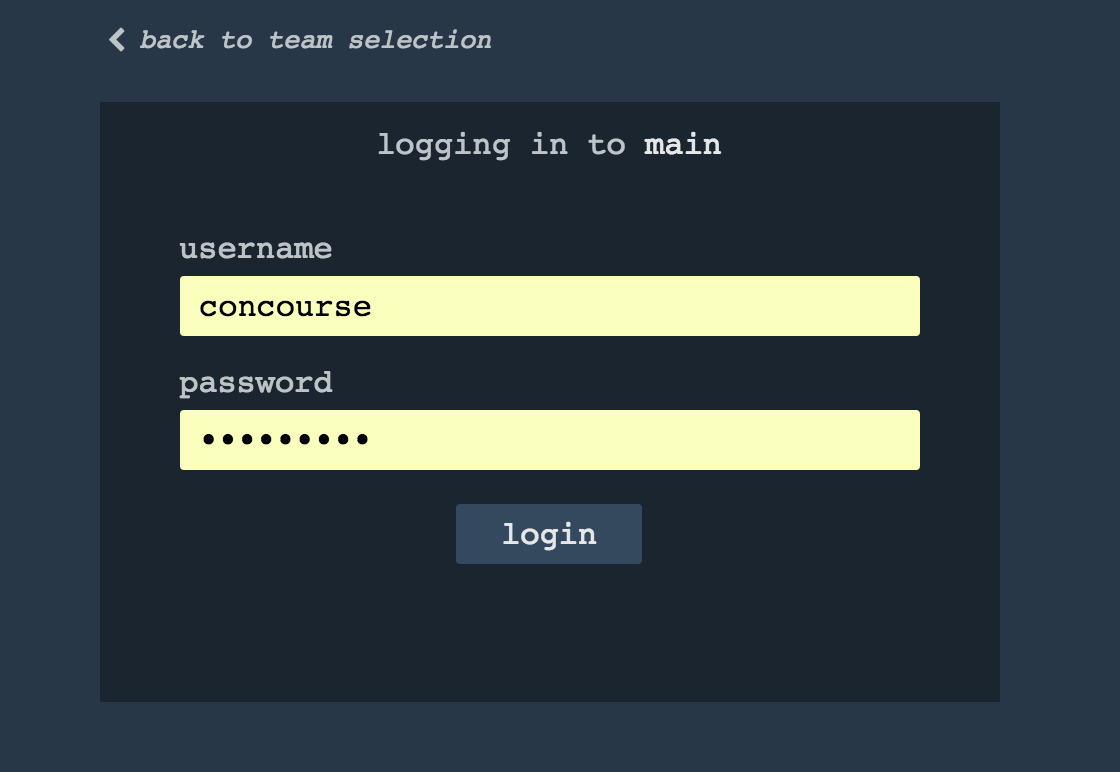

1. Concourse can be accessed:

* Within your cluster, at the following DNS name at port 8080:

concourse-web.default.svc.cluster.local

* From outside the cluster, run these commands in the same shell:

export POD_NAME=$(kubectl get pods --namespace default -l "app=concourse-web" -o jsonpath="{.items[0].metadata.name}")

echo "Visit http://127.0.0.1:8080 to use Concourse"

kubectl port-forward --namespace default $POD_NAME 8080:8080

2. Login with the following credentials

Username: concourse

Password: concourse

Just follow these steps and log in to Concourse under http://127.0.0.1:8080.

We can also use Helm to deploy Artifactory to K8S.

$ helm install --name artifactory --set artifactory.image.repository=docker.bintray.io/jfrog/artifactory-oss stable/artifactory

After executing this you’ll see the following output:

NOTES:

Congratulations. You have just deployed JFrog Artifactory Pro!

1. Get the Artifactory URL by running these commands:

NOTE: It may take a few minutes for the LoadBalancer IP to be available. You can watch the status of the service by running 'kubectl get svc -w nginx'

export SERVICE_IP=$(kubectl get svc --namespace default nginx -o jsonpath='{.status.loadBalancer.ingress[0].ip}')

echo http://$SERVICE_IP/

2. Open Artifactory in your browser

Default credential for Artifactory:

user: admin

password: password

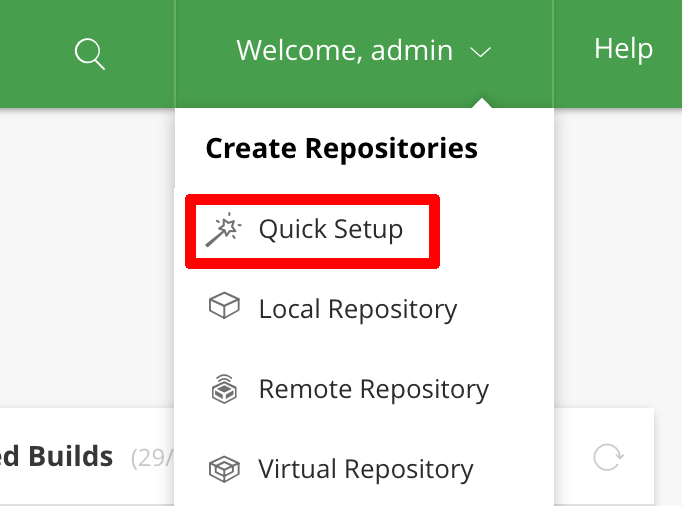

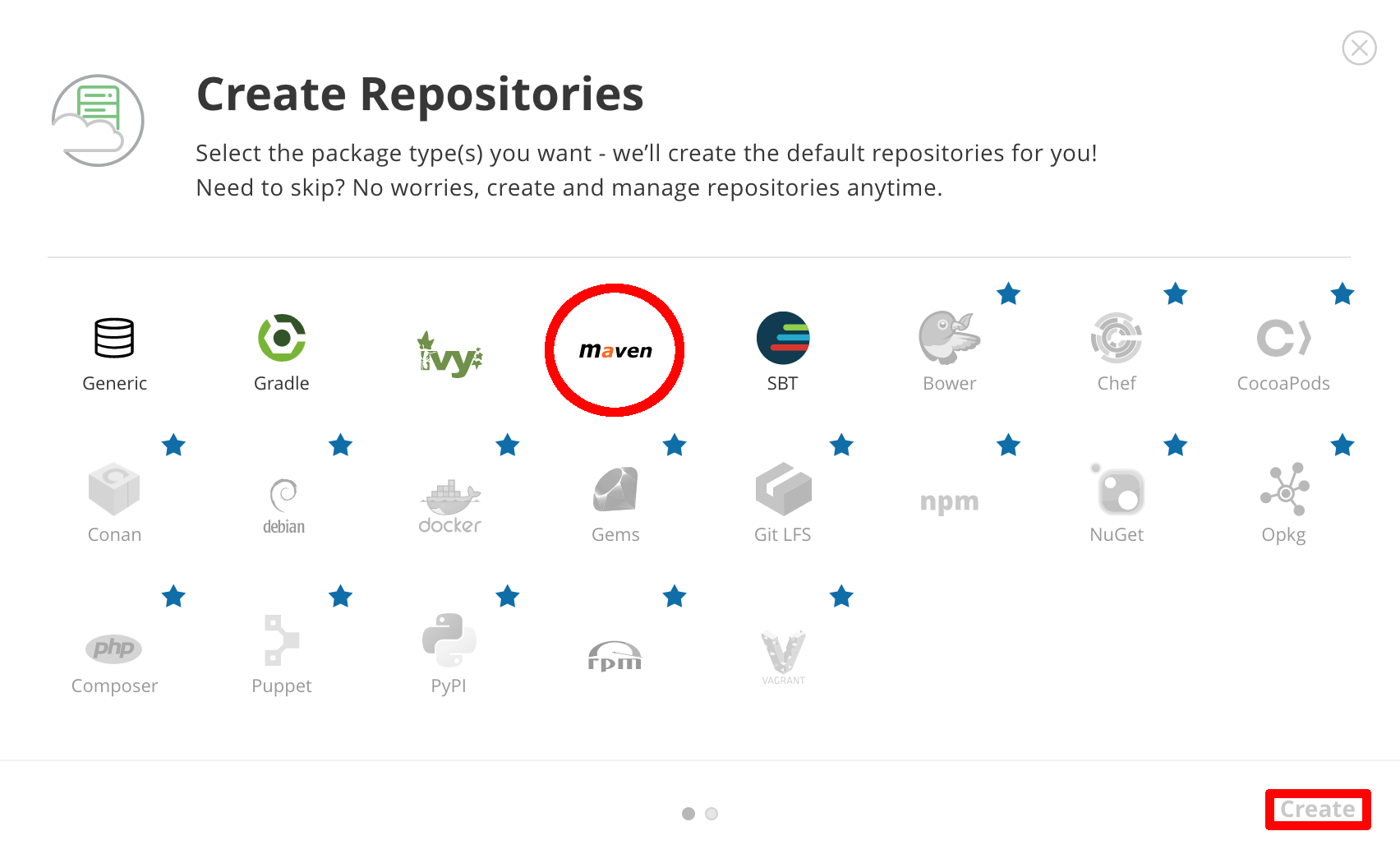

Next, we need to set up the repositories.

First, access the Artifactory URL and log in with user admin and password password.

Then, click on the Maven setup and click Create.

If you go to the Concourse website you should see something like this:

You can click one of the icons (depending on your OS) to download fly, which is the Concourse CLI. Once you’ve downloaded that (and maybe added to your PATH) you can run:

fly --version

If fly is properly installed then it should print out the version.

There is a sample credentials file called credentials-sample-k8s.yml prepared for k8s. You can use it as a base for your credentials.yml.

To allow the Concourse worker’s spawned container to connect to the Kubernetes cluster you will need to pass the CA contents and the auth token.

To get the contents of CA for GCE just execute

$ kubectl get secret $(kubectl get secret | grep default-token | awk '{print $1}') -o jsonpath='{.data.ca\.crt}' | base64 --decode

To get the token just type:

$ kubectl get secret $(kubectl get secret | grep default-token | awk '{print $1}') -o jsonpath='{.data.token}' | base64 --decode

Set that value under paas-test-client-token, paas-stage-client-token and paas-prod-client-token

After running Concourse you should get the following output in your terminal:

$ export POD_NAME=$(kubectl get pods --namespace default -l "app=concourse-web" -o jsonpath="{.items[0].metadata.name}")

$ echo "Visit http://127.0.0.1:8080 to use Concourse"

$ kubectl port-forward --namespace default $POD_NAME 8080:8080

Visit http://127.0.0.1:8080 to use Concourse

Log in with fly (this assumes that fly is on your PATH). The example below targets Concourse running at 127.0.0.1 (localhost), reachable through the port forward shown above.

$ fly -t k8s login -c http://localhost:8080 -u concourse -p concourse

Next run the command to create the pipeline.

$ ./set_pipeline.sh github-webhook k8s credentials-k8s.yml

Not really. This is an opinionated pipeline, which is why we made some opinionated decisions. Check out the documentation to see what those decisions are.

Sure! It’s open-source! The important thing is that the core part of the logic is written in Bash scripts. That way, in the majority of cases, you could change only the bash scripts without changing the whole pipeline. You can check out the scripts here.

Furthermore, if you only want to customize a particular function under common/src/main/bash, you can provide your own function under common/src/main/bash/<some custom identifier>, where <some custom identifier> is equal to the value of the CUSTOM_SCRIPT_IDENTIFIER environment variable. It defaults to custom.
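To make the lookup rule concrete, here is a small sketch of how the directory for custom functions could be derived from CUSTOM_SCRIPT_IDENTIFIER. The function name custom_script_dir is hypothetical and the pipeline's own scripts may resolve the path differently; only the base path and the custom default come from the text above.

```shell
#!/usr/bin/env bash
# Sketch only: computes the directory that would hold your custom functions.
custom_script_dir() {
  local base_dir="common/src/main/bash"
  # CUSTOM_SCRIPT_IDENTIFIER falls back to "custom" when unset or empty.
  echo "${base_dir}/${CUSTOM_SCRIPT_IDENTIFIER:-custom}"
}

# Prints the directory for the current value of CUSTOM_SCRIPT_IDENTIFIER.
custom_script_dir
```

With CUSTOM_SCRIPT_IDENTIFIER set to a value such as acme (an example value), the same call would point at common/src/main/bash/acme.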

When deploying the app to stage or prod you can get an Insufficient resources exception. The way to solve it is to kill some apps from the test / stage env. To achieve that just call

cf target -o pcfdev-org -s pcfdev-test

cf stop github-webhook

cf stop github-eureka

cf stop stubrunner

You can also execute ./tools/cf-helper.sh kill-all-apps, which will remove all demo-related apps deployed to PCF Dev.

You must have pushed some tags and have removed the Artifactory volume that contained them. To fix this, just remove the tags

git tag -l | xargs -n 1 git push --delete origin

Yes! Assuming that the pipeline name is github-webhook and the job name is build-and-upload, you can run

fly watch --job github-webhook/build-and-upload -t docker

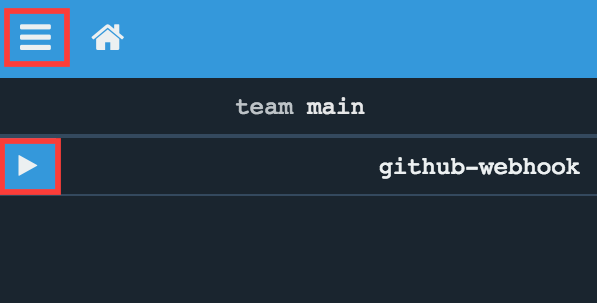

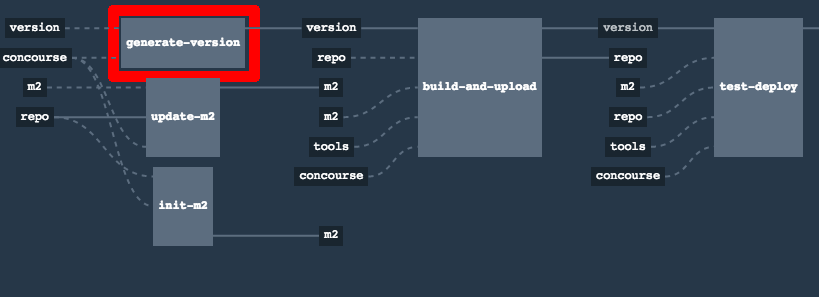

Don’t worry… most likely you’ve just forgotten to click the play button to unpause the pipeline. Click the top left, expand the list of pipelines and click the play button next to github-webhook.

Another problem that might occur is that you need to have the version branch. Concourse will wait for the version branch to appear in your repo. So in order for the pipeline to start, ensure that when doing some git operations you haven’t forgotten to create / copy the version branch too.

If you play around with Jenkins / Concourse you might end up with the routes occupied:

Using route github-webhook-test.local.pcfdev.io

Binding github-webhook-test.local.pcfdev.io to github-webhook...

FAILED

The route github-webhook-test.local.pcfdev.io is already in use.

Just delete the routes

yes | cf delete-route local.pcfdev.io -n github-webhook-test

yes | cf delete-route local.pcfdev.io -n github-eureka-test

yes | cf delete-route local.pcfdev.io -n stubrunner-test

yes | cf delete-route local.pcfdev.io -n github-webhook-stage

yes | cf delete-route local.pcfdev.io -n github-eureka-stage

yes | cf delete-route local.pcfdev.io -n github-webhook-prod

yes | cf delete-route local.pcfdev.io -n github-eureka-prod

You can also execute the ./tools/cf-helper.sh delete-routes

Most likely you’ve forgotten to update your local settings.xml with the Artifactory’s setup. Check out this section of the docs and update your settings.xml.

When I click on it it looks like this:

resource script '/opt/resource/check []' failed: exit status 128

stderr:

Identity added: /tmp/git-resource-private-key (/tmp/git-resource-private-key)

Cloning into '/tmp/git-resource-repo-cache'...

warning: Could not find remote branch version to clone.

fatal: Remote branch version not found in upstream origin

That means that your repo doesn’t have the version branch. Please set it up.

In this section we will present the common setup of Jenkins for any platform. We will also provide answers to most frequently asked questions.

.

├── declarative-pipeline

│ └── Jenkinsfile-sample.groovy

├── jobs

│ ├── jenkins_pipeline_empty.groovy

│ ├── jenkins_pipeline_jenkinsfile_empty.groovy

│ ├── jenkins_pipeline_sample.groovy

│ └── jenkins_pipeline_sample_view.groovy

├── seed

│ ├── init.groovy

│ ├── jenkins_pipeline.groovy

│ ├── k8s

│ └── settings.xml

└── src

├── main

└── test

In the declarative-pipeline folder you can find a definition of a Jenkinsfile-sample.groovy declarative pipeline. It’s used together with the Blue Ocean UI.

In the jobs folder you have all the seed jobs that will generate pipelines.

- jenkins_pipeline_empty.groovy - is a template of a pipeline with empty steps using the Jenkins Job DSL plugin

- jenkins_pipeline_jenkinsfile_empty.groovy - is a template of a pipeline with empty steps using the Pipeline plugin

- jenkins_pipeline_sample.groovy - is an opinionated implementation using the Jenkins Job DSL plugin

- jenkins_pipeline_sample_view.groovy - builds the views for the pipelines

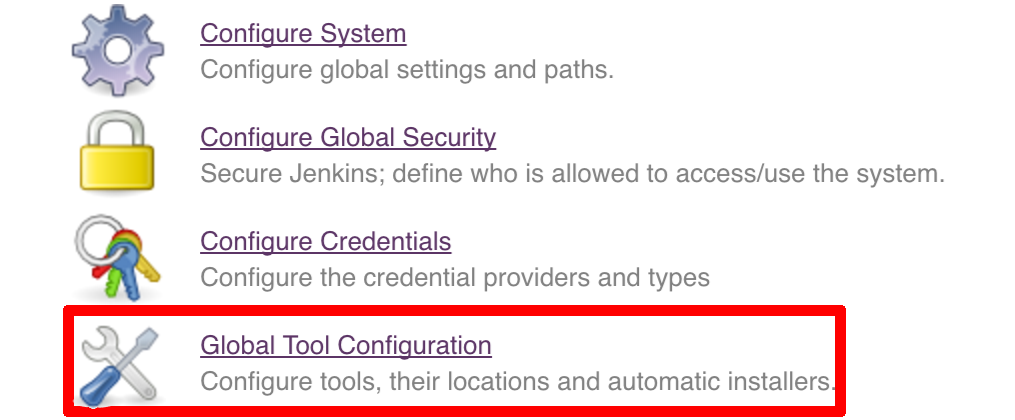

In the seed folder you have the init.groovy file which is executed when Jenkins starts.

That way we can configure most of Jenkins options for you (adding credentials, JDK etc.).

jenkins_pipeline.groovy contains logic to build a seed job (that way you don’t have to even click that

job - we generate it for you). Under the k8s folder there are all the configuration

files required for deployment to a Kubernetes cluster.

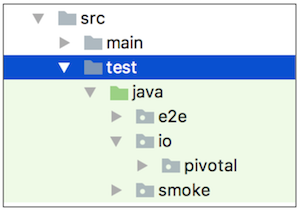

In the src folder you have production and test classes needed for you to build your own pipeline.

Currently we have tests only cause the whole logic resides in the jenkins_pipeline_sample file.

All the steps below are not necessary to run the demo. They are needed only when you want to do some custom changes.

It’s enough to set the ARTIFACTORY_URL environment variable before executing tools/deploy-infra.sh. Example for deploying to Artifactory at IP 192.168.99.100:

git clone https://github.com/spring-cloud/spring-cloud-pipelines

cd spring-cloud-pipelines/

ARTIFACTORY_URL="http://192.168.99.100:8081/artifactory/libs-release-local" ./tools/deploy-infra.sh

![[Tip]](images/tip.png) | Tip |

|---|---|

If you want to use the default connection to the Docker version of Artifactory you can skip this step |

So that ./mvnw deploy works with Artifactory from Docker we’re already copying the missing settings.xml file for you. It looks more or less like this:

<?xml version="1.0" encoding="UTF-8"?>
<settings>
  <servers>
    <server>
      <id>${M2_SETTINGS_REPO_ID}</id>
      <username>${M2_SETTINGS_REPO_USERNAME}</username>
      <password>${M2_SETTINGS_REPO_PASSWORD}</password>
    </server>
    <server>
      <id>${DOCKER_SERVER_ID}</id>
      <username>${DOCKER_USERNAME}</username>
      <password>${DOCKER_PASSWORD}</password>
      <configuration>
        <email>${DOCKER_EMAIL}</email>
      </configuration>
    </server>
  </servers>
</settings>

As you can see the file is parameterized. In Maven it’s enough to pass the proper system property to the ./mvnw command to override that value. For example, to pass a different Docker email you’d have to call ./mvnw -DDOCKER_EMAIL=[email protected] and the value gets updated.

If you want to use your own version of Artifactory / Nexus you have to update the file (it’s in seed/settings.xml).

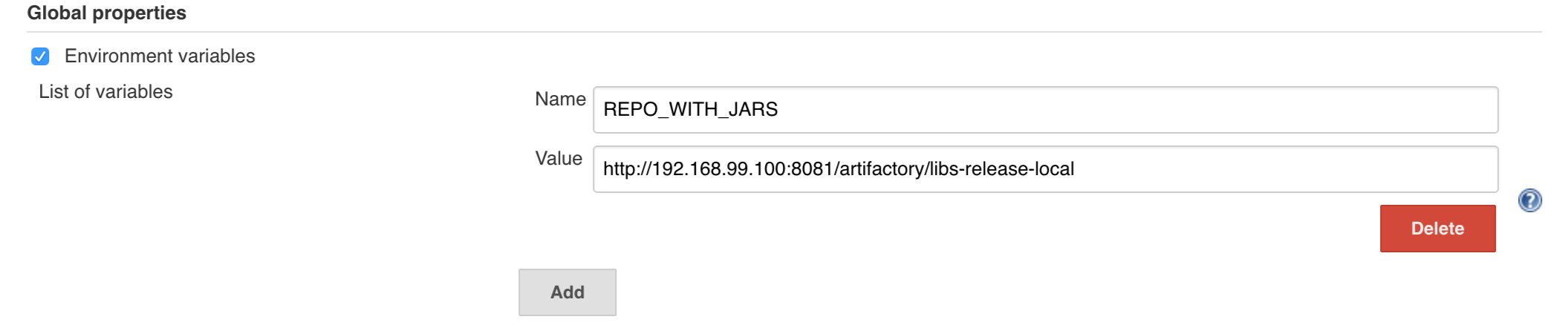

If you only want to play around with the demo that we’ve prepared, you have to set ONE variable, which is the REPOS variable.

That variable needs to consist of a comma-separated list of URLs to repositories containing business apps. So you should pass your forked repos URLs.

You can do it in the following ways:

- globally via Jenkins global env vars (then when you run the seed that variable will be taken into consideration and proper pipelines will get built)

- modify the seed job parameters (you’ll have to modify the seed job configuration and change the REPOS property)

- provide the repos parameter when running the seed job

For the sake of simplicity let’s go with the last option.
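If you keep your fork URLs in a script, the comma-separated REPOS value can be assembled rather than typed by hand. This is a minimal sketch under stated assumptions: join_repos is a hypothetical helper, and the two URLs use a placeholder organization (your-org) that you would replace with your own.

```shell
#!/usr/bin/env bash
# Sketch only: joins repository URLs into the comma-separated REPOS value.
join_repos() {
  # "$*" joins all arguments with the first character of IFS.
  local IFS=','
  echo "$*"
}

# Placeholder URLs; replace your-org with the GitHub org that holds your forks.
REPOS="$(join_repos \
  "https://github.com/your-org/github-webhook" \
  "https://github.com/your-org/github-analytics")"
echo "${REPOS}"
```

The printed value is what you would paste into the REPOS parameter of the seed job.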

![[Important]](images/important.png) | Important |

|---|---|

If you’re choosing the global envs, you HAVE to remove the other approach

(e.g. if you set the global env for |

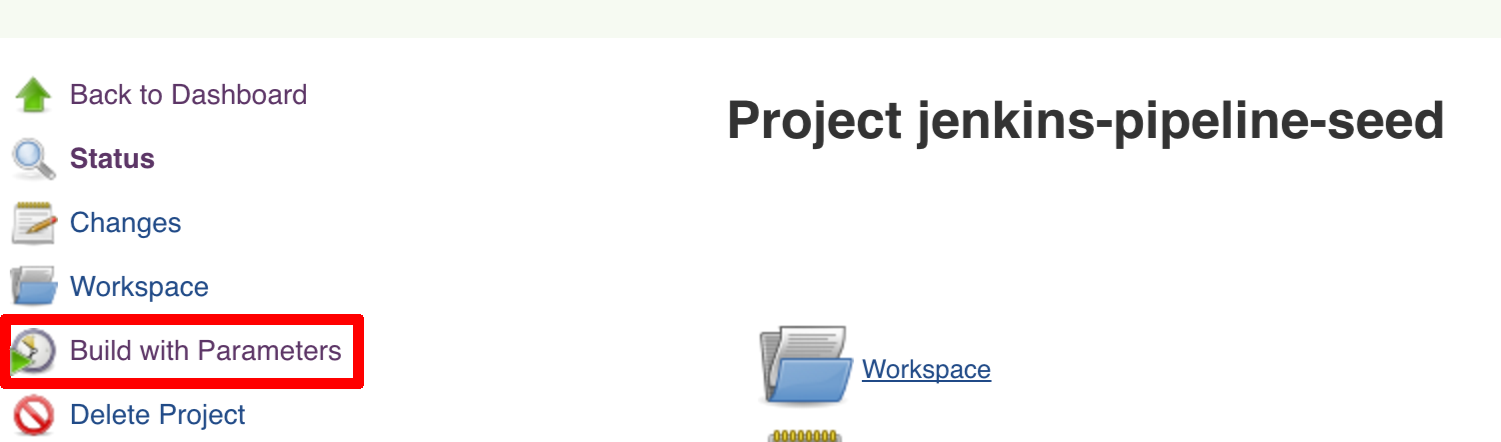

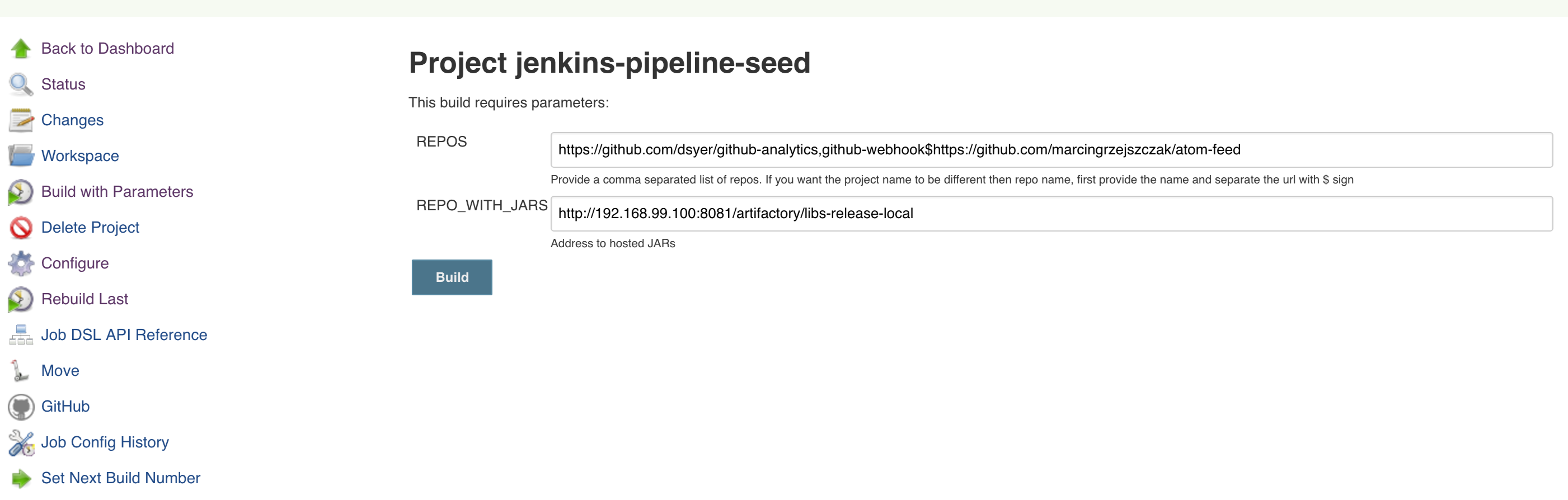

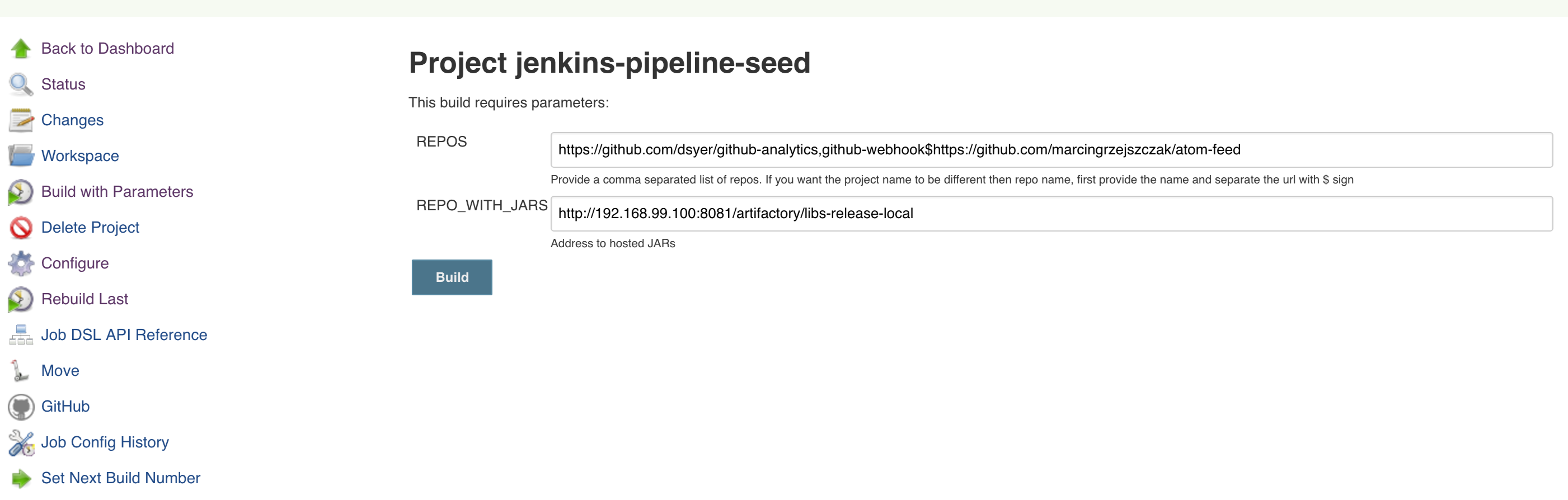

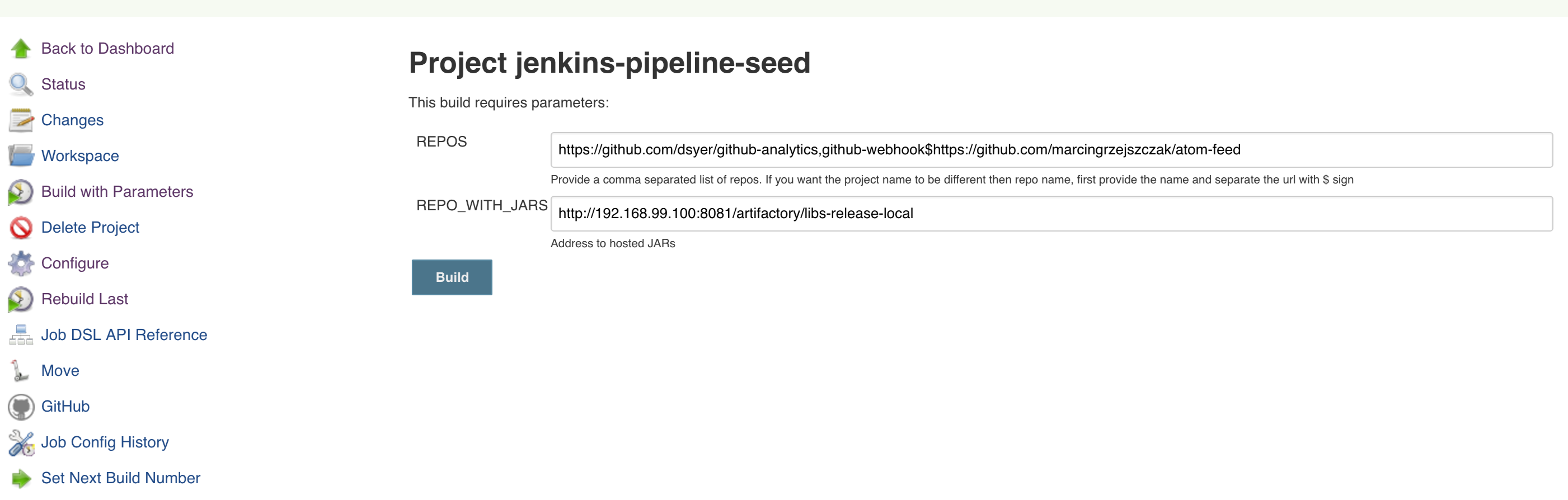

Click on the seed job and pick Build with parameters. Then as presented in the screen below (you’ll have far more properties to set) just modify the REPOS property by providing the comma separated list of URLs to your forks. Whatever you set will be parsed by the seed job and passed to the generated Jenkins jobs.

![[Tip]](images/tip.png) | Tip |

|---|---|

This is very useful when the repos you want to build differ. E.g. use

different JDK. Then some seeds can set the |

Example screen:

In the screenshot we could parametrize the REPOS and REPO_WITH_BINARIES params.

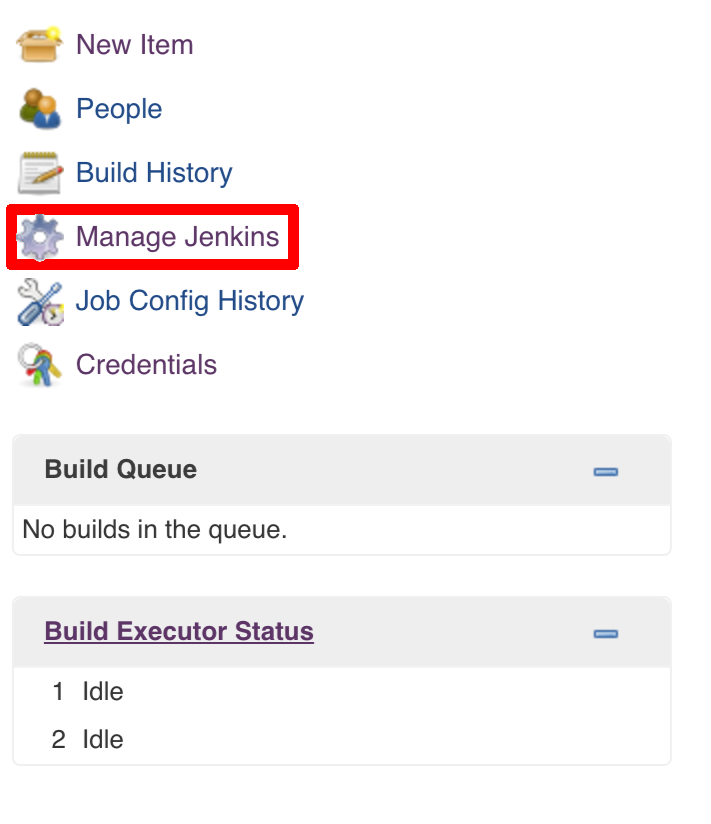

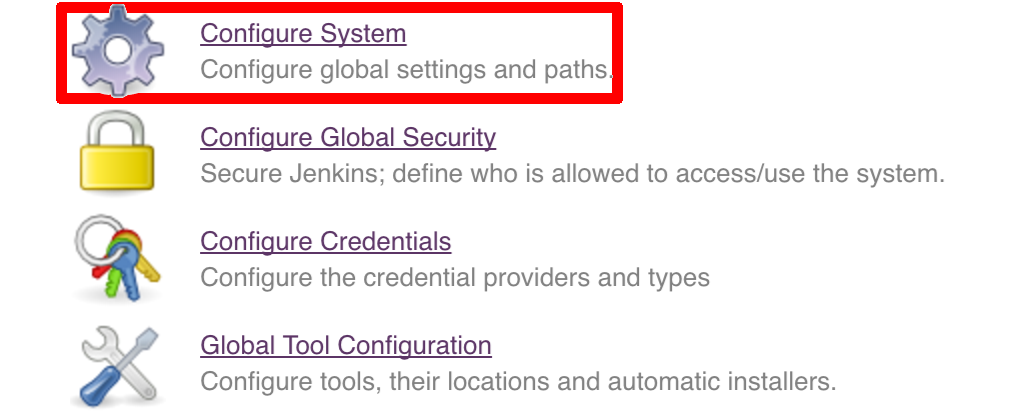

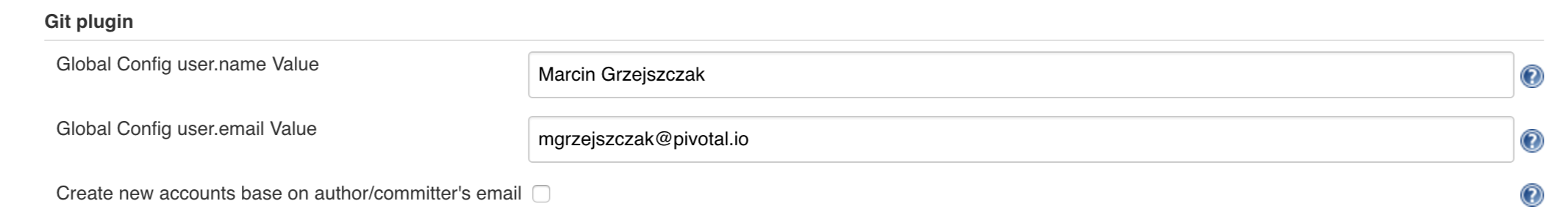

Since our pipeline is setting the git user / name explicitly for the build step you’d have to go to Configure of the build step and modify the Git name / email. If you want to set it globally you’ll have to remove the section from the build step and follow these steps to set it globally.

You can set Git email / user globally like this:

The scripts will need to access the credential in order to tag the repo.

You have to set credentials with id: git.

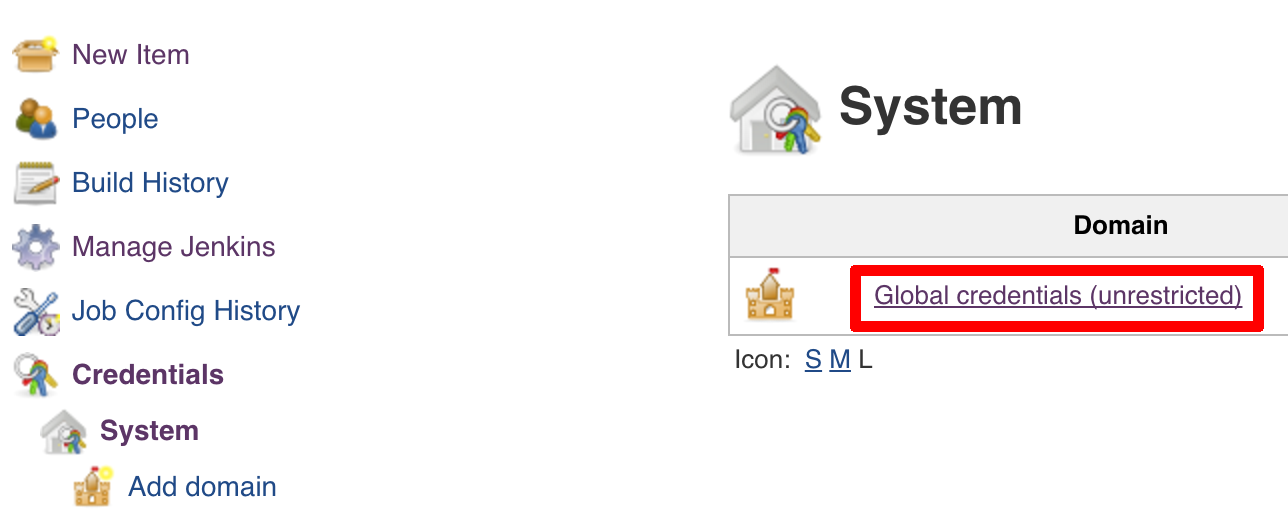

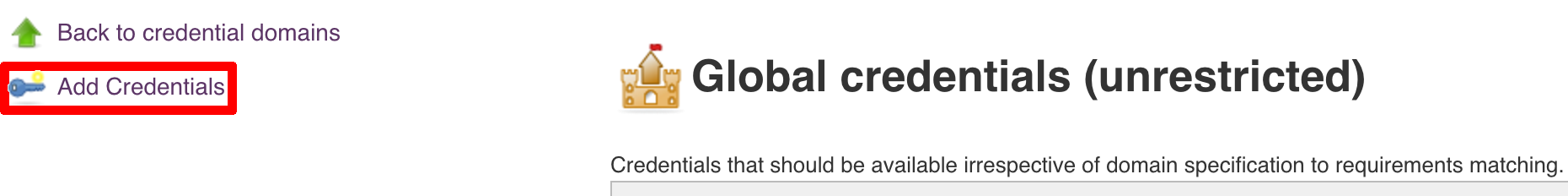

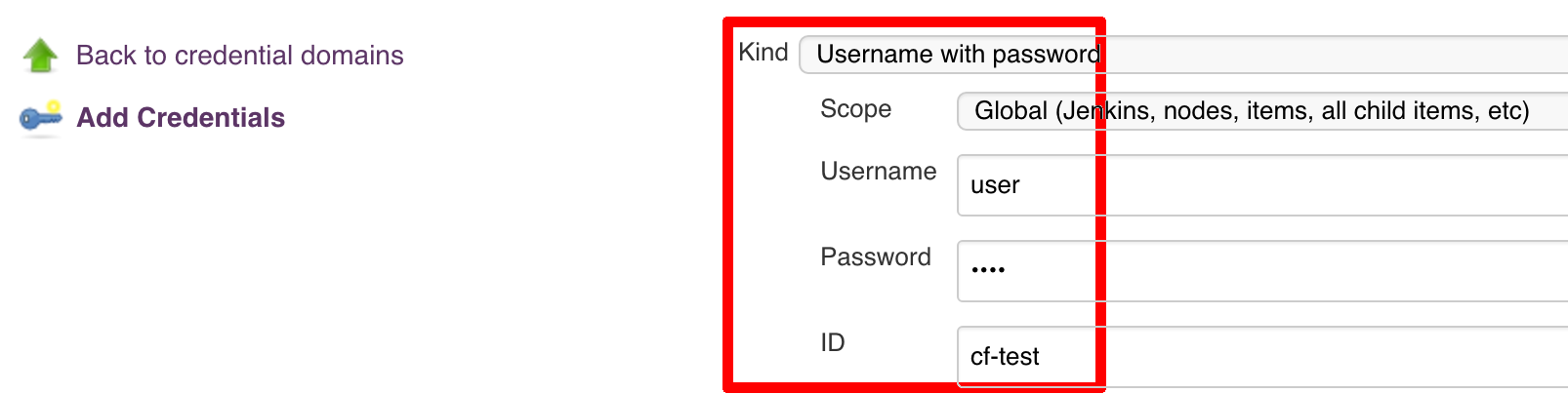

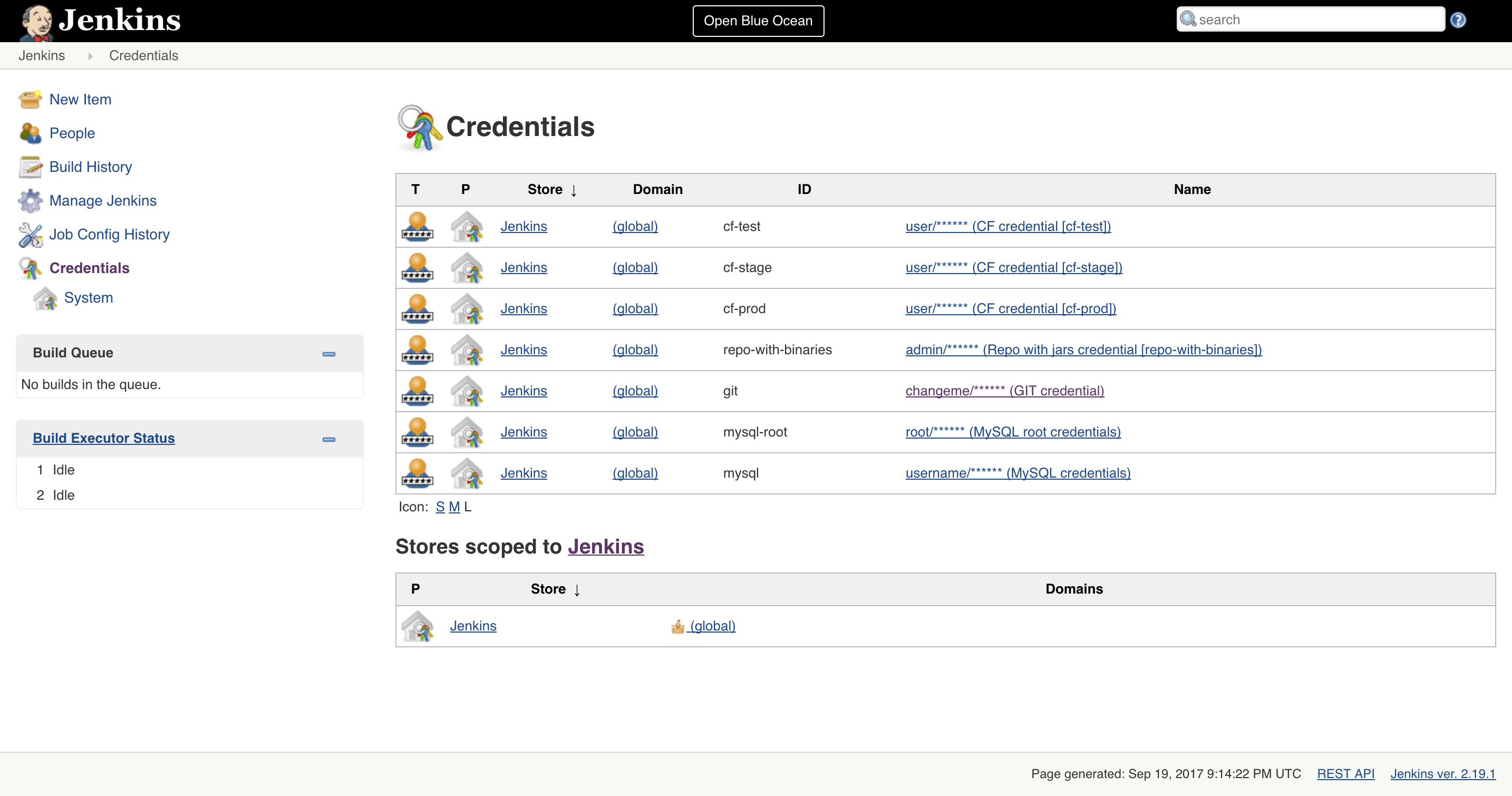

Below you can find instructions on how to set a credential (e.g. for Cloud Foundry cf-test credential but remember to provide the one with id git).

Figure 7.7. Fill out the user / password and provide the git credential ID (in this example cf-test)

./gradlew clean build

![[Warning]](images/warning.png) | Warning |

|---|---|

The test that is run only checks whether your scripts compile. |

Check out the tutorial. Provide the link to this repository in your Jenkins installation.

![[Warning]](images/warning.png) | Warning |

|---|---|

Remember that views can be overridden that’s why the suggestion is to contain in one script all the logic needed to build a view

for a single project (check out that |

If you would like to run the pre-configured Jenkins image somewhere other than your local machine, we

have an image you can pull and use on DockerHub.

The latest tag corresponds to the latest snapshot build. You can also find tags

corresponding to stable releases that you can use as well.

![[Important]](images/important.png) | Important |

|---|---|

In this chapter we assume that you perform deployment of your application to Cloud Foundry PaaS |

The Spring Cloud Pipelines repository contains job definitions and the opinionated setup pipeline using Jenkins Job DSL plugin. Those jobs will form an empty pipeline and a sample, opinionated one that you can use in your company.

All in all there are the following projects taking part in the whole microservice setup for this demo.

- Github Analytics - the app that has a REST endpoint and uses messaging. Our business application.

- Github Webhook - project that emits messages that are used by Github Analytics. Our business application.

- Eureka - simple Eureka Server. This is an infrastructure application.

- Github Analytics Stub Runner Boot - Stub Runner Boot server to be used for tests with Github Analytics. Uses Eureka and Messaging. This is an infrastructure application.

This is a guide for Jenkins Job DSL based pipeline.

If you want to just run the demo as far as possible using PCF Dev and Docker Compose, follow the steps below.

Four apps make up the pipeline; they are listed above.

You need to fork only github-webhook and github-analytics. That’s because only then will your user be able to tag and push the tag to the repo.

Jenkins + Artifactory can be run locally. To do that just execute the start.sh script from this repo.

git clone https://github.com/spring-cloud/spring-cloud-pipelines

cd spring-cloud-pipelines/jenkins

./start.sh yourGitUsername yourGitPassword yourForkedGithubOrg

Then Jenkins will be running on port 8080 and Artifactory on port 8081.

The provided parameters will be passed as env variables to the Jenkins VM and the credentials will be set up for you. That way you don’t have to do any manual work on the Jenkins side. In the above parameters, the third parameter could be yourForkedGithubOrg or yourGithubUsername. Also the REPOS env variable will contain your GitHub org in which you have the forked repos.

Instead of the Git username and password parameters you could pass -key <path_to_private_key> if you prefer to use the key-based authentication with your Git repositories.

When Artifactory is running, just execute the tools/deploy-infra.sh script from this repo.

git clone https://github.com/spring-cloud/spring-cloud-pipelines

cd spring-cloud-pipelines/

./tools/deploy-infra.sh

As a result both eureka and stub runner repos will be cloned, built and uploaded to Artifactory.

![[Tip]](images/tip.png) | Tip |

|---|---|

You can skip this step if you have CF installed and don’t want to use PCF Dev. The only thing you have to do is to set up spaces. |

![[Warning]](images/warning.png) | Warning |

|---|---|

It’s more than likely that you’ll run out of resources when you reach stage step. Don’t worry! Keep calm and clear some apps from PCF Dev and continue. |

You have to download and start PCF Dev. A link how to do it is available here.

The default credentials when using PCF Dev are:

username: user

password: pass

email: user

org: pcfdev-org

space: pcfdev-space

api: api.local.pcfdev.io

You can start the PCF Dev like this:

cf dev start

You’ll have to create 3 separate spaces (email admin, pass admin):

cf login -a https://api.local.pcfdev.io --skip-ssl-validation -u admin -p admin -o pcfdev-org

cf create-space pcfdev-test

cf set-space-role user pcfdev-org pcfdev-test SpaceDeveloper

cf create-space pcfdev-stage

cf set-space-role user pcfdev-org pcfdev-stage SpaceDeveloper

cf create-space pcfdev-prod

cf set-space-role user pcfdev-org pcfdev-prod SpaceDeveloper

You can also execute the ./tools/cf-helper.sh setup-spaces to do this.

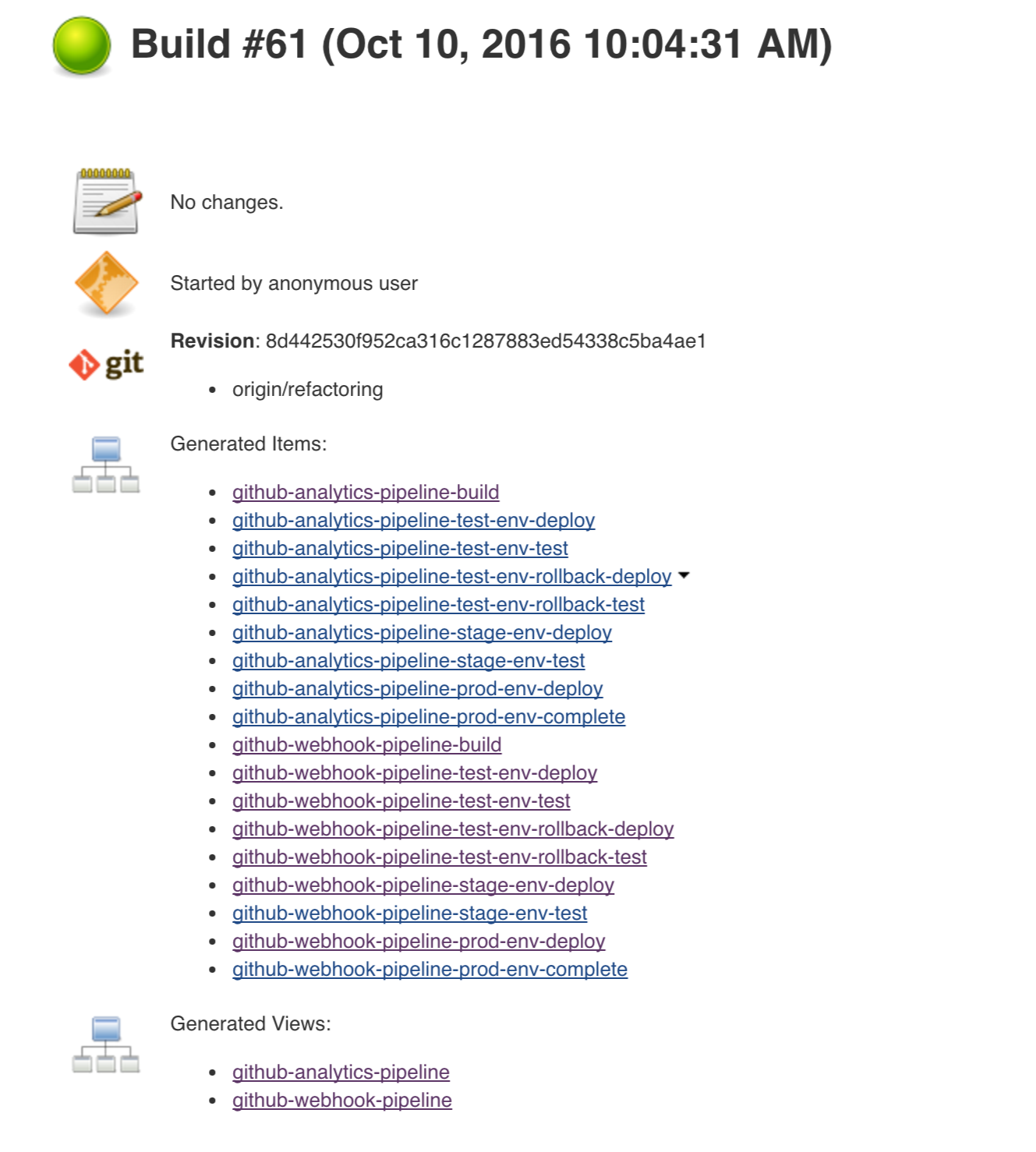

We already create the seed job for you but you’ll have to run it. When you do run it you have to provide some properties. By default we create a seed that has all the properties options, but you can delete most of it. If you set the properties as global env variables you have to remove them from the seed.

Anyways, to run the demo just provide in the REPOS var the comma-separated list of URLs of the 2 aforementioned forks of github-webhook and github-analytics.

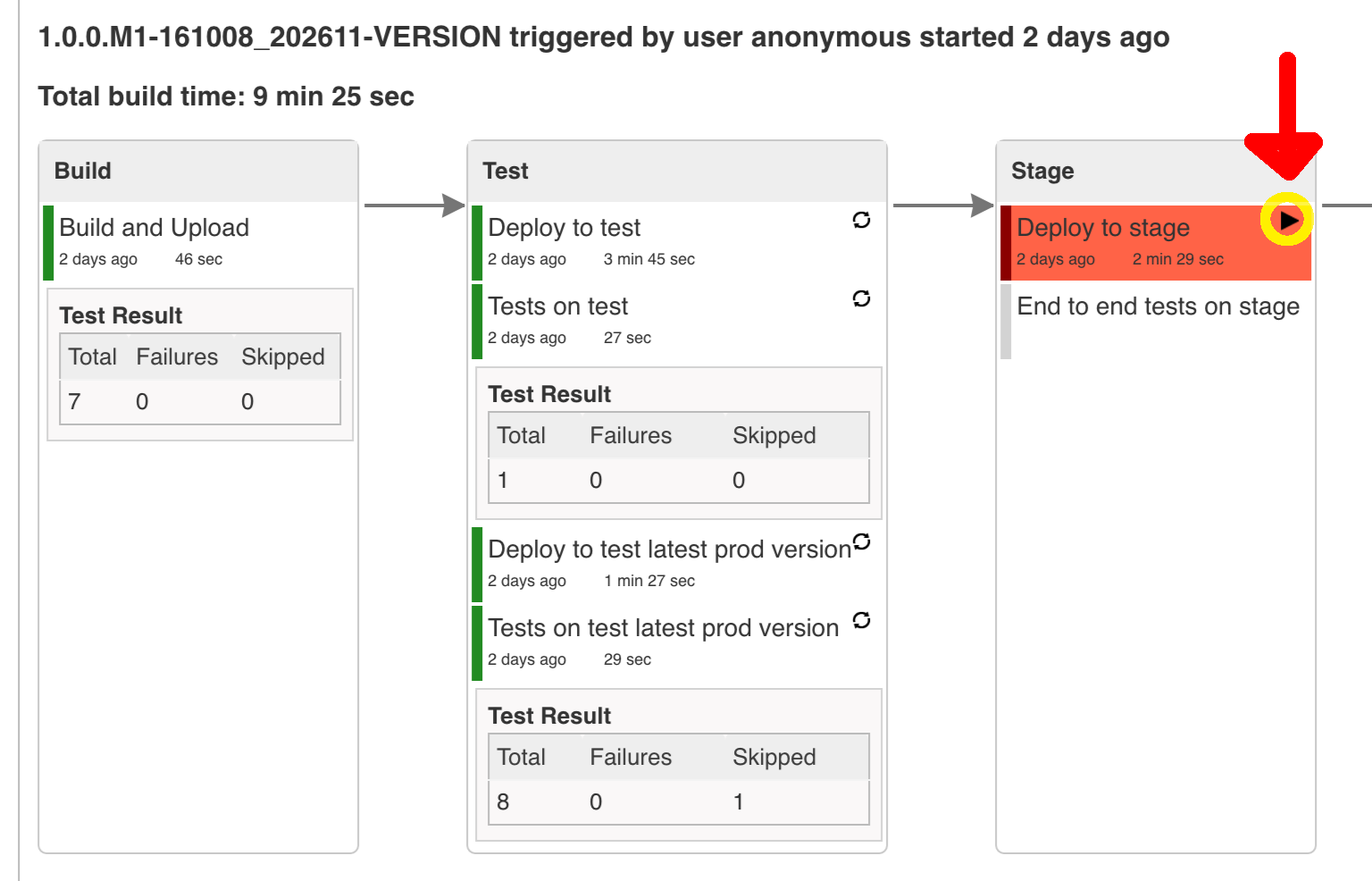

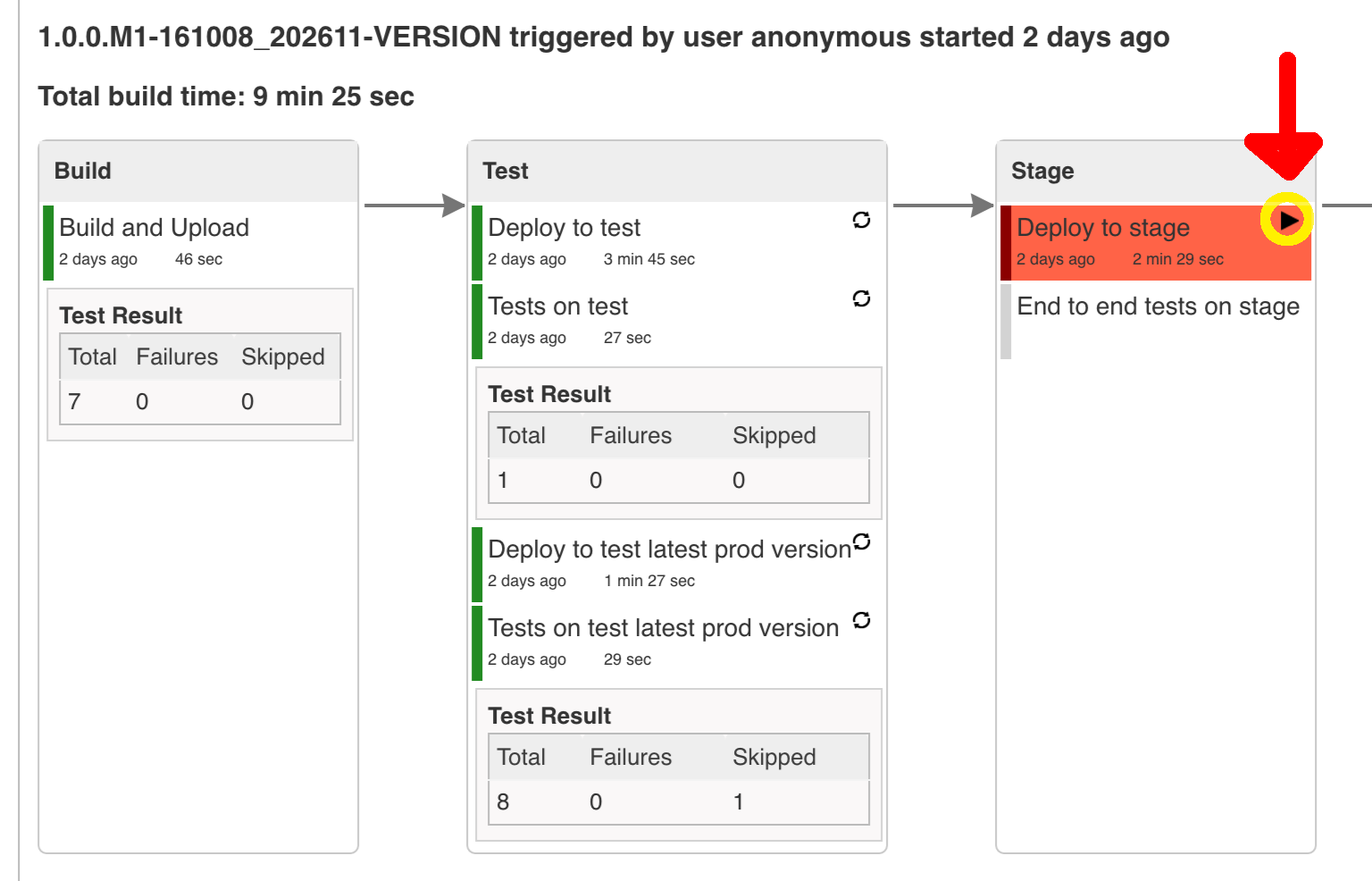

Figure 8.1. Click the 'jenkins-pipeline-seed-cf' job for Cloud Foundry and jenkins-pipeline-seed-k8s for Kubernetes

Figure 8.3. The REPOS parameter should already contain your forked repos (you’ll have more properties than the ones in the screenshot)


![[Important]](images/important.png) | Important |

|---|---|

If your build fails on the deploy previous version to stage due to missing jar, that means that you’ve forgotten to clear the tags in your repo. Typically that’s due to the fact that you’ve removed the Artifactory volume with deployed JAR whereas a tag in the repo is still pointing there. Check out this section on how to remove the tag. |

Figure 8.7. Click the manual step to go to stage (remember about killing the apps on test env). To do this click the ARROW next to the job name

![[Important]](images/important.png) | Important |

|---|---|

Most likely you will run out of memory so when reaching the stage environment it’s good to kill all apps on test. Check out the FAQ section for more details! |

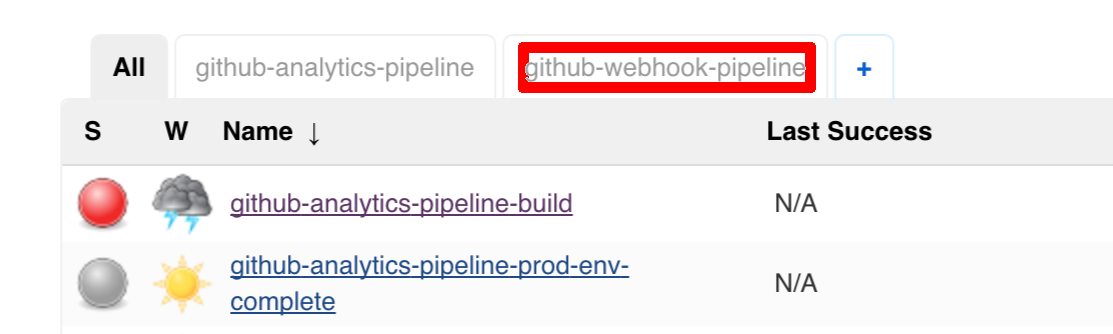

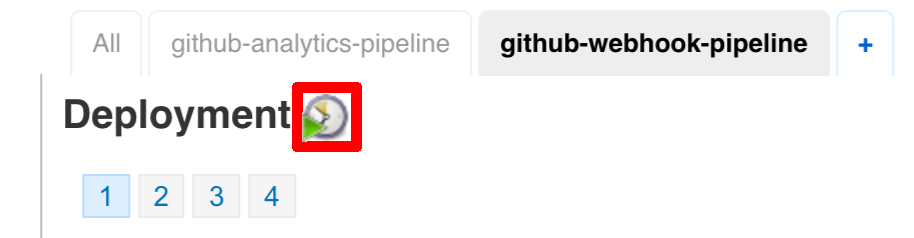

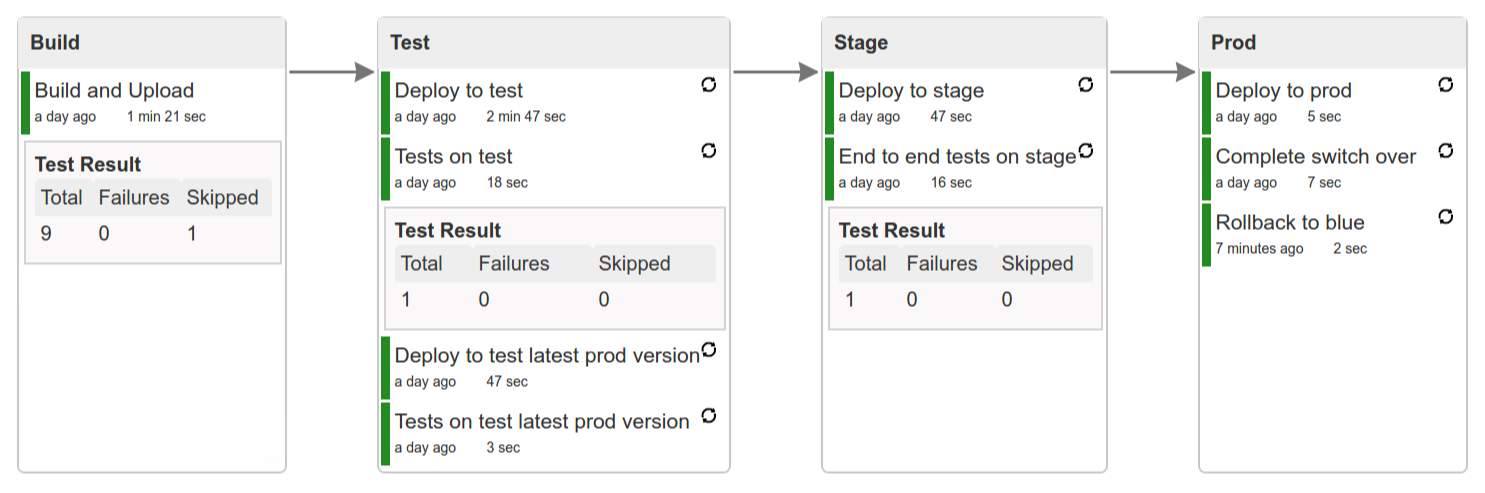

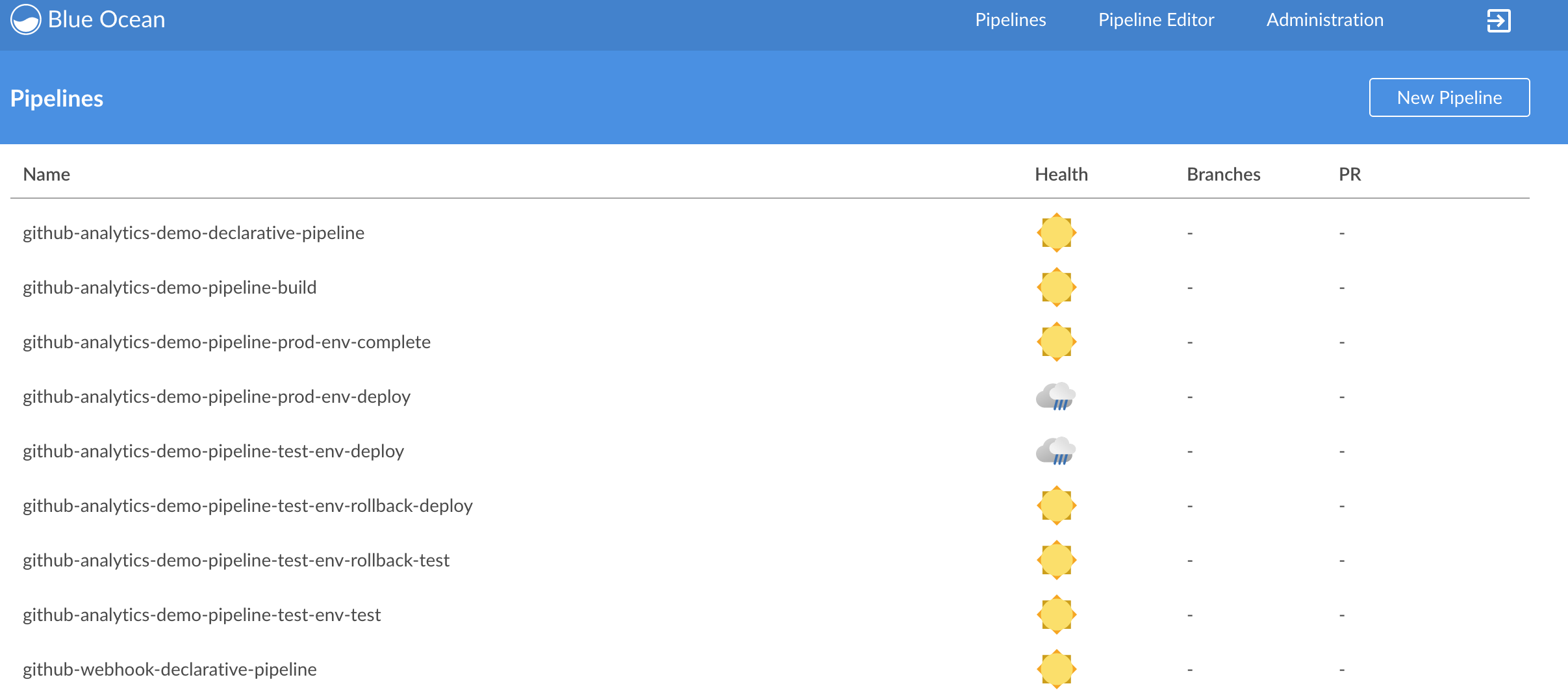

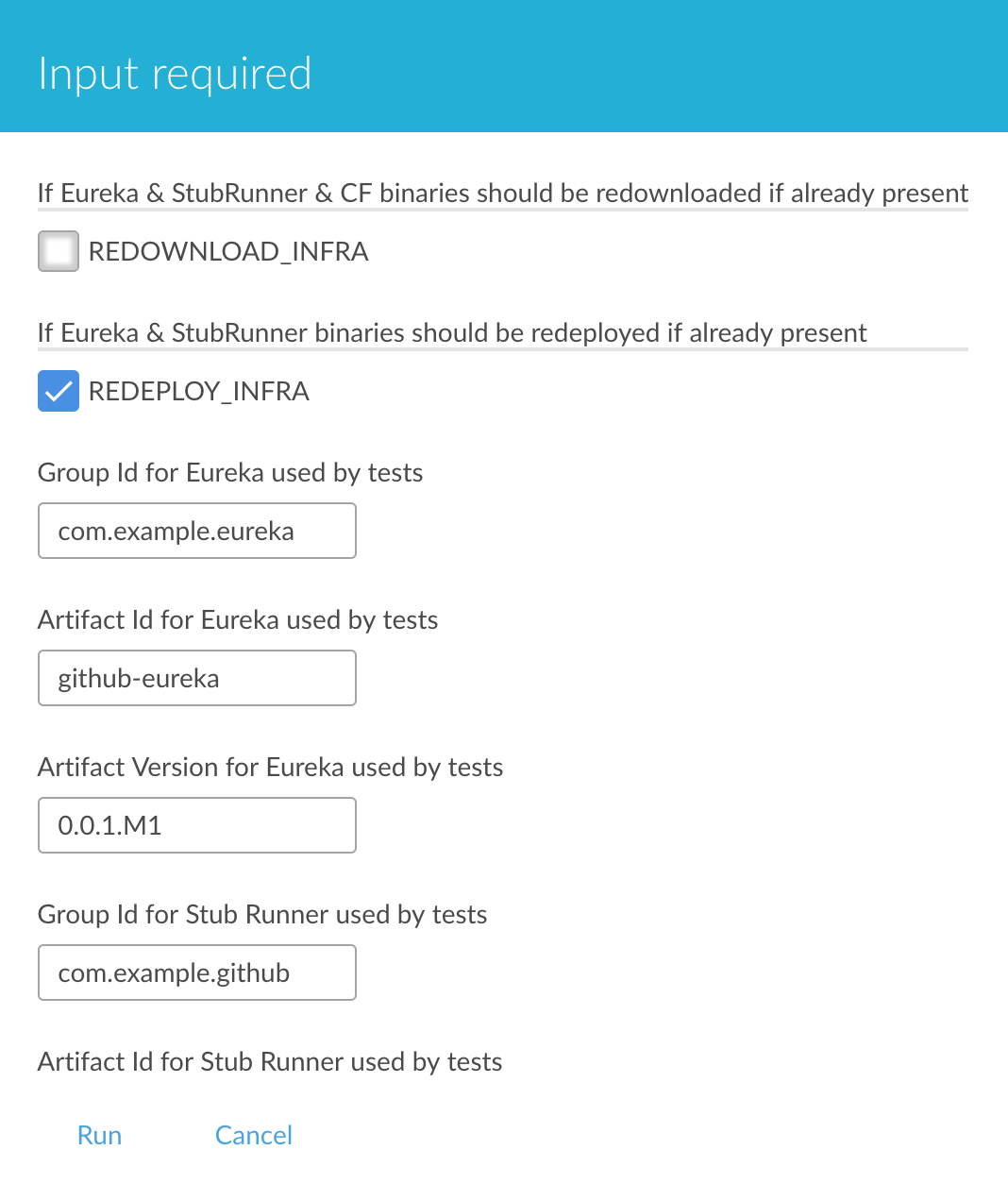

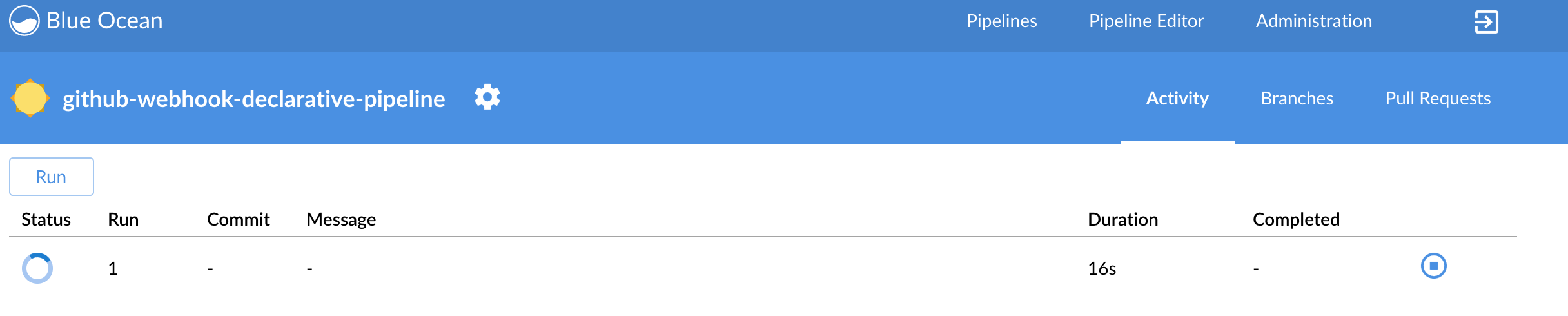

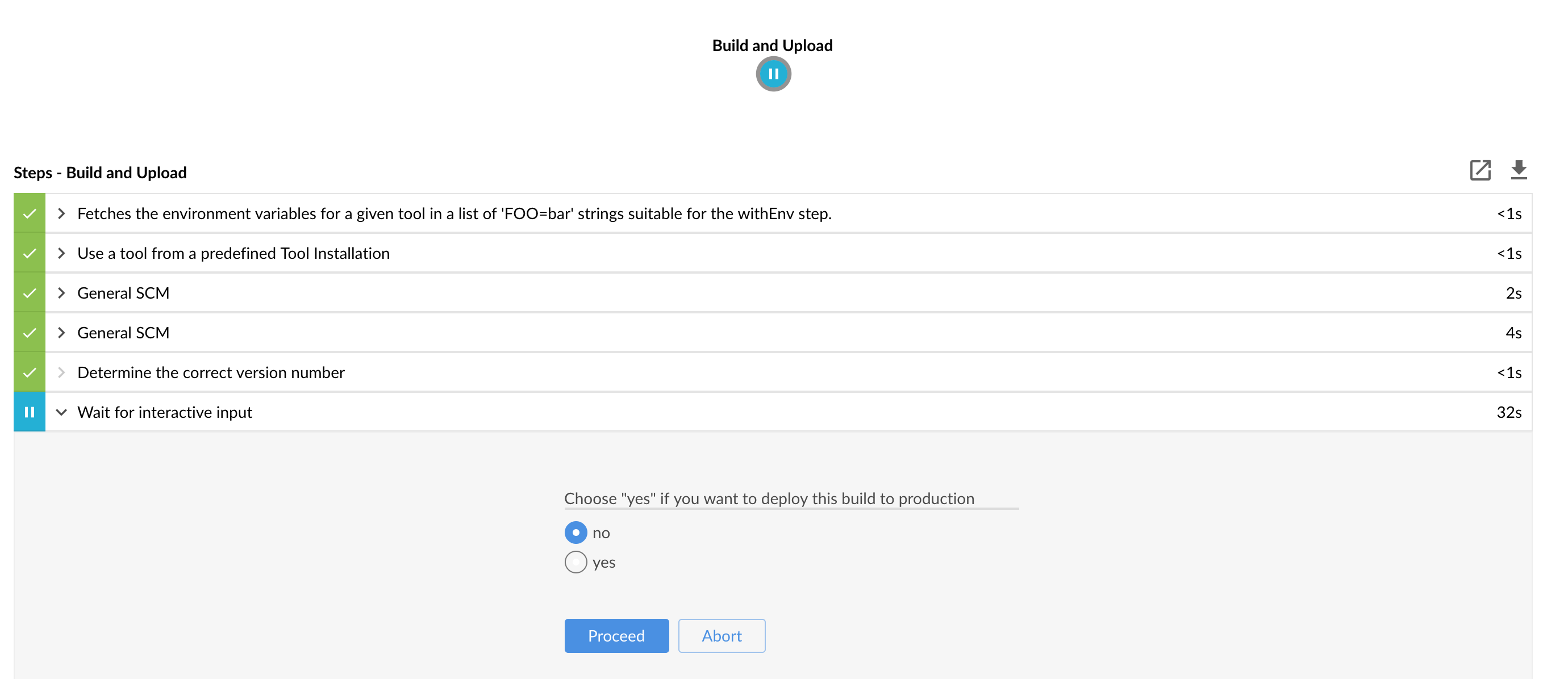

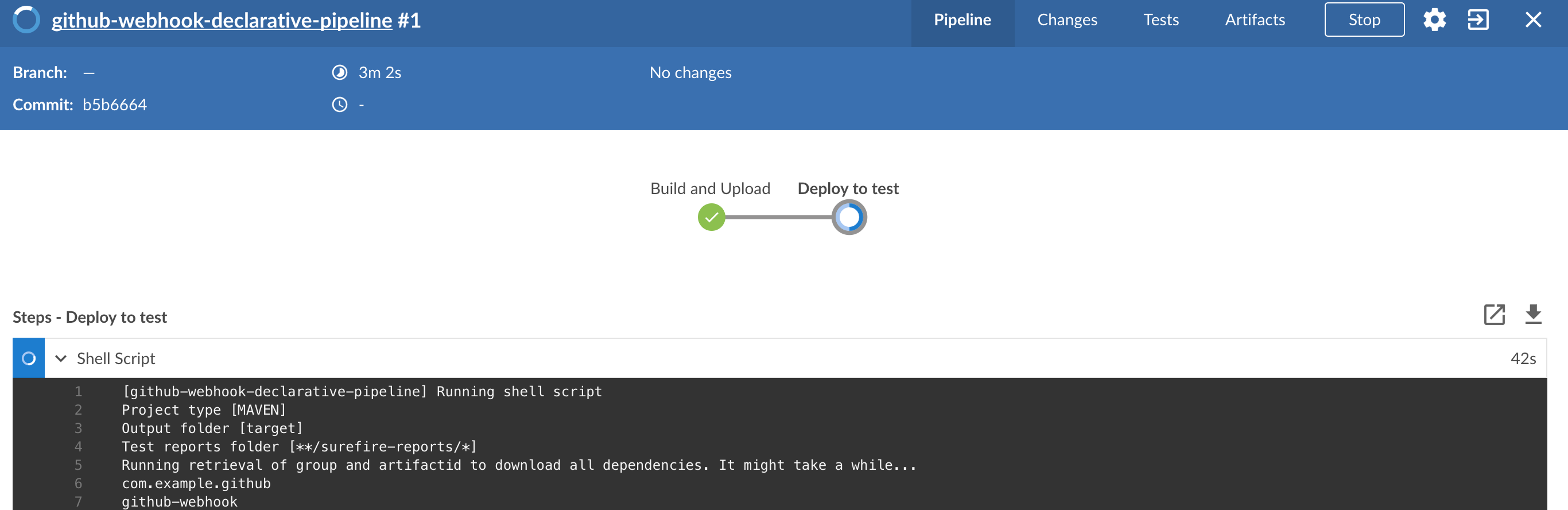

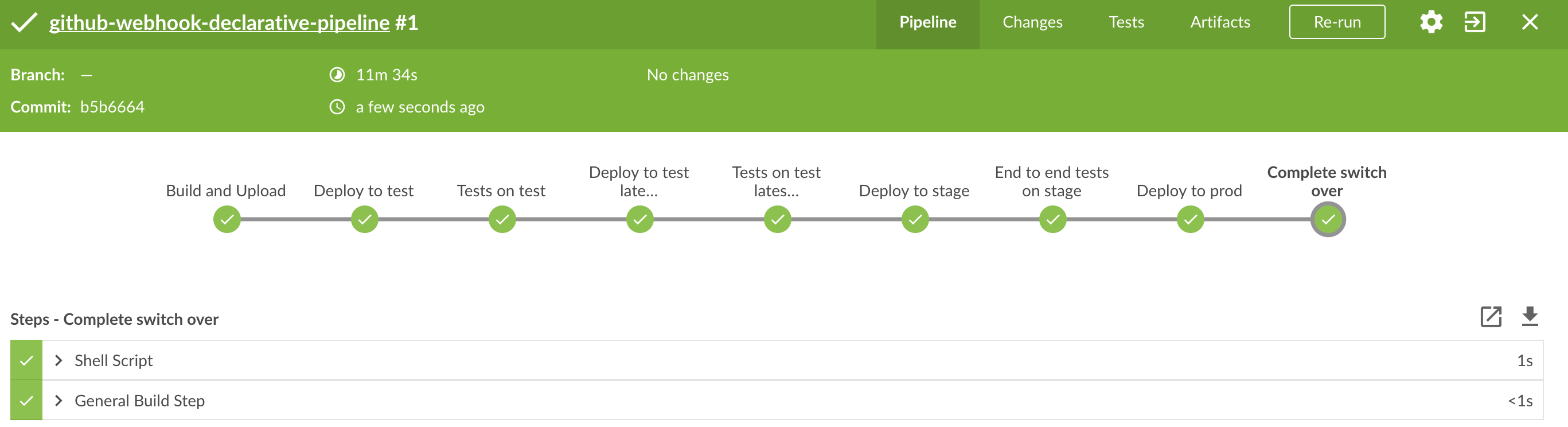

You can also use the declarative pipeline approach with the Blue Ocean UI. Here is a step by step guide to run a pipeline via this approach.

The Blue Ocean UI is available under the blue/ URL. E.g. for Docker Machine based setup http://192.168.99.100:8080/blue.

![[Important]](images/important.png) | Important |

|---|---|

It is not possible to restart a pipeline from a specific stage after a failure. Please check out this issue for more information. |

![[Warning]](images/warning.png) | Warning |

|---|---|

Currently there is no way to introduce manual steps in a performant way. Jenkins blocks an executor when a manual step is required. That means that you’ll run out of executors pretty fast. You can check out this issue and this StackOverflow question for more information. |

None of the steps below are necessary to run the demo. They are needed only when you want to make some custom changes.

The env vars that are used in all of the jobs are as follows:

| Property Name | Property Description | Default value |
|---|---|---|
| BINARY_EXTENSION | Extension of the binary uploaded to Artifactory / Nexus. Example: change this to | jar |
| PAAS_TEST_API_URL | The URL to the CF Api for TEST env | api.local.pcfdev.io |
| PAAS_STAGE_API_URL | The URL to the CF Api for STAGE env | api.local.pcfdev.io |
| PAAS_PROD_API_URL | The URL to the CF Api for PROD env | api.local.pcfdev.io |
| PAAS_TEST_ORG | Name of the org for the test env | pcfdev-org |
| PAAS_TEST_SPACE_PREFIX | Prefix of the name of the CF space for the test env to which the app name will be appended | sc-pipelines-test |
| PAAS_STAGE_ORG | Name of the org for the stage env | pcfdev-org |
| PAAS_STAGE_SPACE | Name of the space for the stage env | sc-pipelines-stage |
| PAAS_PROD_ORG | Name of the org for the prod env | pcfdev-org |
| PAAS_PROD_SPACE | Name of the space for the prod env | sc-pipelines-prod |
| REPO_WITH_BINARIES_FOR_UPLOAD | URL to repo with the deployed jars | |
| M2_SETTINGS_REPO_ID | The id of server from Maven settings.xml | artifactory-local |
| JDK_VERSION | The name of the JDK installation | jdk8 |
| PIPELINE_VERSION | What should be the version of the pipeline (ultimately also version of the jar) | 1.0.0.M1-${GROOVY,script ="new Date().format('yyMMdd_HHmmss')"}-VERSION |
| GIT_EMAIL | The email used by Git to tag repo | |
| GIT_NAME | The name used by Git to tag repo | Pivo Tal |
| PAAS_HOSTNAME_UUID | Additional suffix for the route. In a shared environment the default routes can be already taken | |
| AUTO_DEPLOY_TO_STAGE | Should deployment to stage be automatic | false |
| AUTO_DEPLOY_TO_PROD | Should deployment to prod be automatic | false |
| API_COMPATIBILITY_STEP_REQUIRED | Should api compatibility step be required | true |
| DB_ROLLBACK_STEP_REQUIRED | Should DB rollback step be present | true |
| DEPLOY_TO_STAGE_STEP_REQUIRED | Should deploy to stage step be present | true |
| JAVA_BUILDPACK_URL | The URL to the Java buildpack to be used by CF | |
| BUILD_OPTIONS | Additional options you would like to pass to the Maven / Gradle build | |

In your scripts we reference the credentials via IDs. These are the defaults for credentials

| Property Name | Property Description | Default value |
|---|---|---|
| PAAS_PROD_CREDENTIAL_ID | Credential ID for CF Prod env access | cf-prod |
| GIT_CREDENTIAL_ID | Credential ID used to tag a git repo | git |
| GIT_SSH_CREDENTIAL_ID | SSH credential ID used to tag a git repo | gitSsh |
| GIT_USE_SSH_KEY | if | false |
| REPO_WITH_BINARIES_CREDENTIAL_ID | Credential ID used for the repo with jars | repo-with-binaries |
| PAAS_TEST_CREDENTIAL_ID | Credential ID for CF Test env access | cf-test |
| PAAS_STAGE_CREDENTIAL_ID | Credential ID for CF Stage env access | cf-stage |

If you already have in your system a credential to, for example, tag a repo, you can use it by passing the value of the property GIT_CREDENTIAL_ID.

![[Tip]](images/tip.png) | Tip |

|---|---|

Check out the |

![[Important]](images/important.png) | Important |

|---|---|

In this chapter we assume that you perform deployment of your application to Kubernetes PaaS |

The Spring Cloud Pipelines repository contains job definitions and the opinionated setup pipeline using Jenkins Job DSL plugin. Those jobs will form an empty pipeline and a sample, opinionated one that you can use in your company.

All in all there are the following projects taking part in the whole microservice setup for this demo.

- Github Analytics - the app that has a REST endpoint and uses messaging. Our business application.

- Github Webhook - project that emits messages that are used by Github Analytics. Our business application.

- Eureka - simple Eureka Server. This is an infrastructure application.

- Github Analytics Stub Runner Boot - Stub Runner Boot server to be used for tests with Github Analytics. Uses Eureka and Messaging. This is an infrastructure application.

This is a guide for Jenkins Job DSL based pipeline.

If you want to just run the demo as far as possible using PCF Dev and Docker Compose

There are 4 apps that are composing the pipeline

You need to fork only these. That’s because only then will your user be able to tag and push the tag to repo.

Jenkins and Artifactory can be run locally. To do that, just execute the start.sh script from this repo.

git clone https://github.com/spring-cloud/spring-cloud-pipelines

cd spring-cloud-pipelines/jenkins

./start.sh yourGitUsername yourGitPassword yourForkedGithubOrg yourDockerRegistryOrganization yourDockerRegistryUsername yourDockerRegistryPassword yourDockerRegistryEmail

Then Jenkins will be running on port 8080 and Artifactory on port 8081.

The provided parameters will be passed as env variables to the Jenkins VM and the credentials will be set up for you. That way you don’t have to do any manual work on the Jenkins side. In the above parameters, the third parameter can be either yourForkedGithubOrg or yourGithubUsername. Also, the REPOS env variable will contain the GitHub org in which you have the forked repos.

Instead of the Git username and password parameters you could pass -key <path_to_private_key>

if you prefer to use the key-based authentication with your Git repositories.
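As a rough sketch of the key-based variant, the snippet below assembles the start.sh command with -key taking the place of the Git username and password. The key path and all other values are placeholders, and the exact argument order is an assumption based on the description above, so check the start.sh script itself before relying on it.

```shell
# Placeholder values; replace them with your own.
GIT_KEY_PATH="${HOME}/.ssh/id_rsa"            # hypothetical path to your private key
FORKED_ORG="yourForkedGithubOrg"
DOCKER_ORG="yourDockerRegistryOrganization"
DOCKER_USER="yourDockerRegistryUsername"
DOCKER_PASSWORD="yourDockerRegistryPassword"
DOCKER_EMAIL="yourDockerRegistryEmail"

# Assumption: "-key <path>" replaces the first two positional parameters
# (Git username and password); the remaining parameters keep their order.
START_CMD="./start.sh -key ${GIT_KEY_PATH} ${FORKED_ORG} ${DOCKER_ORG} ${DOCKER_USER} ${DOCKER_PASSWORD} ${DOCKER_EMAIL}"

# Print the command for review instead of running it.
echo "${START_CMD}"
```

Once the printed command looks right, run it from the spring-cloud-pipelines/jenkins directory.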

You need to pass the credentials for the Docker organization (by default we will search for the Docker images at Docker Hub) so that the pipeline will be able to push images to your org.

When Artifactory is running, just execute the tools/deploy-infra-k8s.sh script from this repo.

git clone https://github.com/spring-cloud/spring-cloud-pipelines

cd spring-cloud-pipelines/

./tools/deploy-infra-k8s.sh

As a result, both the eureka and stub runner repos will be cloned, built, and uploaded to Artifactory, and their Docker images will be built.

![[Important]](images/important.png) | Important |

|---|---|

Your local Docker process will be reused by the Jenkins instance running in Docker. That’s why you don’t have to push these images to Docker Hub. On the other hand if you run this sample in a remote Kubernetes cluster the driver will not be shared by the Jenkins workers so you can consider pushing these Docker images to Docker Hub too. |

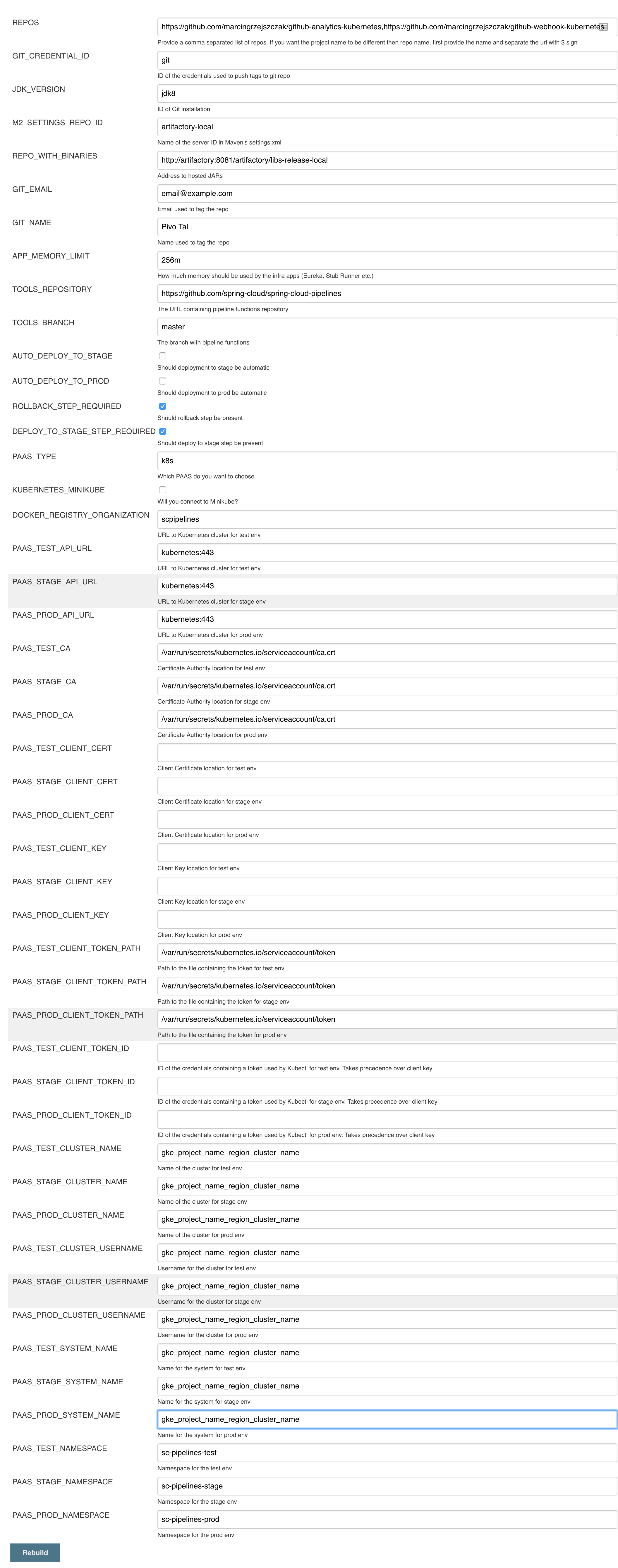

We already create the seed job for you, but you have to run it. When you run it, you have to provide some properties. By default we create a seed that lists all the property options, but you can delete most of them. If you set the properties as global env variables, you have to remove them from the seed.

Anyway, to run the demo, just provide in the REPOS var the comma-separated list of URLs of the 2 aforementioned forks of github-webhook and github-analytics.
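For illustration, the value of the REPOS parameter could be assembled as follows. The organization name is a placeholder, and the URL layout assumes your forks live on github.com under that organization.

```shell
# Placeholder; use the GitHub org (or username) that holds your forks.
FORKED_ORG="yourForkedGithubOrg"

# Comma-separated list of the two forked business applications, no spaces.
REPOS="https://github.com/${FORKED_ORG}/github-webhook,https://github.com/${FORKED_ORG}/github-analytics"

echo "${REPOS}"
```

Paste the printed value into the REPOS field of the seed job.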

Figure 9.1. Click the 'jenkins-pipeline-seed-cf' job for Cloud Foundry and jenkins-pipeline-seed-k8s for Kubernetes

Figure 9.3. The REPOS parameter should already contain your forked repos (you’ll have more properties than the ones in the screenshot)

![[Important]](images/important.png) | Important |

|---|---|

If your build fails on the "deploy previous version to stage" step due to a missing JAR, it means that you have forgotten to clear the tags in your repo. Typically that happens because you removed the Artifactory volume with the deployed JAR while a tag in the repo still points to it. Check out this section on how to remove the tag. |

Figure 9.7. Click the manual step to go to stage (remember about killing the apps on test env). To do this click the ARROW next to the job name

![[Important]](images/important.png) | Important |

|---|---|

Most likely you will run out of memory so when reaching the stage environment it’s good to kill all apps on test. Check out the FAQ section for more details! |

You can also use the declarative pipeline approach with the Blue Ocean UI. Here is a step by step guide to run a pipeline via this approach.

The Blue Ocean UI is available under the blue/ URL. E.g. for Docker Machine based setup http://192.168.99.100:8080/blue.

![[Important]](images/important.png) | Important |

|---|---|

It is not possible to restart a pipeline from a specific stage after a failure. Please check out this issue for more information |

![[Warning]](images/warning.png) | Warning |

|---|---|

Currently there is no way to introduce manual steps in a performant way. Jenkins blocks an executor whenever a manual step is required. That means that you’ll run out of executors pretty fast. You can check out this issue and this StackOverflow question for more information. |

![[Important]](images/important.png) | Important |

|---|---|

None of the steps below are necessary to run the demo. They are needed only when you want to make custom changes. |

The env vars that are used in all of the jobs are as follows:

| Property Name | Property Description | Default value |
|---|---|---|
| BUILD_OPTIONS | Additional options you would like to pass to the Maven / Gradle build | |
| DOCKER_REGISTRY_ORGANIZATION | Name of the docker organization to which Docker images should be deployed | scpipelines |
| DOCKER_REGISTRY_CREDENTIAL_ID | Credential ID used to push Docker images | docker-registry |
| DOCKER_SERVER_ID | Server ID in | docker-repo |
| DOCKER_EMAIL | Email used to connect to Docker registry and Maven builds | |
| DOCKER_REGISTRY_URL | URL to the docker registry | |
| PAAS_TEST_API_URL | URL of the API of the Kubernetes cluster for test environment | 192.168.99.100:8443 |
| PAAS_STAGE_API_URL | URL of the API of the Kubernetes cluster for stage environment | 192.168.99.100:8443 |
| PAAS_PROD_API_URL | URL of the API of the Kubernetes cluster for prod environment | 192.168.99.100:8443 |
| PAAS_TEST_CA_PATH | Path to the certificate authority for test environment | /usr/share/jenkins/cert/ca.crt |
| PAAS_STAGE_CA_PATH | Path to the certificate authority for stage environment | /usr/share/jenkins/cert/ca.crt |
| PAAS_PROD_CA_PATH | Path to the certificate authority for prod environment | /usr/share/jenkins/cert/ca.crt |
| PAAS_TEST_CLIENT_CERT_PATH | Path to the client certificate for test environment | /usr/share/jenkins/cert/apiserver.crt |
| PAAS_STAGE_CLIENT_CERT_PATH | Path to the client certificate for stage environment | /usr/share/jenkins/cert/apiserver.crt |
| PAAS_PROD_CLIENT_CERT_PATH | Path to the client certificate for prod environment | /usr/share/jenkins/cert/apiserver.crt |
| PAAS_TEST_CLIENT_KEY_PATH | Path to the client key for test environment | /usr/share/jenkins/cert/apiserver.key |
| PAAS_STAGE_CLIENT_KEY_PATH | Path to the client key for stage environment | /usr/share/jenkins/cert/apiserver.key |
| PAAS_PROD_CLIENT_KEY_PATH | Path to the client key for prod environment | /usr/share/jenkins/cert/apiserver.key |
| PAAS_TEST_CLIENT_TOKEN_PATH | Path to the file containing the token for test env | |
| PAAS_STAGE_CLIENT_TOKEN_PATH | Path to the file containing the token for stage env | |
| PAAS_PROD_CLIENT_TOKEN_PATH | Path to the file containing the token for prod env | |
| PAAS_TEST_CLIENT_TOKEN_ID | ID of the credential containing access token for test environment | |
| PAAS_STAGE_CLIENT_TOKEN_ID | ID of the credential containing access token for stage environment | |
| PAAS_PROD_CLIENT_TOKEN_ID | ID of the credential containing access token for prod environment | |
| PAAS_TEST_CLUSTER_NAME | Name of the cluster for test environment | minikube |
| PAAS_STAGE_CLUSTER_NAME | Name of the cluster for stage environment | minikube |
| PAAS_PROD_CLUSTER_NAME | Name of the cluster for prod environment | minikube |
| PAAS_TEST_CLUSTER_USERNAME | Name of the user for test environment | minikube |
| PAAS_STAGE_CLUSTER_USERNAME | Name of the user for stage environment | minikube |
| PAAS_PROD_CLUSTER_USERNAME | Name of the user for prod environment | minikube |
| PAAS_TEST_SYSTEM_NAME | Name of the system for test environment | minikube |
| PAAS_STAGE_SYSTEM_NAME | Name of the system for stage environment | minikube |
| PAAS_PROD_SYSTEM_NAME | Name of the system for prod environment | minikube |
| PAAS_TEST_NAMESPACE | Namespace for test environment | sc-pipelines-test |
| PAAS_STAGE_NAMESPACE | Namespace for stage environment | sc-pipelines-stage |
| PAAS_PROD_NAMESPACE | Namespace for prod environment | sc-pipelines-prod |
| KUBERNETES_MINIKUBE | Will you connect to Minikube? | true |
| REPO_WITH_BINARIES_FOR_UPLOAD | URL to repo with the deployed jars | |
| REPO_WITH_BINARIES_CREDENTIAL_ID | Credential ID used for the repo with jars | repo-with-binaries |
| M2_SETTINGS_REPO_ID | The id of server from Maven settings.xml | artifactory-local |
| JDK_VERSION | The name of the JDK installation | jdk8 |
| PIPELINE_VERSION | What should be the version of the pipeline (ultimately also version of the jar) | 1.0.0.M1-${GROOVY,script ="new Date().format('yyMMdd_HHmmss')"}-VERSION |
| GIT_EMAIL | The email used by Git to tag repo | |
| GIT_NAME | The name used by Git to tag repo | Pivo Tal |
| AUTO_DEPLOY_TO_STAGE | Should deployment to stage be automatic | false |
| AUTO_DEPLOY_TO_PROD | Should deployment to prod be automatic | false |
| API_COMPATIBILITY_STEP_REQUIRED | Should api compatibility step be required | true |
| DB_ROLLBACK_STEP_REQUIRED | Should DB rollback step be present | true |
| DEPLOY_TO_STAGE_STEP_REQUIRED | Should deploy to stage step be present | true |
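To make the roles of the Kubernetes variables more concrete, here is a minimal sketch of how the test-environment values from the table above could map onto a kubectl context. It only prints the commands. The mapping itself is an assumption for illustration (in particular the https:// prefix on the API URL and the use of the system name as the context name), not a description of what the pipeline scripts actually execute. The function name print_test_context_commands is hypothetical.

```shell
# Defaults copied from the table above; export a variable beforehand to override it.
PAAS_TEST_API_URL="${PAAS_TEST_API_URL:-192.168.99.100:8443}"
PAAS_TEST_CA_PATH="${PAAS_TEST_CA_PATH:-/usr/share/jenkins/cert/ca.crt}"
PAAS_TEST_CLIENT_CERT_PATH="${PAAS_TEST_CLIENT_CERT_PATH:-/usr/share/jenkins/cert/apiserver.crt}"
PAAS_TEST_CLIENT_KEY_PATH="${PAAS_TEST_CLIENT_KEY_PATH:-/usr/share/jenkins/cert/apiserver.key}"
PAAS_TEST_CLUSTER_NAME="${PAAS_TEST_CLUSTER_NAME:-minikube}"
PAAS_TEST_CLUSTER_USERNAME="${PAAS_TEST_CLUSTER_USERNAME:-minikube}"
PAAS_TEST_SYSTEM_NAME="${PAAS_TEST_SYSTEM_NAME:-minikube}"
PAAS_TEST_NAMESPACE="${PAAS_TEST_NAMESPACE:-sc-pipelines-test}"

# Hypothetical helper: prints the kubectl commands so the mapping can be reviewed first.
print_test_context_commands() {
  # Cluster entry: API URL plus certificate authority (https:// prefix is an assumption).
  echo "kubectl config set-cluster ${PAAS_TEST_CLUSTER_NAME} --server=https://${PAAS_TEST_API_URL} --certificate-authority=${PAAS_TEST_CA_PATH}"
  # User entry: client certificate and key.
  echo "kubectl config set-credentials ${PAAS_TEST_CLUSTER_USERNAME} --client-certificate=${PAAS_TEST_CLIENT_CERT_PATH} --client-key=${PAAS_TEST_CLIENT_KEY_PATH}"
  # Context entry: ties cluster, user and namespace together (context name is an assumption).
  echo "kubectl config set-context ${PAAS_TEST_SYSTEM_NAME} --cluster=${PAAS_TEST_CLUSTER_NAME} --user=${PAAS_TEST_CLUSTER_USERNAME} --namespace=${PAAS_TEST_NAMESPACE}"
  echo "kubectl config use-context ${PAAS_TEST_SYSTEM_NAME}"
}

print_test_context_commands
```

The same pattern would apply to the STAGE and PROD variables. When a token is used instead of a client certificate, kubectl config set-credentials accepts --token in place of the certificate and key flags.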

![[Important]](images/important.png) | Important |

|---|---|

Skip this step if you’re not using GCE |

In order to use GCE we need to have gcloud running. If you already have the CLI installed, skip this step. If not, execute the following command to download the CLI and start the installer

$ ./tools/k8s-helper.sh download-gcloud

Next, configure gcloud. Execute gcloud init and log in

to your cluster. You will get redirected to a login page, pick the

proper Google account and log in.

Pick an existing project or create a new one.

Go to your platform page (click on Container Engine) in GCP and connect to your cluster

$ CLUSTER_NAME=...

$ ZONE=us-east1-b

$ PROJECT_NAME=...

$ gcloud container clusters get-credentials ${CLUSTER_NAME} --zone ${ZONE} --project ${PROJECT_NAME}

$ kubectl proxy

The Kubernetes dashboard will be running at http://localhost:8001/ui/.

We’ll need a Persistent Disk for our Jenkins installation. Let’s create it

$ ZONE=us-east1-b

$ gcloud compute disks create --size=200GB --zone=${ZONE} sc-pipelines-jenkins-disk

Now that the disk has been created, we need to format it. You can check out the instructions on how to do it here - https://cloud.google.com/compute/docs/disks/add-persistent-disk#formatting

![[Important]](images/important.png) | Important |

|---|---|

Skip this step if you’re not using Kubo or GCE |

This section describes the steps required to deploy Jenkins and Artifactory to a Kubernetes cluster deployed via Kubo.

![[Tip]](images/tip.png) | Tip |

|---|---|

To see the dashboard just do |

- Log in to the cluster

- Deploy Jenkins and Artifactory to the cluster

  - ./tools/k8s-helper.sh setup-tools-infra-vsphere for a cluster deployed on VSphere

  - ./tools/k8s-helper.sh setup-tools-infra-gce for a cluster deployed to GCE

- Forward the ports so that you can access the Jenkins UI from your local machine

$ NAMESPACE=default

$ JENKINS_POD=jenkins-1430785859-nfhx4

$ LOCAL_PORT=32044

$ CONTAINER_PORT=8080

$ kubectl port-forward --namespace=${NAMESPACE} ${JENKINS_POD} ${LOCAL_PORT}:${CONTAINER_PORT}

- Go to Credentials, click System and Global credentials

- Update git, repo-with-binaries and docker-registry credentials

- Run the jenkins-pipeline-k8s-seed seed job and fill it out with the following data

- Put kubernetes.default:443 here (or KUBERNETES_API:KUBERNETES_PORT)

  - PAAS_TEST_API_URL

  - PAAS_STAGE_API_URL

  - PAAS_PROD_API_URL

- Put /var/run/secrets/kubernetes.io/serviceaccount/ca.crt data here

  - PAAS_TEST_CA_PATH

  - PAAS_STAGE_CA_PATH

  - PAAS_PROD_CA_PATH

- Uncheck the Kubernetes Minikube value

- Clear the following vars

  - PAAS_TEST_CLIENT_CERT_PATH

  - PAAS_STAGE_CLIENT_CERT_PATH

  - PAAS_PROD_CLIENT_CERT_PATH

  - PAAS_TEST_CLIENT_KEY_PATH

  - PAAS_STAGE_CLIENT_KEY_PATH

  - PAAS_PROD_CLIENT_KEY_PATH

- Set /var/run/secrets/kubernetes.io/serviceaccount/token value to these vars

  - PAAS_TEST_CLIENT_TOKEN_PATH

  - PAAS_STAGE_CLIENT_TOKEN_PATH

  - PAAS_PROD_CLIENT_TOKEN_PATH

- Set the cluster name to these vars (you can get it by calling kubectl config current-context)

  - PAAS_TEST_CLUSTER_NAME

  - PAAS_STAGE_CLUSTER_NAME

  - PAAS_PROD_CLUSTER_NAME

Set the system name to these vars (you can get it by calling